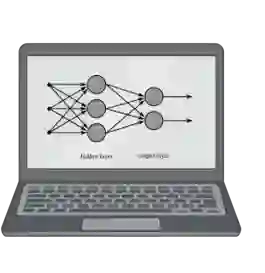

Causality and eXplainable Artificial Intelligence (XAI) have developed as separate fields in computer science, even though the underlying concepts of causation and explanation share ancient roots. This separation is reinforced by the lack of review works that cover the two fields jointly. In this paper, we survey the literature to understand how and to what extent causality and XAI are intertwined. More precisely, we seek to uncover what kinds of relationships exist between the two concepts and how one can benefit from them, for instance, in building trust in AI systems. We identify three main perspectives. The first sees the lack of causality as one of the major limitations of current AI and XAI approaches and investigates the "optimal" form of explanations. The second is pragmatic and considers XAI a tool to foster scientific exploration for causal inquiry through the identification of experimental manipulations worth pursuing. The third supports the idea that causality is propaedeutic to XAI in three possible ways: exploiting concepts borrowed from causality to support or improve XAI, using counterfactuals for explainability, and treating access to a causal model as explanatory in itself. To complement our analysis, we also list relevant software solutions used to automate causal tasks. We believe our work provides a unified view of the two fields by highlighting potential bridges between them and uncovering possible limitations.
