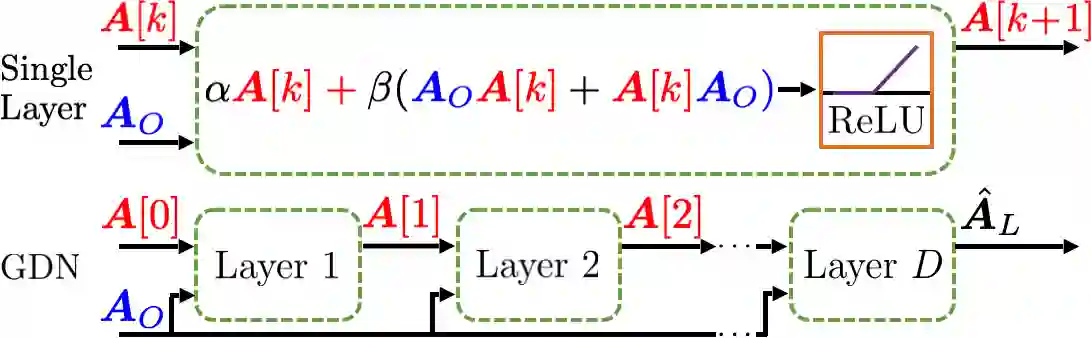

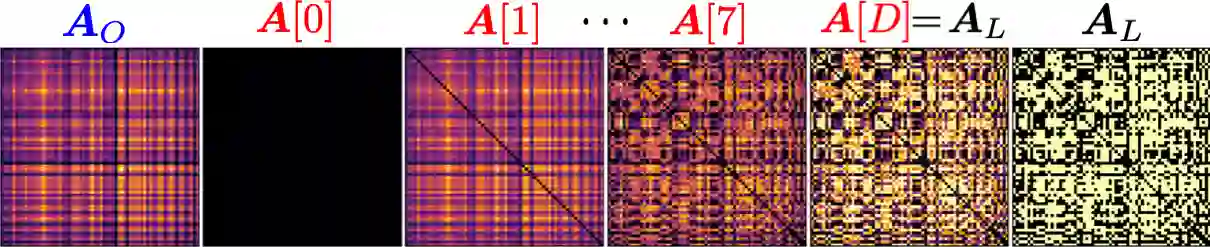

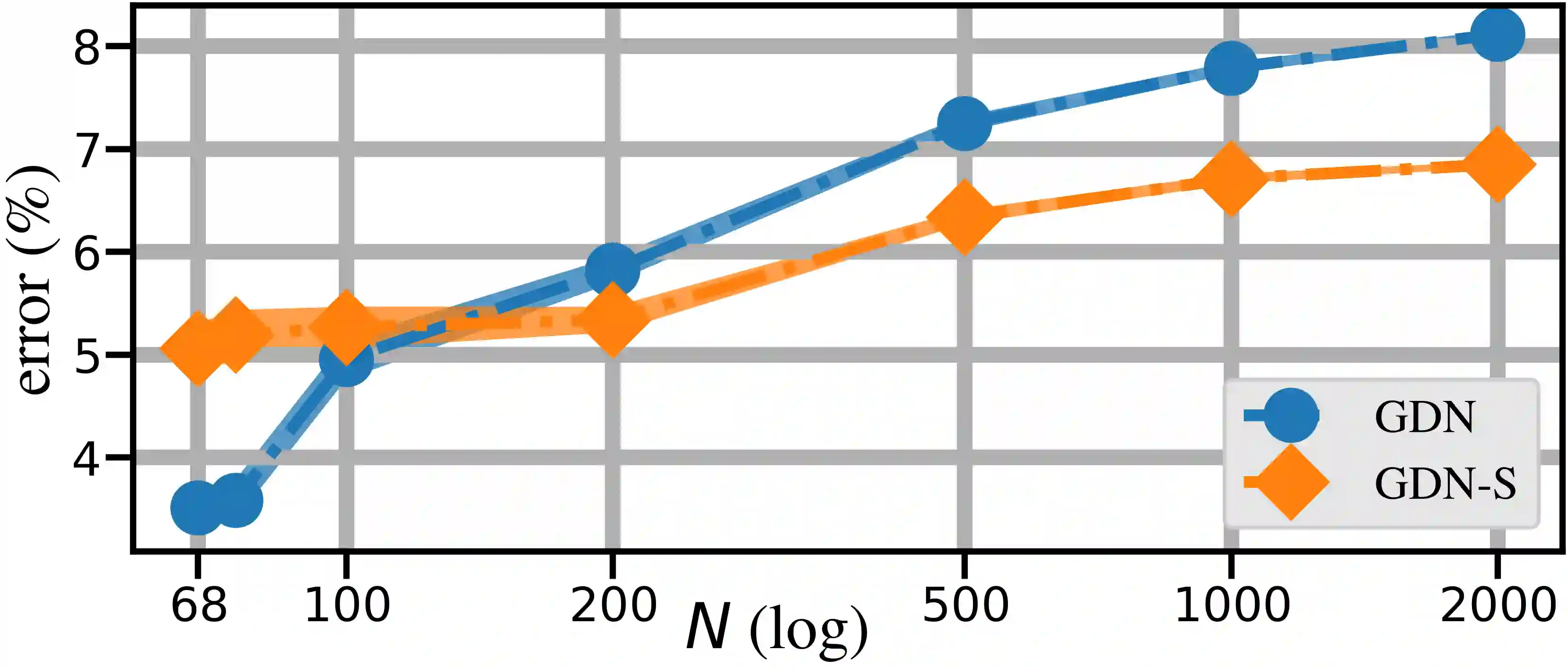

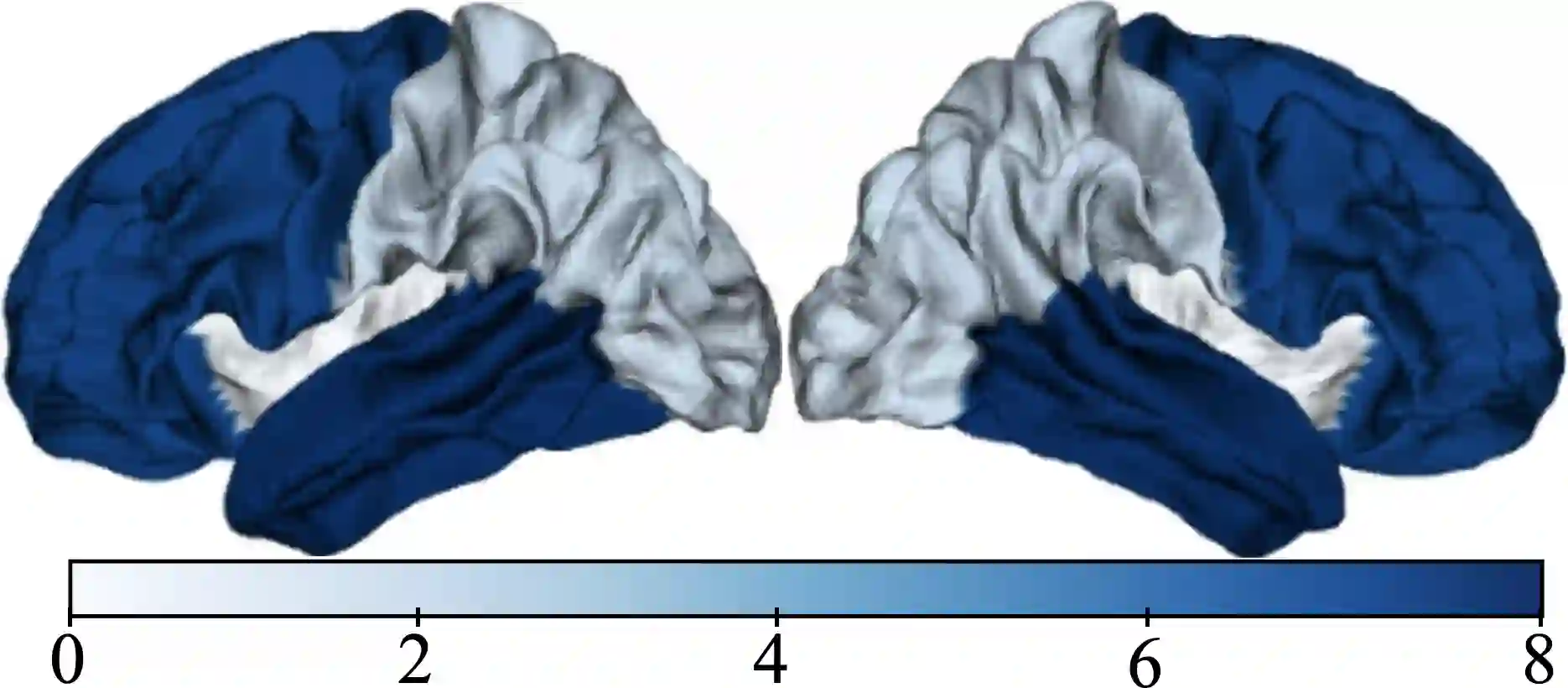

Machine learning frameworks such as graph neural networks typically rely on a given, fixed graph to exploit relational inductive biases and thus effectively learn from network data. However, when said graphs are (partially) unobserved, noisy, or dynamic, the problem of inferring graph structure from data becomes relevant. In this paper, we postulate a graph convolutional relationship between the observed and latent graphs, and formulate the graph learning task as a network inverse (deconvolution) problem. In lieu of eigendecomposition-based spectral methods or iterative optimization solutions, we unroll and truncate proximal gradient iterations to arrive at a parameterized neural network architecture that we call a Graph Deconvolution Network (GDN). GDNs can learn a distribution of graphs in a supervised fashion, can perform link prediction or edge-weight regression tasks by adapting the loss function, and are inherently inductive. We corroborate the GDN's superior graph recovery performance and its generalization to larger graphs using synthetic data in supervised settings. Furthermore, we demonstrate the robustness and representation power of GDNs on real-world neuroimaging and social network datasets.
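To make the idea of unrolling truncated proximal gradient iterations concrete, the following is a minimal NumPy sketch, not the architecture from the paper. It assumes a second-order polynomial convolution model `A_obs = h[0]*I + h[1]*A + h[2]*A@A`, a least-squares data-fit term with an l1 sparsity penalty on nonnegative edge weights, and hand-picked per-layer step sizes and thresholds. In a trained network those per-layer values would be learned from data. All function and parameter names (`graph_filter`, `prox_step`, `unrolled_deconvolution`, `steps`, `threshs`) are hypothetical.

```python
import numpy as np

def graph_filter(A, h):
    """Assumed convolution model: H(A; h) = h[0]*I + h[1]*A + h[2]*A@A."""
    I = np.eye(A.shape[0])
    return h[0] * I + h[1] * A + h[2] * (A @ A)

def data_fit_gradient(A, A_obs, h):
    """Gradient of 0.5 * ||A_obs - H(A; h)||_F^2 with respect to A."""
    R = graph_filter(A, h) - A_obs
    return h[1] * R + h[2] * (R @ A.T + A.T @ R)

def prox_step(A, A_obs, h, step, thresh):
    """One proximal gradient iteration: gradient step, then shifted ReLU."""
    Z = A - step * data_fit_gradient(A, A_obs, h)
    A_next = np.maximum(Z - thresh, 0.0)  # prox of the l1 penalty on nonnegative weights
    np.fill_diagonal(A_next, 0.0)         # assumption: the latent graph has no self-loops
    return A_next

def unrolled_deconvolution(A_obs, h, steps, threshs, A_init=None):
    """Run len(steps) iterations; each (step, thresh) pair plays the role of one layer."""
    A = np.zeros(A_obs.shape) if A_init is None else A_init.copy()
    for step, thresh in zip(steps, threshs):
        A = prox_step(A, A_obs, h, step, thresh)
    return A

# Toy usage: a random symmetric latent graph observed through the assumed filter.
rng = np.random.default_rng(0)
n = 6
upper = np.triu((rng.random((n, n)) < 0.4).astype(float), k=1)
A_true = upper + upper.T
h = (0.0, 1.0, 0.5)  # hypothetical filter coefficients
A_obs = graph_filter(A_true, h)
A_hat = unrolled_deconvolution(A_obs, h, steps=[0.05] * 8, threshs=[0.01] * 8)
```

With only eight hand-tuned layers, `A_hat` should be read as a rough estimate. The sketch is meant to show the layer structure (gradient step followed by a thresholded ReLU), and it makes no claim about recovery accuracy.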
