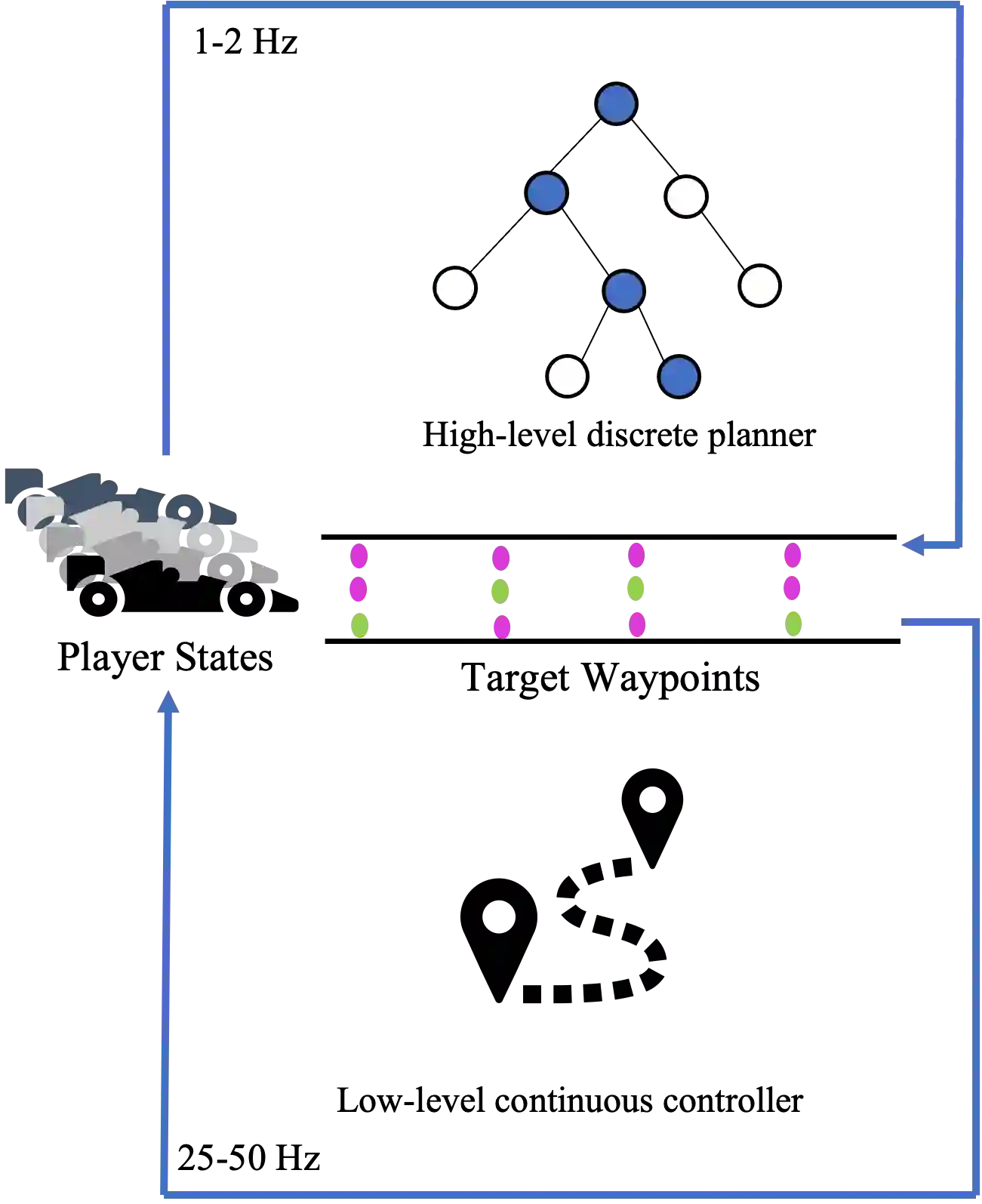

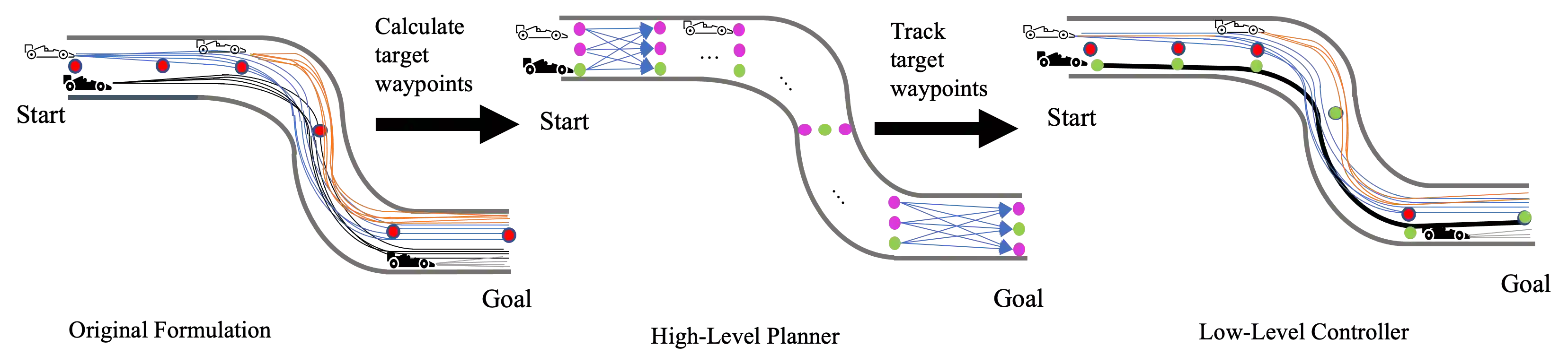

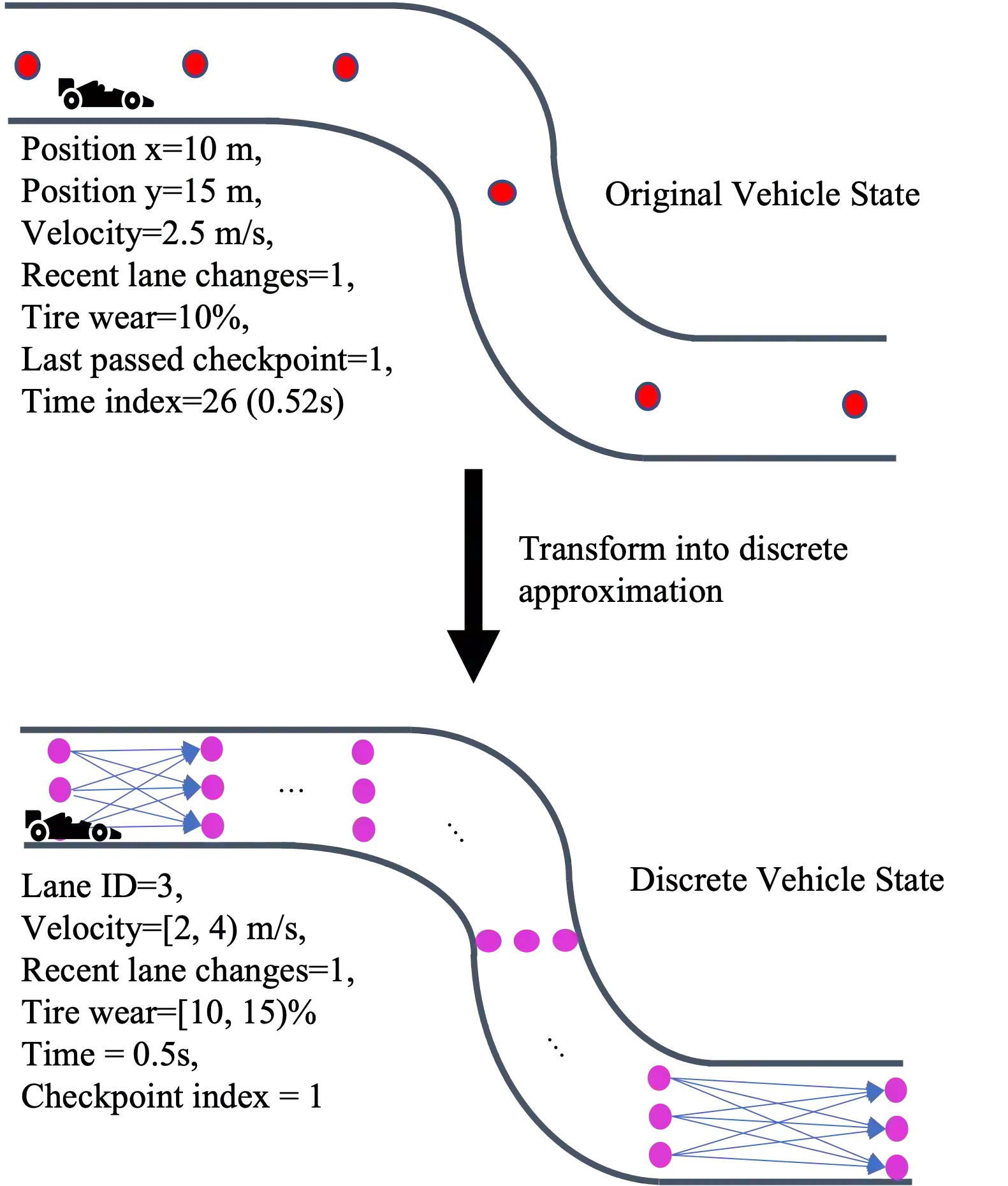

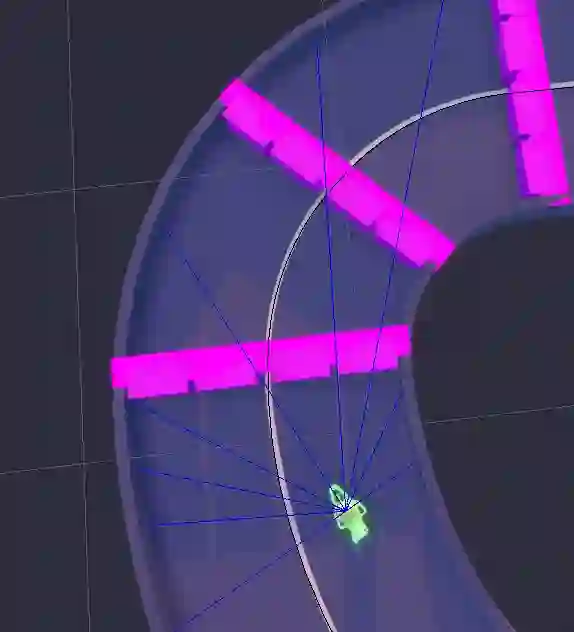

We study the problem of autonomous racing among teams of cooperative agents subject to realistic safety and fairness rules. To solve it, we develop a two-level hierarchical controller, extending prior work that applied bi-level hierarchical control to head-to-head autonomous racing. The high-level planner constructs a discrete game that encodes the complex rules under simplified dynamics and produces a sequence of target waypoints. The low-level controller uses these waypoints as a reference trajectory and computes high-resolution control inputs by solving a simplified racing game with a reduced set of rules. We consider two approaches for the low-level planner: training a multi-agent reinforcement learning (MARL) policy and solving a linear-quadratic Nash game (LQNG) approximation. We test our controllers on a simple oval track and a complex track against three baselines: an end-to-end MARL controller, a MARL controller tracking a fixed racing line, and an LQNG controller tracking a fixed racing line. Quantitative results show that our hierarchical methods outperform their respective baselines in race wins, overall team performance, and adherence to the rules. Qualitatively, we observe the hierarchical controllers mimicking actions performed by expert human drivers, such as coordinated overtaking moves, defending against multiple opponents, and long-term planning for delayed advantages. We show that hierarchical planning for game-theoretic reasoning produces both cooperative and competitive behavior even when challenged with complex rules and constraints.
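
The abstract names solving a linear-quadratic Nash game (LQNG) approximation as one option for the low-level planner but does not give its formulation. As a rough illustration of what solving such a game involves, here is a minimal sketch that computes a finite-horizon feedback Nash equilibrium for a toy N-player game using the standard coupled Riccati recursion. Everything in it is an assumption for illustration, not the authors' method: the scalar state (think of it as a single tracking error relative to a high-level waypoint), the dynamics coefficients `a` and `b`, the quadratic weights `q` and `r`, and the function names `solve_linear`, `lq_nash_gains`, and `rollout` are all hypothetical. A racing formulation would likely use multidimensional vehicle states, cross-player coupling costs, and dynamics re-linearized at every planning step.

```python
from typing import List

# Hypothetical toy scalar-state LQ game; NOT the formulation used in the paper.


def solve_linear(m: List[List[float]], rhs: List[float]) -> List[float]:
    """Solve m @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [rhs[i]] for i, row in enumerate(m)]  # augmented matrix
    for col in range(n):
        piv = max(range(col, n), key=lambda row: abs(aug[row][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for row in range(col + 1, n):
            factor = aug[row][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[row][c] -= factor * aug[col][c]
    x = [0.0] * n
    for row in range(n - 1, -1, -1):  # back substitution
        s = sum(aug[row][c] * x[c] for c in range(row + 1, n))
        x[row] = (aug[row][n] - s) / aug[row][row]
    return x


def lq_nash_gains(a: float, b: List[float], q: List[float],
                  r: List[float], horizon: int) -> List[List[float]]:
    """Coupled Riccati recursion for a scalar-state N-player LQ game.

    Dynamics: x[t+1] = a*x[t] + sum_i b[i]*u_i[t]
    Cost_i:   sum_t (q[i]*x[t]**2 + r[i]*u_i[t]**2) + q[i]*x[T]**2
    Returns gains[t][i] such that u_i[t] = -gains[t][i]*x[t] is a
    feedback Nash equilibrium of the finite-horizon game.
    """
    n = len(b)
    p = list(q)  # terminal value-function weights P_i(T) = q_i
    gains = []
    for _ in range(horizon):
        # Stack every player's first-order condition:
        # (r_i + p_i b_i^2) k_i + p_i b_i sum_{j != i} b_j k_j = p_i b_i a
        m = [[(r[i] + p[i] * b[i] ** 2) if i == j else p[i] * b[i] * b[j]
              for j in range(n)] for i in range(n)]
        rhs = [p[i] * b[i] * a for i in range(n)]
        k = solve_linear(m, rhs)
        f = a - sum(b[j] * k[j] for j in range(n))  # closed-loop coefficient
        # Each player's value-function weight one step earlier.
        p = [q[i] + r[i] * k[i] ** 2 + p[i] * f ** 2 for i in range(n)]
        gains.append(k)
    gains.reverse()  # gains[t] now applies at time step t
    return gains


def rollout(a: float, b: List[float], gains: List[List[float]],
            x0: float) -> List[float]:
    """Simulate the closed loop with every player applying its Nash gain."""
    xs = [x0]
    for k in gains:
        u_sum = sum(bi * -ki * xs[-1] for bi, ki in zip(b, k))
        xs.append(a * xs[-1] + u_sum)
    return xs
```

With a single player the recursion reduces to the discrete-time LQR Riccati update, which makes a quick sanity check. With several players, each gain depends on the others through the stacked first-order conditions, which is what distinguishes a Nash equilibrium from running independent LQR controllers.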
