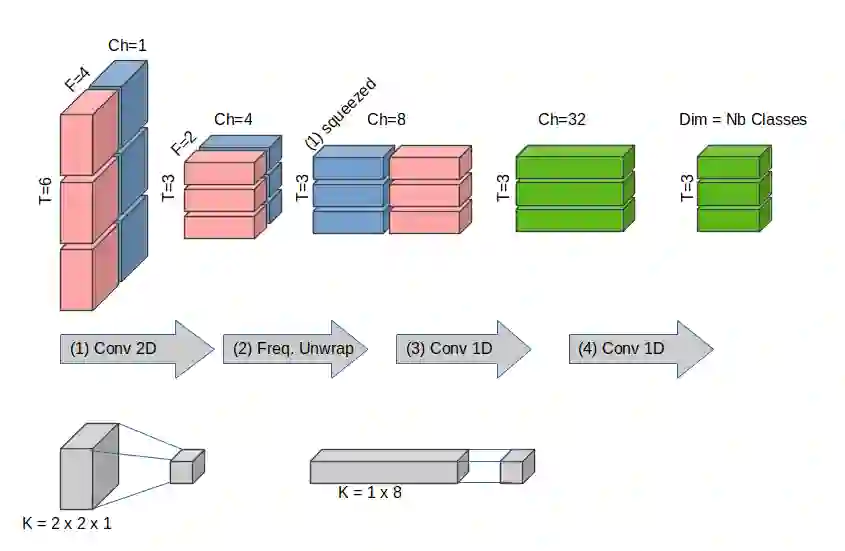

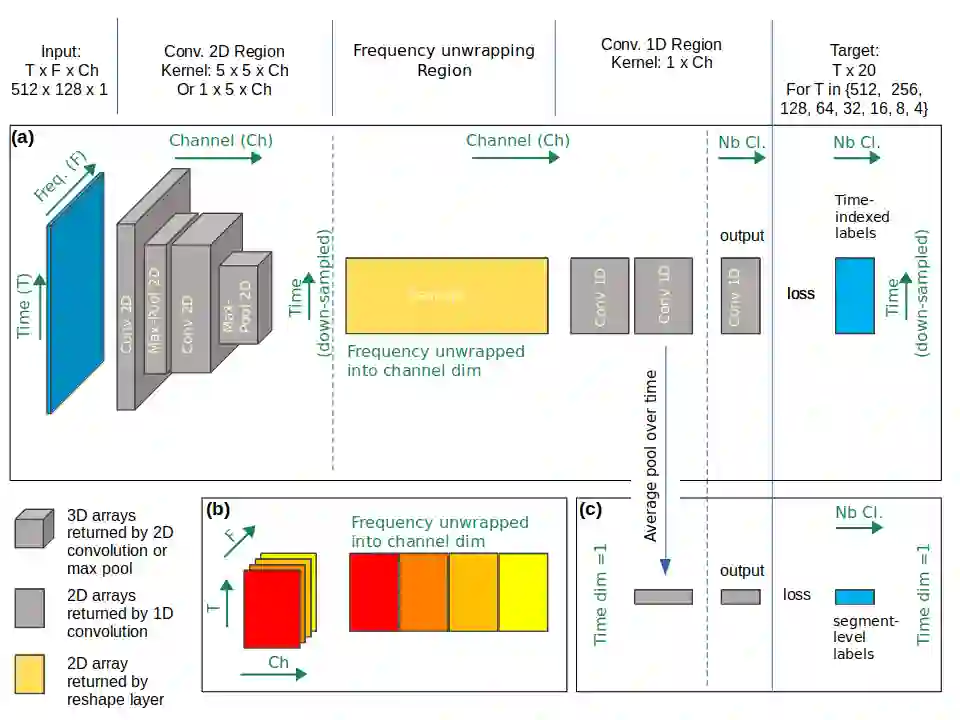

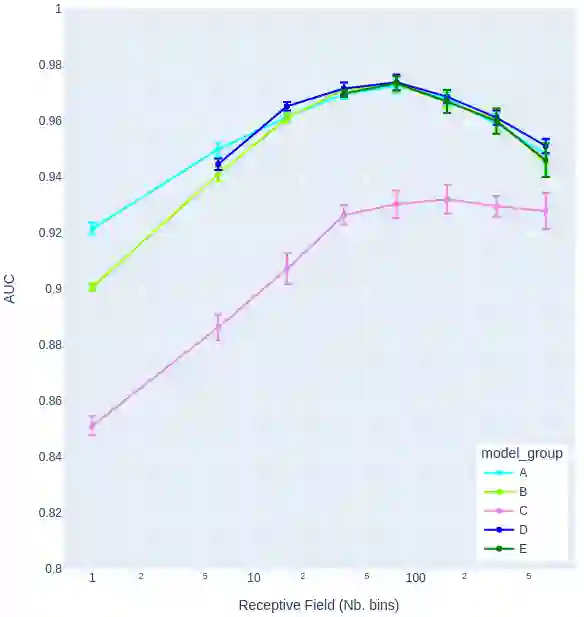

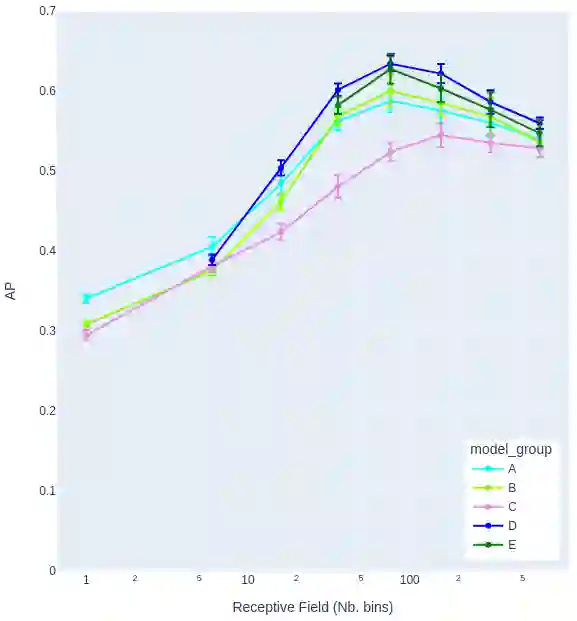

Automated classification of animal sounds is a prerequisite for large-scale monitoring of biodiversity. Convolutional Neural Networks (CNNs) are among the most promising algorithms, but they are slow, often classify poorly in the field, and typically require large training data sets. Our objective was to design CNNs that are fast at inference time and achieve good classification performance while learning from moderate-sized data. Recordings from a rainforest ecosystem were used. The start and end points of sounds from 20 bird species were manually annotated. Spectrograms of 10-second segments were used as CNN input. We designed simple CNNs with a frequency unwrapping layer (SIMP-FU models) such that every output unit was connected to all spectrogram frequencies but only to a sub-region of time, the Receptive Field (RF). Our models allowed experimentation with different RF durations. Models used either time-indexed labels, which encode the start and end points of sounds, or simpler segment-level labels. Models learning from time-indexed labels performed considerably better than their segment-level counterparts. The best classification performance was achieved by models with an intermediate RF duration of 1.5 seconds. The best SIMP-FU models achieved AUCs over 0.95 in 18 of 20 classes on the test set. On compact low-cost hardware, the best SIMP-FU models evaluated up to seven times faster than real-time data acquisition. RF duration was a major driver of classification performance. The optimum of 1.5 s was in the same range as the duration of the sounds. Our models achieved good classification performance while learning from moderate-sized training data. This is explained by the use of time-indexed labels during training and an adequately sized RF. The results confirm the feasibility of deploying small CNNs with good classification performance on compact low-cost devices.
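
To make the two central ideas more concrete, the following minimal Python sketch shows how the temporal receptive field of a stack of convolution and pooling layers can be computed, and how time-indexed labels differ from a segment-level label. It is an illustration under assumptions, not the authors' implementation: the function names, the `(kernel, stride)` layer description, and the example values are all hypothetical and are not taken from the paper.

```python
from typing import List, Tuple


def receptive_field_frames(layers: List[Tuple[int, int]]) -> int:
    """Temporal receptive field, in input spectrogram frames, of one output unit.

    `layers` is a hypothetical list of (kernel_size, stride) pairs along the
    time axis, ordered from input to output. This is the standard recurrence
    for stacked convolution/pooling layers without dilation.
    """
    rf = 1    # a single input frame sees only itself
    jump = 1  # spacing, in input frames, between adjacent units of the current layer
    for kernel, stride in layers:
        rf += (kernel - 1) * jump
        jump *= stride
    return rf


def time_indexed_labels(
    annotations: List[Tuple[float, float]], n_steps: int, segment_s: float
) -> List[int]:
    """One binary label per output time step of a segment.

    A step is labelled 1 if any annotated (start_s, end_s) interval overlaps it.
    """
    step_s = segment_s / n_steps
    labels = [0] * n_steps
    for start_s, end_s in annotations:
        for i in range(n_steps):
            # Overlap test between [start_s, end_s) and [i*step_s, (i+1)*step_s)
            if start_s < (i + 1) * step_s and end_s > i * step_s:
                labels[i] = 1
    return labels


def segment_level_label(annotations: List[Tuple[float, float]]) -> int:
    """A single binary label for the whole segment: 1 if any sound is annotated."""
    return 1 if annotations else 0


if __name__ == "__main__":
    # Hypothetical time-axis layout: conv(3), pool(2), conv(3), pool(2), conv(3)
    layers = [(3, 1), (2, 2), (3, 1), (2, 2), (3, 1)]
    print("RF in frames:", receptive_field_frames(layers))

    # One sound annotated from 2.0 s to 3.5 s in a 10-second segment
    sounds = [(2.0, 3.5)]
    print("time-indexed:", time_indexed_labels(sounds, n_steps=10, segment_s=10.0))
    print("segment-level:", segment_level_label(sounds))
```

Multiplying the receptive field in frames by the spectrogram hop duration gives the RF duration in seconds, which is the quantity the abstract reports as optimal near 1.5 s. The label functions show why time-indexed labels carry more information: they tell the model where in the segment the sound occurs, whereas the segment-level label only says that it occurs somewhere.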
