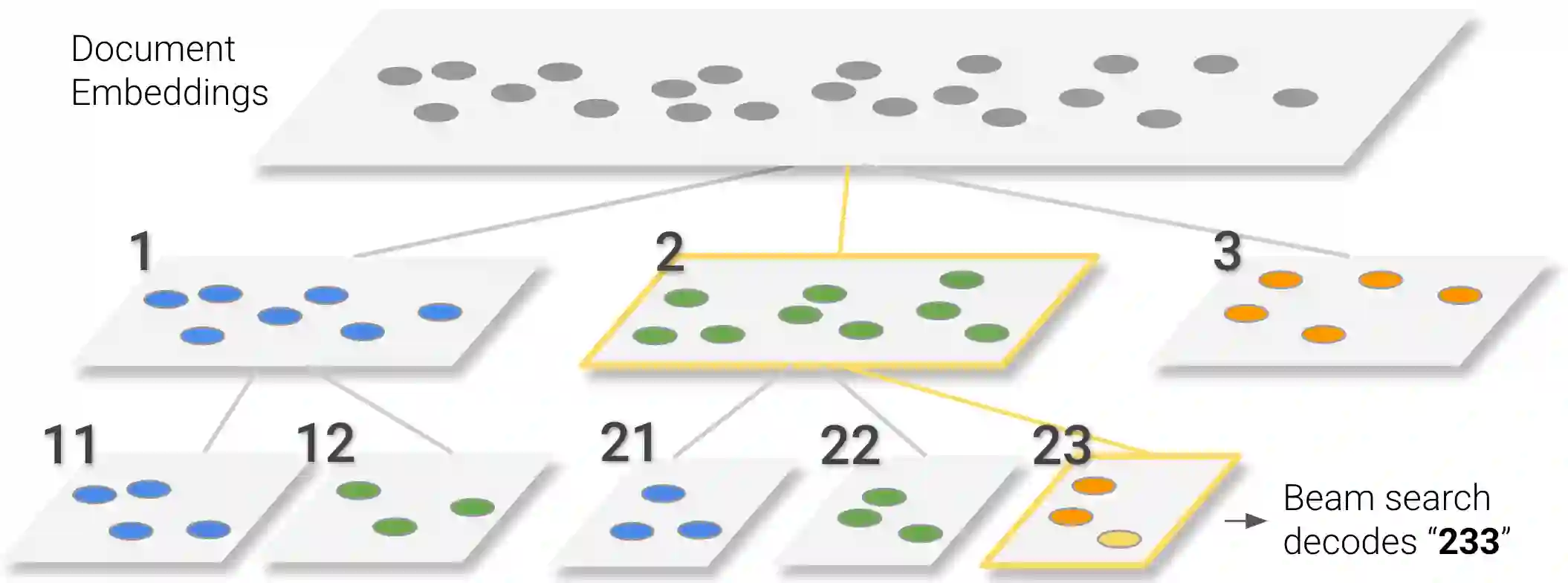

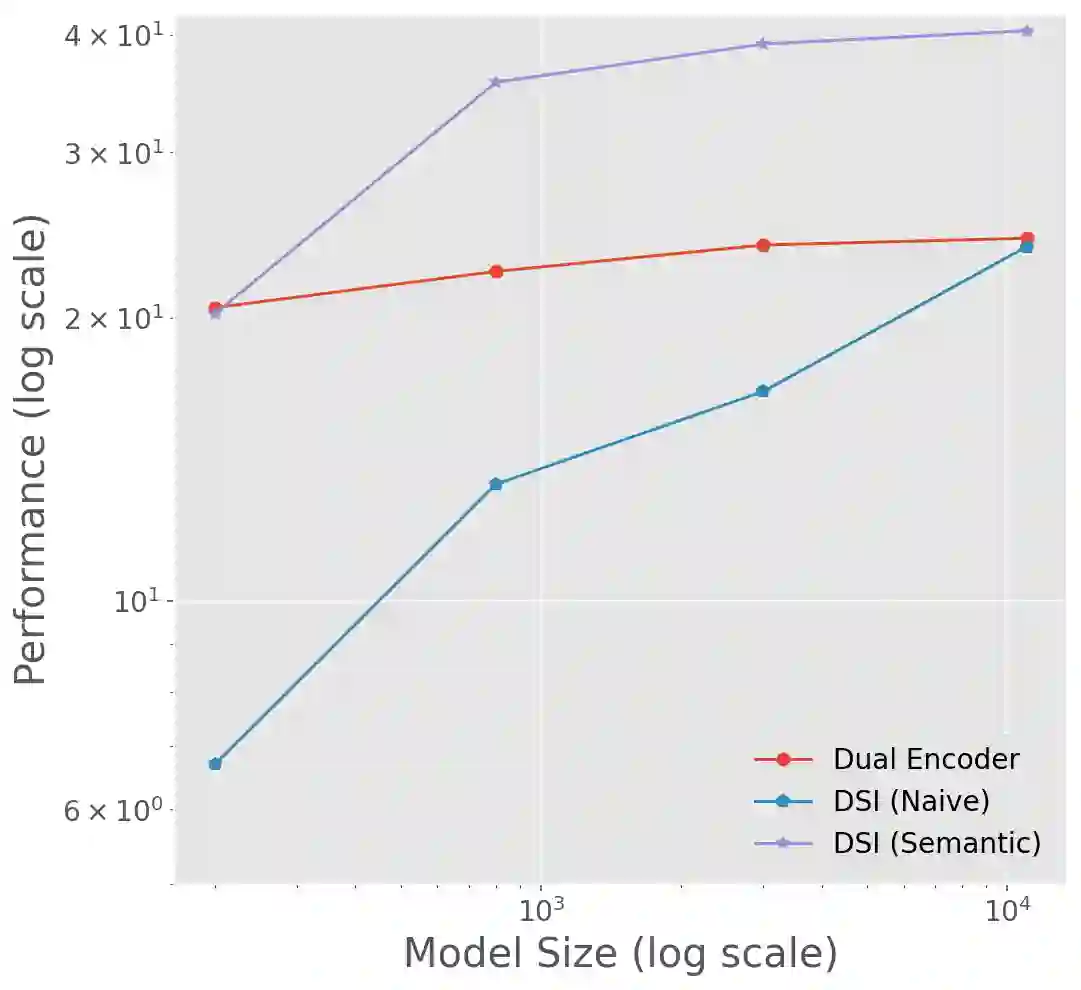

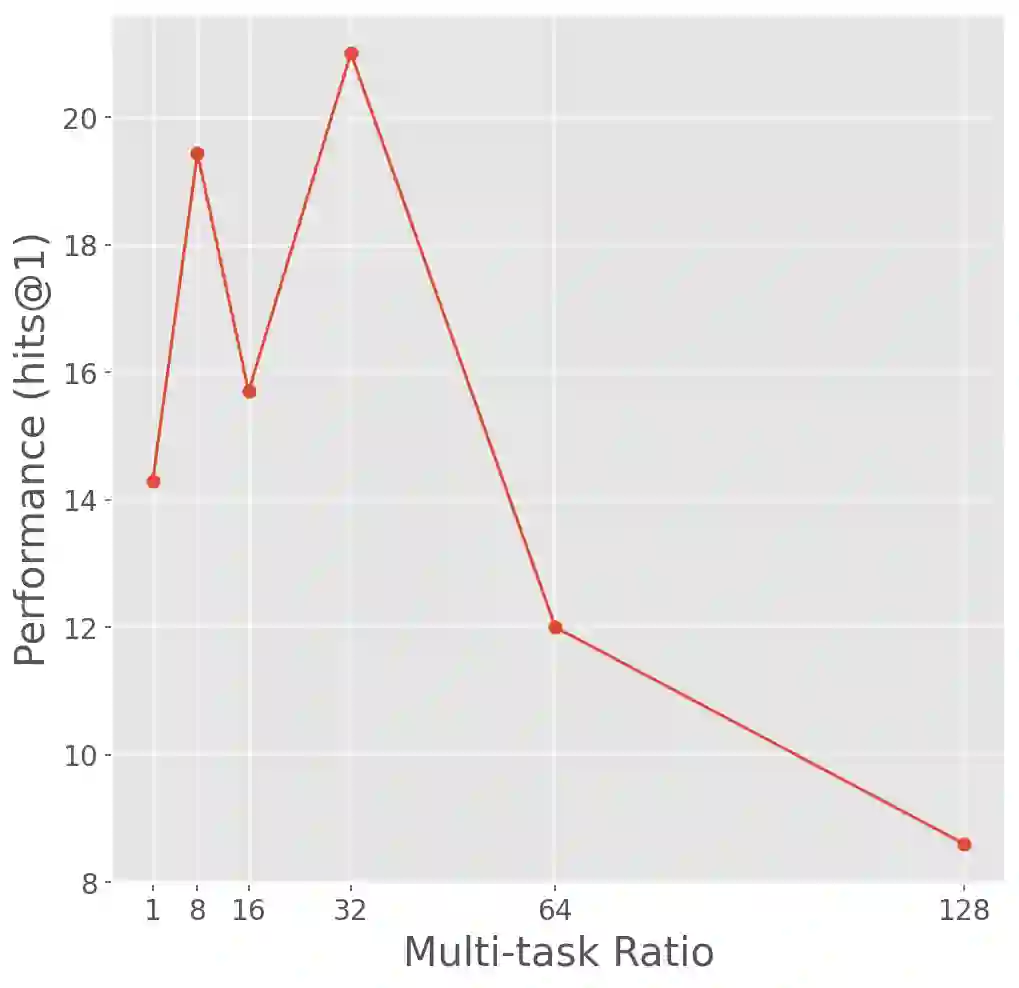

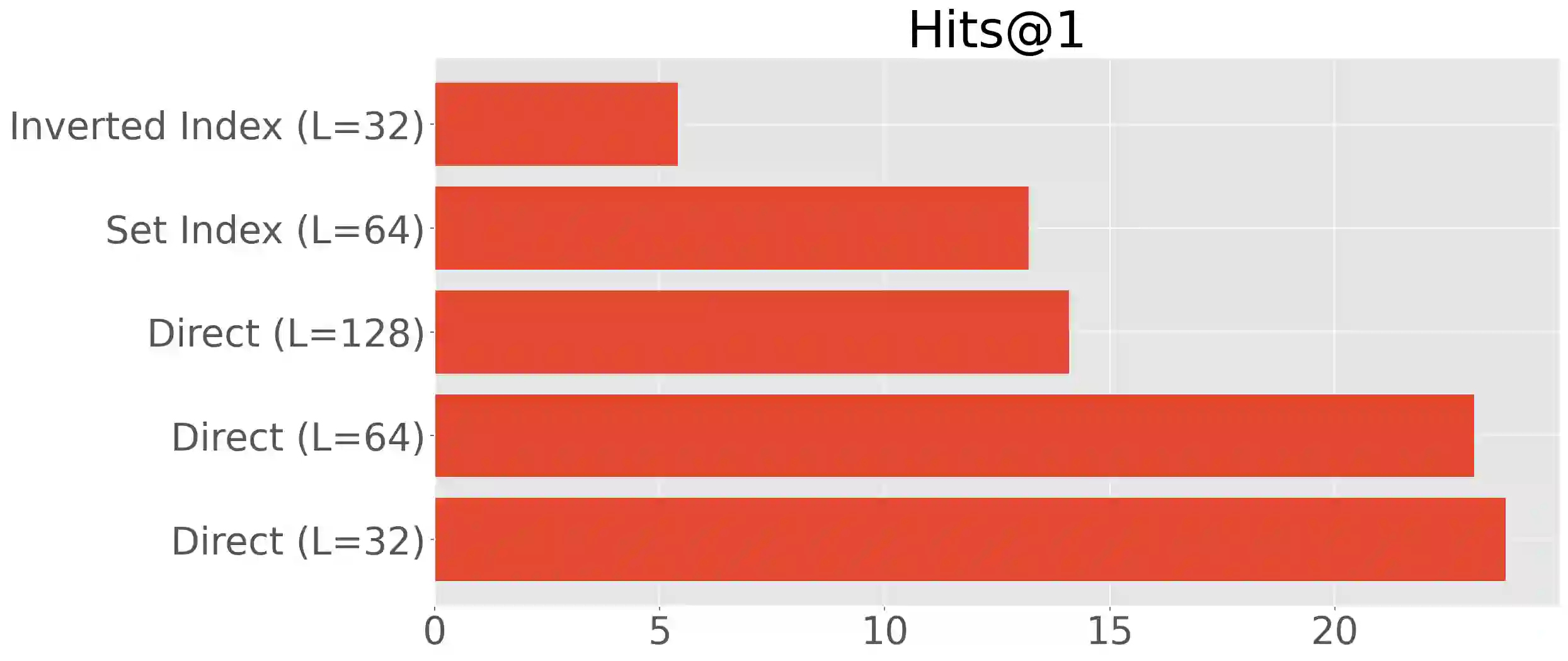

In this paper, we demonstrate that information retrieval can be accomplished with a single Transformer, in which all information about the corpus is encoded in the parameters of the model. To this end, we introduce the Differentiable Search Index (DSI), a new paradigm that learns a text-to-text model that maps string queries directly to the identifiers (docids) of relevant documents; in other words, a DSI model answers queries using only its parameters, dramatically simplifying the whole retrieval process. We study variations in how documents and their identifiers are represented, variations in training procedures, and the interplay between model size and corpus size. Experiments demonstrate that, given appropriate design choices, DSI significantly outperforms strong baselines such as dual encoder models. Moreover, DSI demonstrates strong generalization capabilities, outperforming a BM25 baseline in a zero-shot setup.
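Because a DSI model is just a text-to-text model whose target strings are docids, its training data can be pictured as plain (input, target) pairs. The sketch below is a minimal illustration under stated assumptions, not the authors' code: it assumes a toy corpus keyed by string docids and builds two hypothetical kinds of pairs, "indexing" pairs (document text to its docid, so the corpus is memorized in the parameters) and "retrieval" pairs (query to the docid of a relevant document). The names `Seq2SeqExample` and `build_dsi_examples`, and the choice to truncate each document to its first few whitespace tokens, are illustrative assumptions; the Transformer itself (for example a T5-style encoder-decoder) is not shown.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Seq2SeqExample:
    source: str  # text fed to the encoder
    target: str  # docid string the decoder must generate


def build_dsi_examples(
    corpus: dict[str, str],
    queries: list[tuple[str, str]],
    max_doc_tokens: int = 32,
) -> list[Seq2SeqExample]:
    """Hypothetical helper: turn a corpus and labelled queries into seq2seq pairs."""
    examples: list[Seq2SeqExample] = []
    # Indexing pairs (assumption): document text -> docid, truncated to the
    # first `max_doc_tokens` whitespace-separated tokens.
    for docid, text in corpus.items():
        truncated = " ".join(text.split()[:max_doc_tokens])
        examples.append(Seq2SeqExample(source=truncated, target=docid))
    # Retrieval pairs: query string -> docid of a relevant document.
    for query, docid in queries:
        if docid not in corpus:
            raise KeyError(f"query labelled with unknown docid {docid!r}")
        examples.append(Seq2SeqExample(source=query, target=docid))
    return examples


# Toy usage: two documents with arbitrary string docids and one labelled query.
corpus = {
    "17": "The Transformer is a sequence transduction model based on attention.",
    "42": "BM25 is a bag-of-words ranking function.",
}
queries = [("what is bm25", "42")]
examples = build_dsi_examples(corpus, queries, max_doc_tokens=4)
for ex in examples:
    print(f"{ex.source!r} -> {ex.target!r}")
```

At query time, under this picture, the trained model would simply decode a docid string from the query text, with no separate index to consult; how docids are represented (arbitrary strings here) is exactly one of the design choices the paper varies.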

翻译:在本文中,我们证明信息检索可以用一个单一的变换器完成,该变换器将所有关于该物质的信息都编码在模型参数中。为此,我们引入了差异搜索索引(DSI),这是一个新模式,学习了文本到文本的模式,将查询直接用文字串串到相关的 docid ;换句话说,DSI模式只直接使用其参数回答问题,大大简化了整个检索过程。我们研究了文件及其识别特征的表达方式、培训程序的变化以及模型和体积大小之间的相互作用。实验表明,如果有适当的设计选择,DSI大大超越了双编码模型等强大的基线。此外,DSI展示了很强的概括能力,在零点设置中完成了BM25基线。