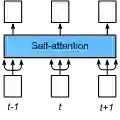

Transformers are quickly becoming one of the most heavily applied deep learning architectures across modalities, domains, and tasks. In vision, alongside ongoing work on plain transformers, hierarchical transformers have also gained significant attention, thanks to their performance and easy integration into existing frameworks. These models typically employ localized attention mechanisms, such as the sliding-window Neighborhood Attention (NA) or Swin Transformer's Shifted Window Self-Attention. While effective at reducing self-attention's quadratic complexity, local attention weakens two of the most desirable properties of self-attention: long-range inter-dependency modeling and a global receptive field. In this paper, we introduce Dilated Neighborhood Attention (DiNA), a natural, flexible, and efficient extension to NA that can capture more global context and expand receptive fields exponentially at no additional cost. NA's local attention and DiNA's sparse global attention complement each other, and therefore we introduce Dilated Neighborhood Attention Transformer (DiNAT), a new hierarchical vision transformer built upon both. DiNAT variants show significant improvements over attention-based baselines such as NAT and Swin, as well as over the modern convolutional baseline ConvNeXt. Our Large model is ahead of its Swin counterpart by 1.5% box AP in COCO object detection, 1.3% mask AP in COCO instance segmentation, and 1.1% mIoU in ADE20K semantic segmentation, while also achieving higher throughput. We believe combinations of NA and DiNA have the potential to empower various tasks beyond those presented in this paper. To support and encourage research in this direction, in vision and beyond, we open-source our project at: https://github.com/SHI-Labs/Neighborhood-Attention-Transformer.
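To make the relationship between NA and DiNA concrete, the following is a minimal single-head, 1D NumPy sketch. It is not the implementation from the project repository. The function names `dilated_neighbors` and `dilated_na_1d` are hypothetical, and the border rule (clamping the window inside each dilation group so every token attends to exactly `kernel_size` keys) is an assumption made for illustration. Relative positional biases, multiple heads, and 2D inputs are left out.

```python
import numpy as np


def dilated_neighbors(i, length, kernel_size, dilation=1):
    """Indices token i attends to in a 1D sequence (assumed, illustrative rule)."""
    # Tokens that share i % dilation form one strided sub-sequence ("group").
    offset = i % dilation
    group_len = (length - offset + dilation - 1) // dilation
    if group_len < kernel_size:
        raise ValueError("sequence too short for this kernel_size and dilation")
    pos = i // dilation  # position of token i inside its group
    # Centre the window on pos, then clamp it so it stays inside the group.
    start = min(max(pos - kernel_size // 2, 0), group_len - kernel_size)
    return offset + dilation * np.arange(start, start + kernel_size)


def dilated_na_1d(q, k, v, kernel_size, dilation=1):
    """Single-head attention restricted to each token's dilated neighborhood."""
    length, dim = q.shape
    out = np.empty_like(v)
    for i in range(length):
        idx = dilated_neighbors(i, length, kernel_size, dilation)
        scores = q[i] @ k[idx].T / np.sqrt(dim)   # one score per attended key
        weights = np.exp(scores - scores.max())   # numerically stable softmax
        weights /= weights.sum()
        out[i] = weights @ v[idx]
    return out


# With dilation=1 this reduces to plain sliding-window attention (NA-like);
# a larger dilation spreads the same number of keys over a wider span.
print(dilated_neighbors(8, 16, kernel_size=3, dilation=1))
print(dilated_neighbors(8, 16, kernel_size=3, dilation=4))
```

In this sketch each token always attends to `kernel_size` keys, so the per-token cost does not depend on `dilation`, while the span covered grows to `(kernel_size - 1) * dilation + 1` positions. Alternating small and large dilation values across layers is one way to read the abstract's pairing of local attention with sparse global attention.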
