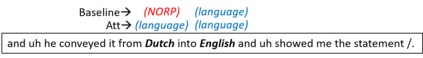

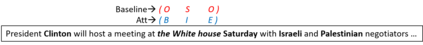

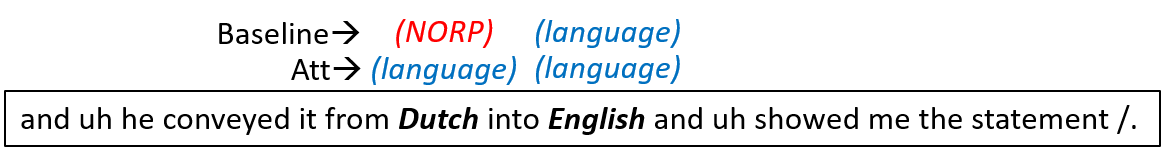

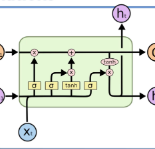

Recent work has predominantly used BiLSTM-CNN as the core module for NER in a sequence-labeling setup. This paper formally shows the limitation of BiLSTM-CNN encoders in modeling cross-context patterns for each word, i.e., patterns that span both the past and the future of a specific time step. Two types of cross-structures are used to remedy the problem: a BiLSTM variant with cross-links between layers, and a multi-head self-attention mechanism. These cross-structures bring consistent improvements across a wide range of NER domains for a core system using BiLSTM-CNN, without additional gazetteers, POS taggers, language modeling, or multi-task supervision. The model surpasses comparable previous models on OntoNotes 5.0 and WNUT 2017 by 1.4% and 4.6%, respectively, with the largest gains on emerging, complex, confusing, and multi-token entity mentions, showing the importance of remedying the core module of NER.
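As a rough illustration of the second cross-structure, the sketch below applies multi-head scaled dot-product self-attention to a sequence of per-token vectors, so that each position mixes information from both earlier and later positions. It is a minimal sketch under stated assumptions, not the paper's implementation: the function names `softmax` and `multi_head_self_attention` are hypothetical, the input `hidden` merely stands in for BiLSTM output states, and learned query/key/value projections are omitted for brevity.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def multi_head_self_attention(hidden, num_heads):
    """Scaled dot-product self-attention per head, without learned projections.

    hidden: list of T vectors (one per token), each of length d. Assumed here
            to stand in for concatenated forward/backward BiLSTM states.
    Returns a list of T vectors of length d; every output position is a
    weighted mix of all positions, past and future alike.
    """
    d = len(hidden[0])
    assert d % num_heads == 0, "d must be divisible by num_heads"
    head_dim = d // num_heads
    output = [[] for _ in hidden]
    for h in range(num_heads):
        lo, hi = h * head_dim, (h + 1) * head_dim
        # Each head sees its own slice of every token's feature vector.
        slices = [vec[lo:hi] for vec in hidden]
        for t, query in enumerate(slices):
            # Similarity of the current token to every token in the sentence.
            scores = [
                sum(q * k for q, k in zip(query, key)) / math.sqrt(head_dim)
                for key in slices
            ]
            weights = softmax(scores)
            # Weighted average of all tokens' slices for this head.
            context = [
                sum(w * value[i] for w, value in zip(weights, slices))
                for i in range(head_dim)
            ]
            output[t].extend(context)
    return output
```

Because the attention weights for a token are computed over the whole sentence at once, a single output vector can depend jointly on left and right context, which is the kind of cross-context interaction the abstract refers to.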
