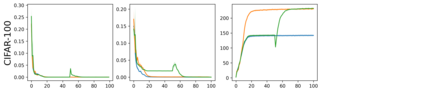

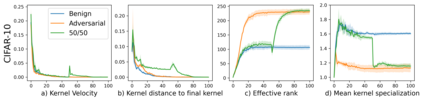

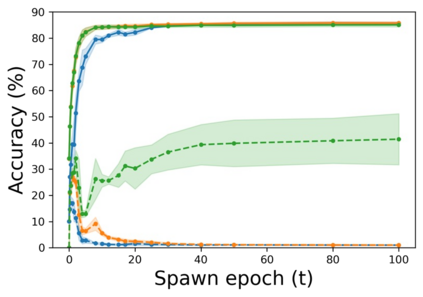

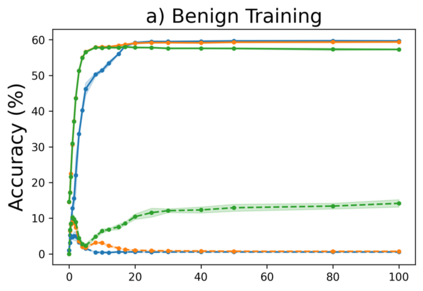

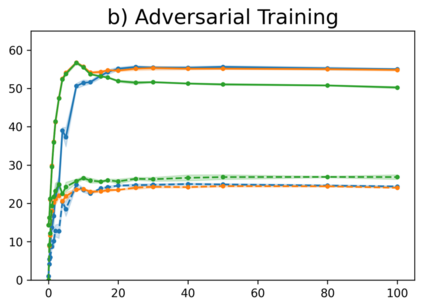

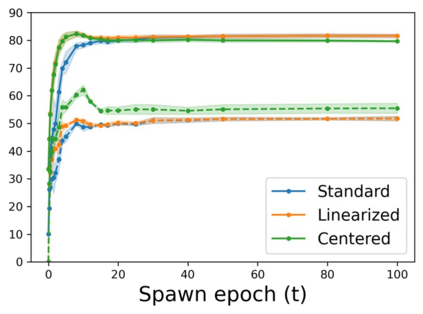

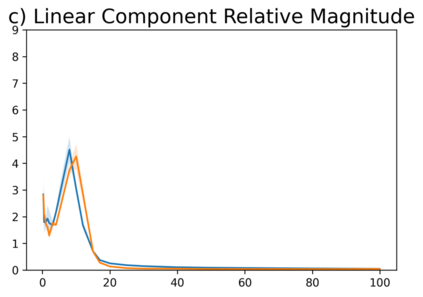

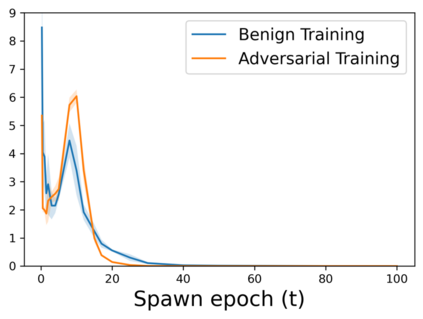

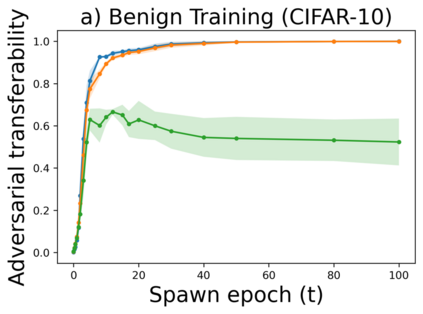

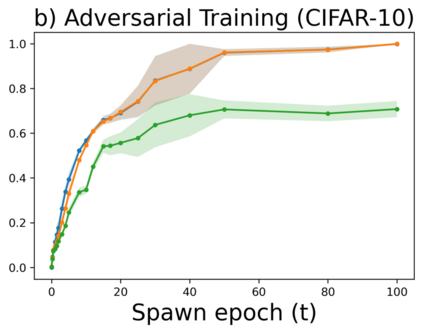

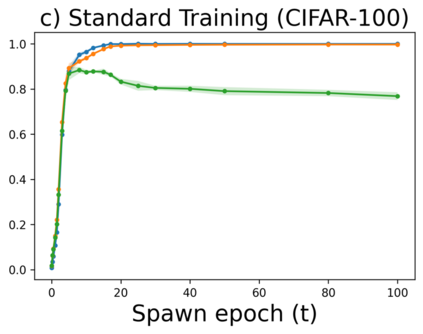

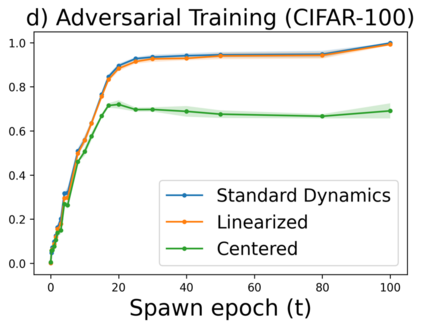

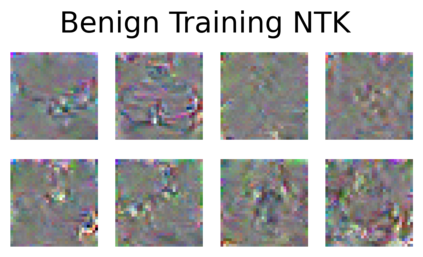

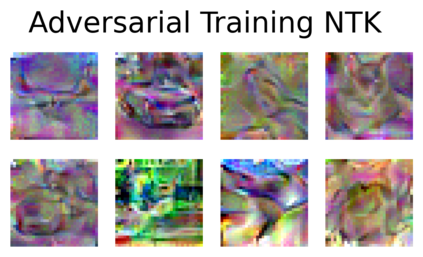

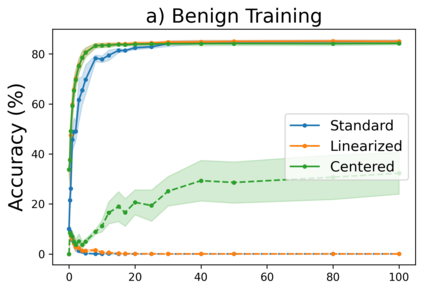

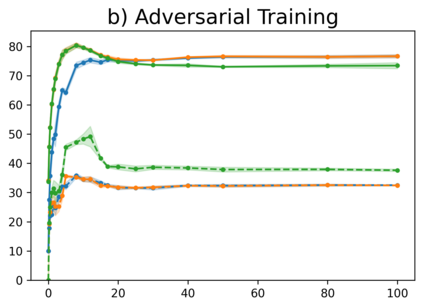

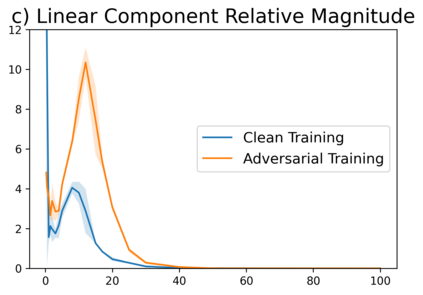

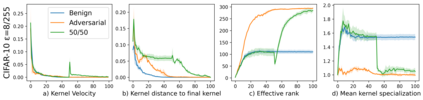

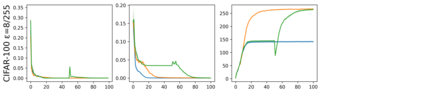

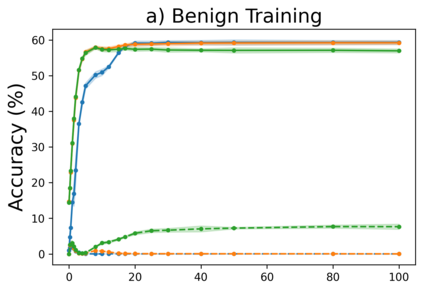

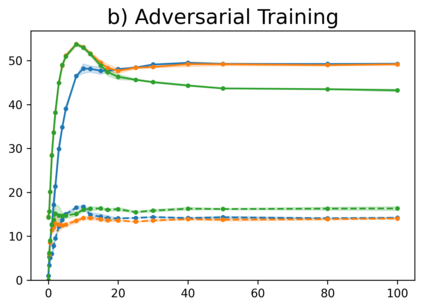

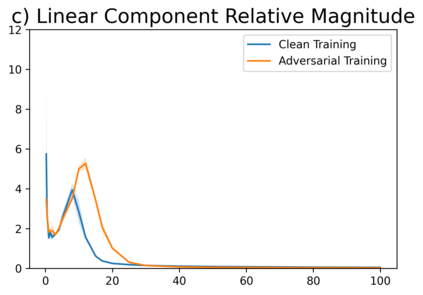

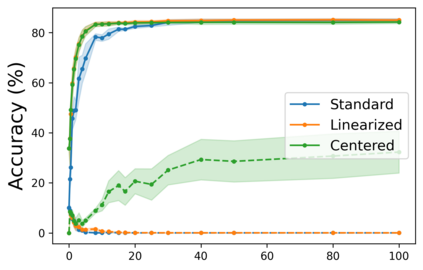

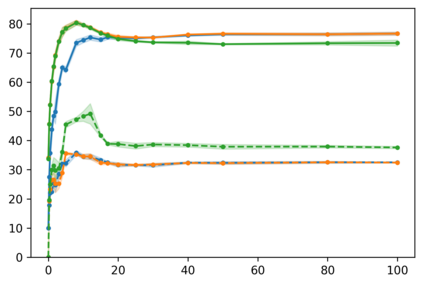

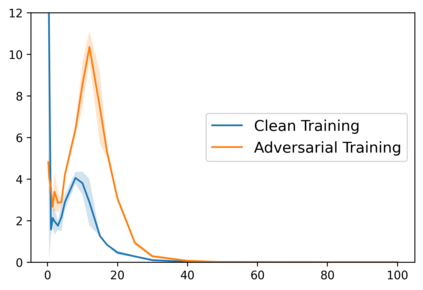

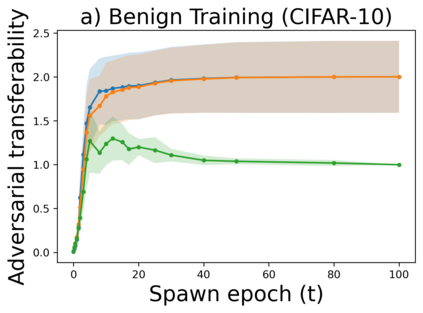

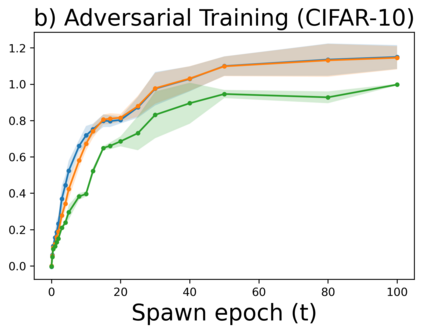

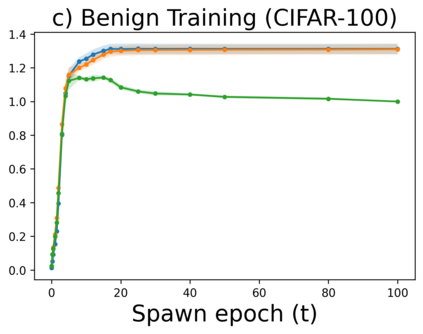

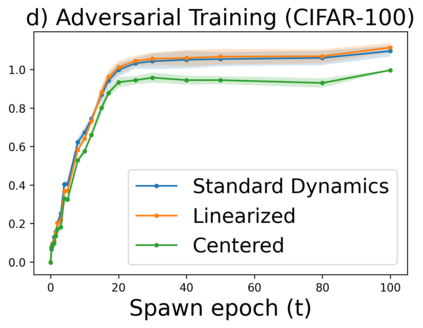

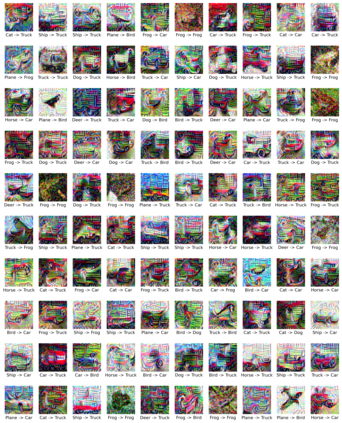

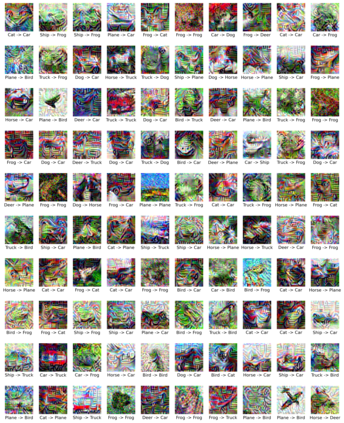

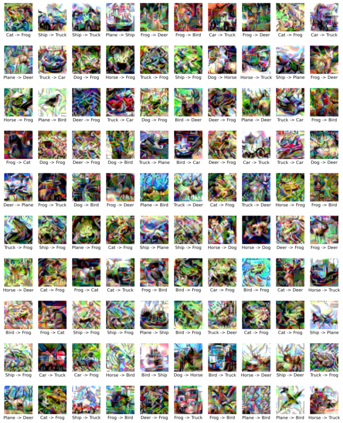

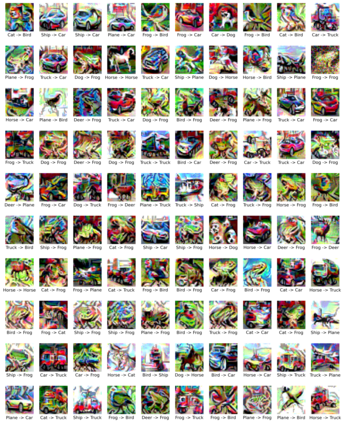

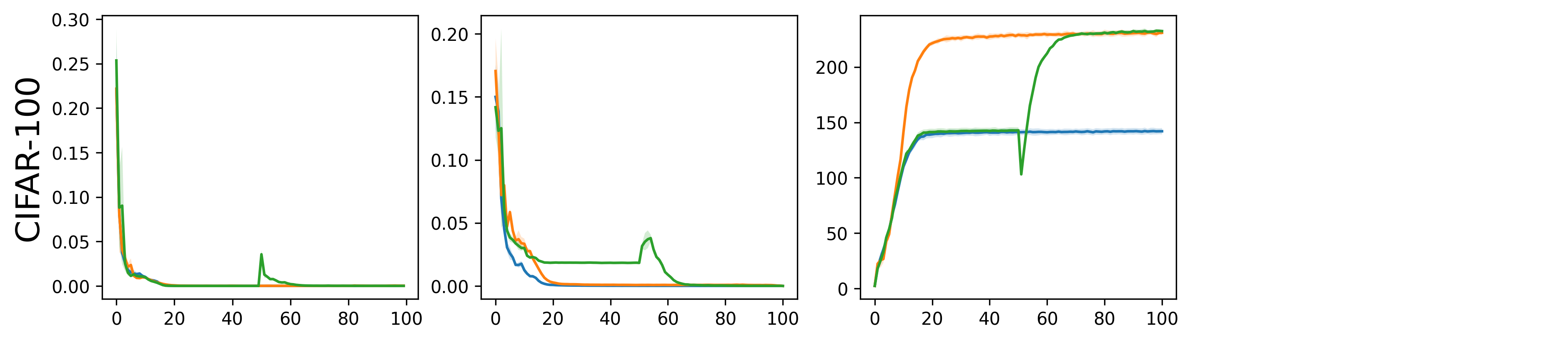

Two key challenges facing modern deep learning are mitigating deep networks' vulnerability to adversarial attacks and understanding deep learning's generalization capabilities. To address the first, many defense strategies have been developed, the most common being Adversarial Training (AT). For the second, one of the dominant theories to emerge is the Neural Tangent Kernel (NTK), a characterization of neural network behavior in the infinite-width limit. In this limit, the kernel is frozen and the underlying feature map is fixed. At finite widths, however, there is evidence that feature learning happens in the early stages of training (kernel learning), followed by a second phase in which the kernel remains fixed (lazy training). While prior work has studied adversarial vulnerability through the lens of the frozen infinite-width NTK, there is no work that studies the adversarial robustness of the empirical (finite-width) NTK during training. In this work, we perform an empirical study of the evolution of the empirical NTK under standard and adversarial training, aiming to disambiguate the effect of adversarial training on kernel learning and on lazy training. We find that, under adversarial training, the empirical NTK rapidly converges to a different kernel (and feature map) than under standard training. This new kernel provides adversarial robustness even when non-robust training is performed on top of it. Furthermore, we find that adversarial training on top of a fixed kernel can yield a classifier with $76.1\%$ robust accuracy under PGD attacks with $\varepsilon = 4/255$ on CIFAR-10.
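To make the notion of an empirical NTK concrete, the following is a minimal sketch and not the setup used in the paper. It assumes a hypothetical two-layer tanh network with scalar output, $f(x) = \frac{1}{\sqrt{m}} \sum_j a_j \tanh(w_j \cdot x)$, and computes the kernel entries $\Theta(x, x') = \nabla_\theta f(x) \cdot \nabla_\theta f(x')$ with hand-written gradients. The helper names (`init_params`, `param_gradient`, `empirical_ntk`, `kernel_distance`) are illustrative assumptions. The relative Frobenius distance is one possible way to quantify how far the kernel has moved between two training checkpoints.

```python
import math
import random


def init_params(d_in, width, seed=0):
    """Random Gaussian parameters (W, a) for the toy two-layer network."""
    rng = random.Random(seed)
    W = [[rng.gauss(0.0, 1.0) for _ in range(d_in)] for _ in range(width)]
    a = [rng.gauss(0.0, 1.0) for _ in range(width)]
    return W, a


def forward(params, x):
    """f(x) = (1 / sqrt(m)) * sum_j a_j * tanh(w_j . x)."""
    W, a = params
    total = 0.0
    for w_j, a_j in zip(W, a):
        total += a_j * math.tanh(sum(w * xi for w, xi in zip(w_j, x)))
    return total / math.sqrt(len(a))


def param_gradient(params, x):
    """Flattened gradient of f(x) w.r.t. all parameters (W entries, then a)."""
    W, a = params
    scale = 1.0 / math.sqrt(len(a))
    grad_W, grad_a = [], []
    for w_j, a_j in zip(W, a):
        h = math.tanh(sum(w * xi for w, xi in zip(w_j, x)))
        grad_a.append(scale * h)  # df/da_j
        # df/dw_j = a_j * (1 - tanh^2) * x / sqrt(m)
        grad_W.extend(scale * a_j * (1.0 - h * h) * xi for xi in x)
    return grad_W + grad_a


def empirical_ntk(params, xs):
    """Gram matrix of parameter gradients: K[i][j] = grad f(x_i) . grad f(x_j)."""
    grads = [param_gradient(params, x) for x in xs]
    return [[sum(u * v for u, v in zip(gi, gj)) for gj in grads] for gi in grads]


def kernel_distance(K1, K2):
    """Relative Frobenius distance ||K1 - K2||_F / ||K1||_F."""
    num = sum((p - q) ** 2 for r1, r2 in zip(K1, K2) for p, q in zip(r1, r2))
    den = sum(p * p for row in K1 for p in row)
    return math.sqrt(num / den)
```

In this sketch, evaluating `empirical_ntk` on a fixed batch at successive checkpoints and comparing the results with `kernel_distance` gives a rough picture of the two regimes described above: the distance changes while the kernel is still being learned and stays near zero once training is lazy.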