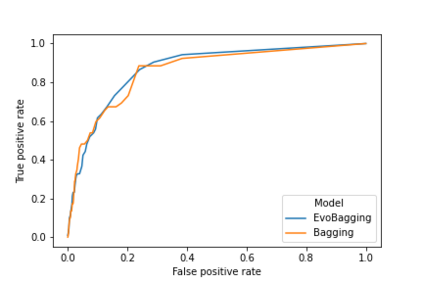

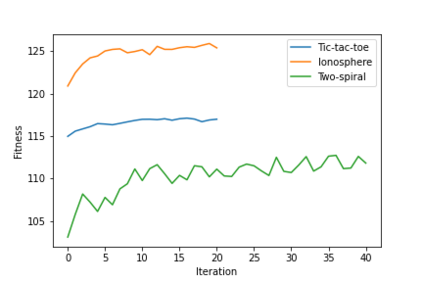

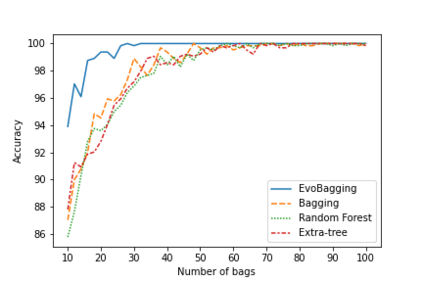

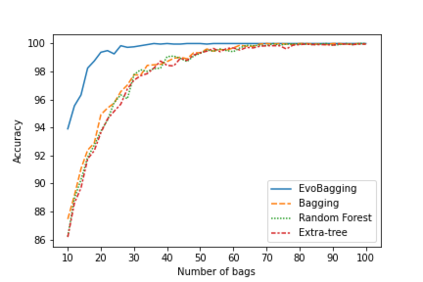

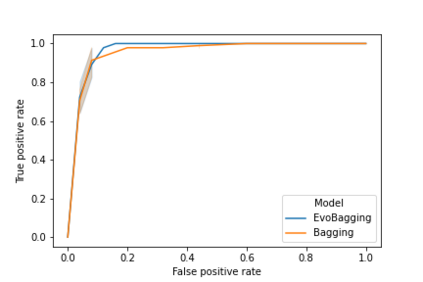

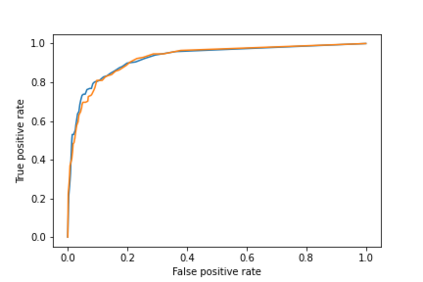

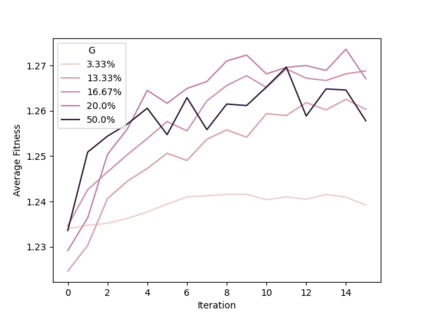

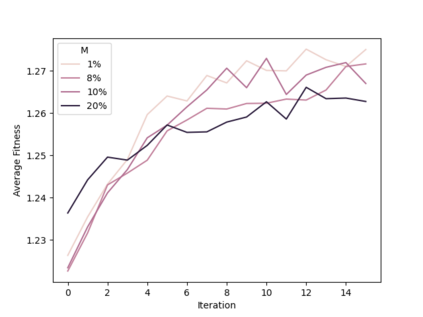

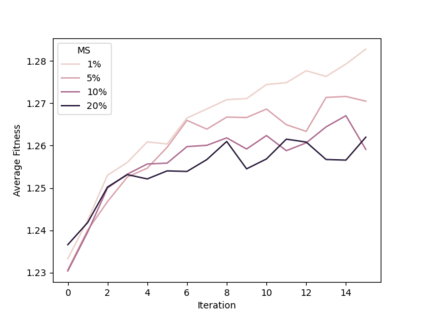

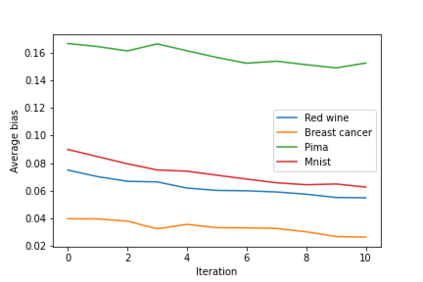

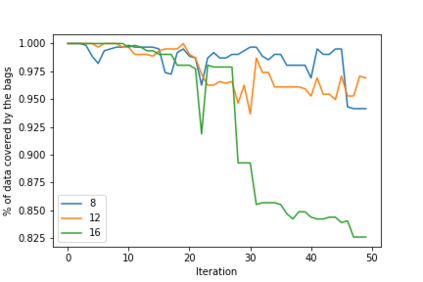

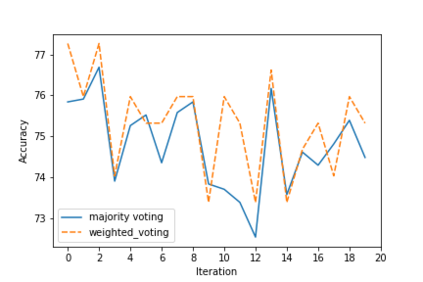

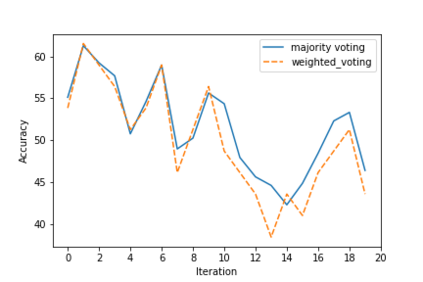

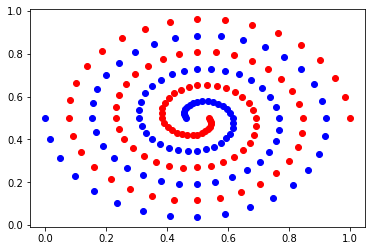

Ensemble learning has been widely successful in machine learning, with major advantages over other learning methods. Bagging is a prominent ensemble learning method that creates subsets of the data, known as bags, each of which is used to train an individual learner such as a decision tree. Random forest is a prominent example of bagging with additional features in the learning process. A limitation of bagging is high bias (model under-fitting) in the aggregated prediction when the individual learners have high bias. Evolutionary algorithms have been prominent for optimisation problems and have also been used for machine learning. They are gradient-free methods that maintain a diverse population of candidate solutions from which new solutions are created. In conventional bagged ensemble learning, the bags are created once and their content, in terms of training examples, is fixed over the learning process. In this paper, we propose evolutionary bagged ensemble learning, in which we use evolutionary algorithms to evolve the content of the bags in order to enhance the ensemble by iteratively providing diversity in the bags. The results show that our evolutionary bagging method outperforms conventional ensemble methods (bagging and random forests) on several benchmark datasets under certain constraints. Evolutionary bagging can inherently sustain a diverse set of bags without sacrificing any data.
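To make the idea of evolving bag contents concrete, the following is a minimal sketch and not the method evaluated in the paper. The abstract does not specify the base learner details, the fitness measure, or the evolutionary operators, so everything here is an assumption chosen for illustration: a decision stump as the base learner, fitness taken as a bag's learner accuracy on the full training set, truncation selection, one-point crossover between bags, and mutation that swaps in random training examples. All function and parameter names (`fit_stump`, `evolve_bags`, `mutation_rate`, and so on) are hypothetical. Labels are assumed to be binary (0 or 1).

```python
import random
from collections import Counter

def fit_stump(bag):
    """Fit a decision stump (single-feature threshold rule) to a bag of (x, y) pairs."""
    best = None
    for f in range(len(bag[0][0])):
        for x, _ in bag:
            t = x[f]  # candidate threshold taken from the bag itself
            for left, right in ((0, 1), (1, 0)):
                correct = sum((left if xi[f] <= t else right) == yi for xi, yi in bag)
                if best is None or correct > best[0]:
                    best = (correct, f, t, left, right)
    _, f, t, left, right = best
    return lambda x: left if x[f] <= t else right

def accuracy(model, data):
    """Fraction of (x, y) pairs in data that the model labels correctly."""
    return sum(model(x) == y for x, y in data) / len(data)

def majority_vote(models, x):
    """Aggregate the ensemble by returning the most common predicted label."""
    return Counter(m(x) for m in models).most_common(1)[0][0]

def evolve_bags(train, n_bags=8, bag_size=20, generations=10,
                mutation_rate=0.1, seed=0):
    """Evolve the contents of the bags, then train one stump per final bag.

    Assumes n_bags >= 4 so that at least two parents survive selection.
    """
    rng = random.Random(seed)
    # Conventional bagging step: sample each bag with replacement.
    bags = [[rng.choice(train) for _ in range(bag_size)] for _ in range(n_bags)]
    for _ in range(generations):
        # Assumed fitness: accuracy of the bag's learner on the full training set.
        ranked = sorted(bags, key=lambda b: accuracy(fit_stump(b), train),
                        reverse=True)
        parents = ranked[: n_bags // 2]  # truncation selection (assumption)
        children = []
        while len(parents) + len(children) < n_bags:
            a, b = rng.sample(parents, 2)
            cut = rng.randrange(1, bag_size)
            child = a[:cut] + b[cut:]  # one-point crossover between two bags
            # Mutation: replace some examples with random training examples.
            child = [rng.choice(train) if rng.random() < mutation_rate else s
                     for s in child]
            children.append(child)
        bags = parents + children
    return [fit_stump(b) for b in bags]
```

A call such as `models = evolve_bags(train)` followed by `majority_vote(models, x)` gives the aggregated prediction for a feature tuple `x`. Because mutation keeps drawing from the full training set, no examples are permanently discarded in this sketch, which is one way to read the abstract's remark about sustaining diverse bags without sacrificing data.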
