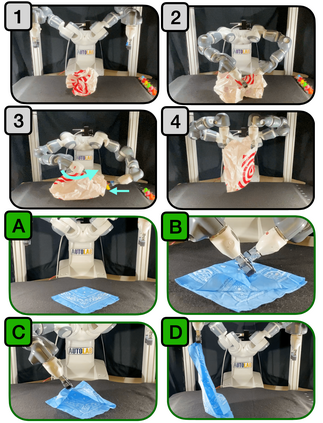

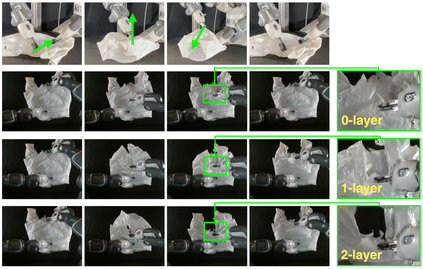

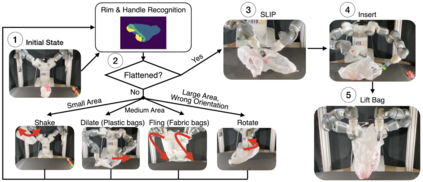

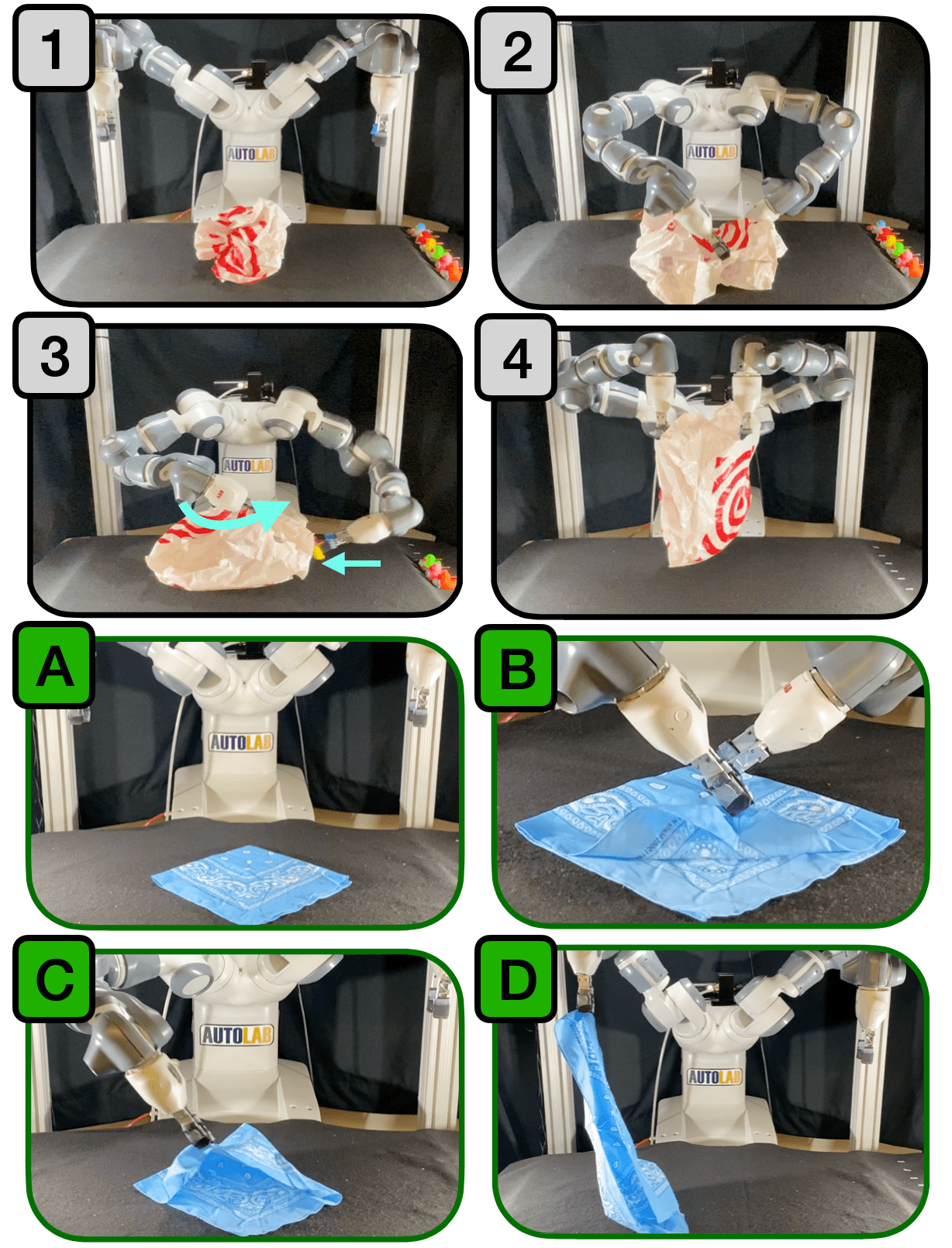

Many fabric-handling and 2D deformable-material tasks in homes and industry require singulating layers of material, such as opening a bag or arranging garments for sewing. In contrast to methods requiring specialized sensing or end effectors, we use only visual observations with ordinary parallel-jaw grippers. We propose SLIP: Singulating Layers using Interactive Perception, and apply SLIP to the task of autonomous bagging. We develop SLIP-Bagging, a bagging algorithm that manipulates a plastic or fabric bag from an unstructured state and uses SLIP to grasp the top layer of the bag to open it for object insertion. In physical experiments, a YuMi robot achieves a success rate of 67% to 81% across bags of a variety of materials, shapes, and sizes, significantly improving in success rate and generality over prior work. Experiments also suggest that SLIP can be applied to tasks such as singulating layers of folded cloth and garments. Supplementary material is available at https://sites.google.com/view/slip-bagging/.
