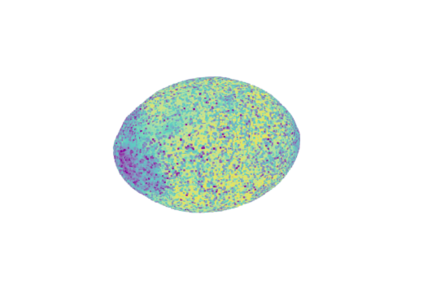

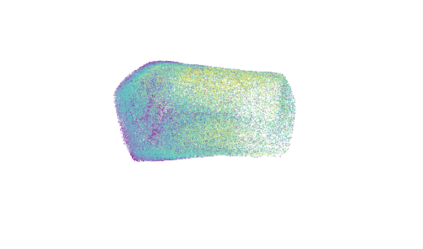

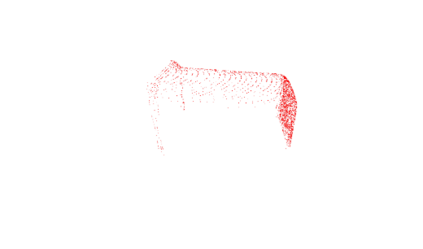

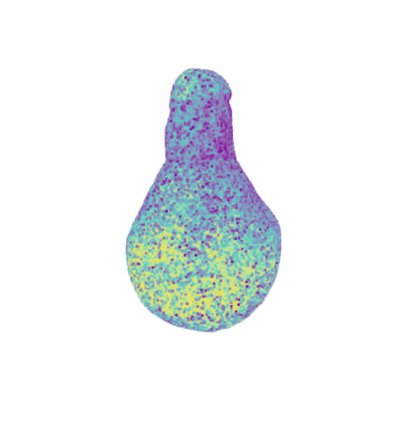

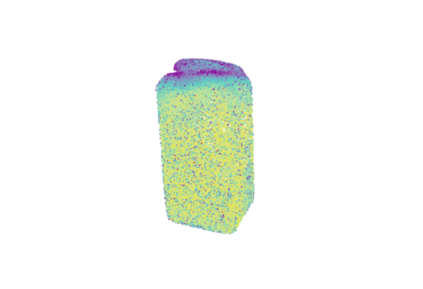

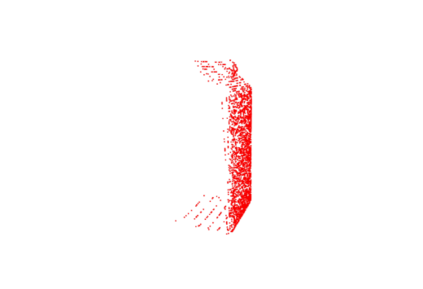

Many robotic tasks involving some form of 3D visual perception greatly benefit from a complete knowledge of the working environment. However, robots often have to tackle unstructured environments and their onboard visual sensors can only provide incomplete information due to limited workspaces, clutter or object self-occlusion. In recent years, deep learning architectures for shape completion have begun taking traction as effective means of inferring a complete 3D object representation from partial visual data. Nevertheless, most of the existing state-of-the-art approaches provide a fixed output resolution in the form of voxel grids, strictly related to the size of the neural network output stage. While this is enough for some tasks, e.g. obstacle avoidance in navigation, grasping and manipulation require finer resolutions and simply scaling up the neural network outputs is computationally expensive. In this paper, we address this limitation by proposing an object shape completion method based on an implicit 3D representation providing a confidence value for each reconstructed point. As a second contribution, we propose a gradient-based method for efficiently sampling such implicit function at an arbitrary resolution, tunable at inference time. We experimentally validate our approach by comparing reconstructed shapes with ground truths, and by deploying our shape completion algorithm in a robotic grasping pipeline. In both cases, we compare results with a state-of-the-art shape completion approach.

翻译:许多涉及某种形式的三维视觉感知的机器人任务从对工作环境的完整了解中获益匪浅,然而,机器人往往必须处理非结构化的环境,其机载视觉传感器只能提供因有限的工作空间、杂乱或物体自我封闭而不完整的信息。近年来,用于完成形状的深层次学习结构开始采用牵引作为从部分视觉数据推断完整的三维物体表示的有效手段。然而,大多数现有最先进的方法以自愿电网的形式提供固定输出分辨率,其形式严格与神经网络输出阶段的大小有关。虽然这足以满足某些任务,例如避免导航障碍、掌握和操纵需要更细的分辨率,而只是扩大神经网络产出的尺度计算成本很高。在本文件中,我们提出一个基于隐含的三维表示,为每个重建的点提供信任价值的完成方法。作为第二种贡献,我们建议一种基于梯度的方法,在任意解析、可测量的网络输出阶段有效取样这种隐含的功能。我们实验性地将完成过程与我们的方法进行对比,通过实验性的方式将我们的完成方式与我们完成过程的模型进行对比。