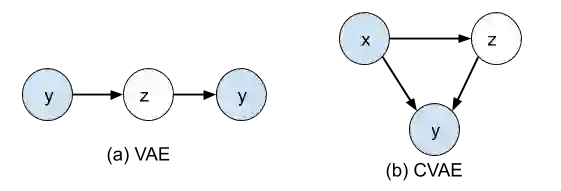

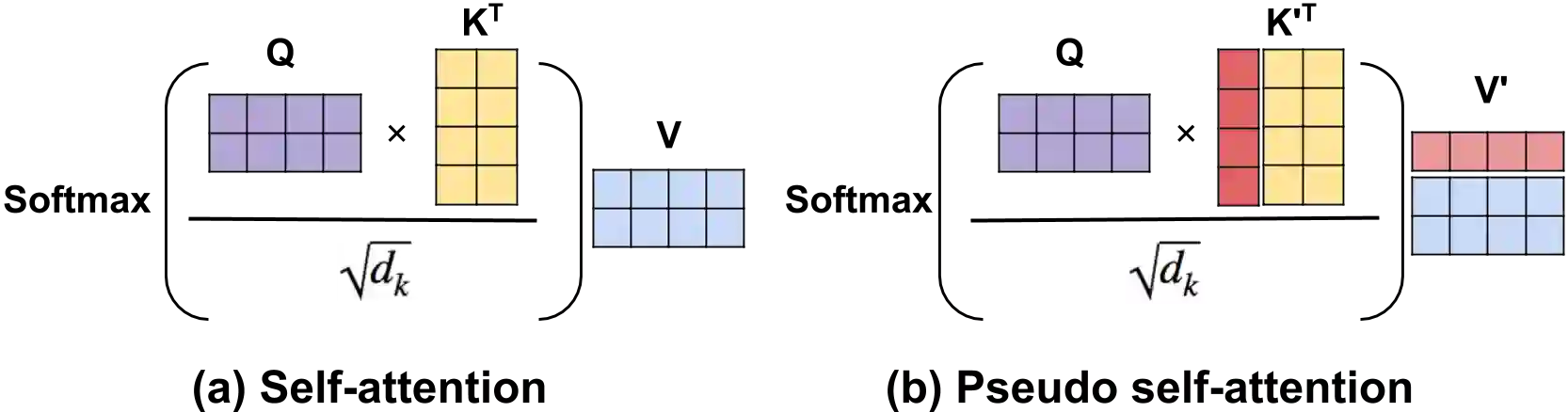

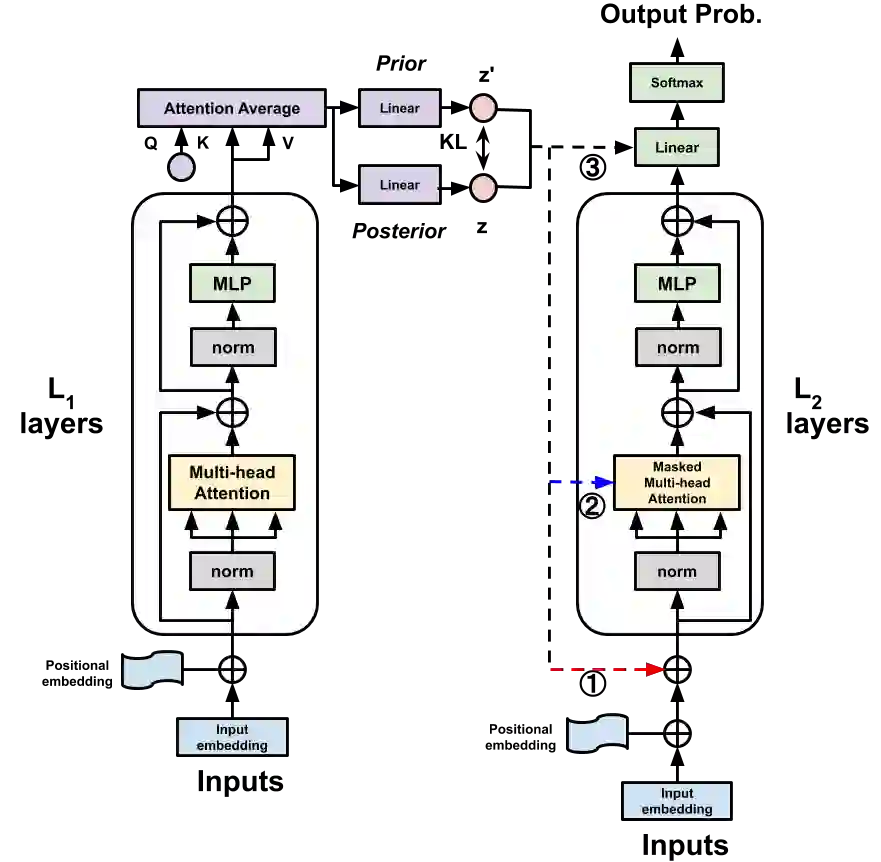

We investigate large-scale latent variable models (LVMs) for neural story generation, an under-explored application for open-domain long text, with objectives in two threads: generation effectiveness and controllability. LVMs, especially the variational autoencoder (VAE), have achieved both effective and controllable generation by exploiting flexible distributional latent representations. Recently, Transformers and their variants have achieved remarkable effectiveness without explicit latent representation learning, and thus lack satisfactory controllability in generation. In this paper, we advocate reviving latent variable modeling, essentially the power of representation learning, in the era of Transformers to enhance controllability without hurting state-of-the-art generation effectiveness. Specifically, we integrate latent representation vectors with a Transformer-based pre-trained architecture to build a conditional variational autoencoder (CVAE). Model components such as the encoder, the decoder, and the variational posterior are all built on top of pre-trained language models, specifically GPT2 in this paper. Experiments demonstrate the state-of-the-art conditional generation ability of our model, as well as its excellent representation learning capability and controllability.
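For orientation, the textbook training objective of a CVAE is the conditional evidence lower bound, sketched below. The notation is an assumption introduced here and does not come from the abstract: $x$ is the conditioning input (for example a story prompt), $y$ is the generated text, $z$ is the latent vector, $q_\phi$ is the variational posterior, and $p_\theta$ covers the prior and the decoder. The paper's actual objective may differ in detail.

$$
\log p_\theta(y \mid x) \;\ge\; \mathbb{E}_{q_\phi(z \mid x, y)}\big[\log p_\theta(y \mid x, z)\big] \;-\; \mathrm{KL}\big(q_\phi(z \mid x, y)\,\|\,p_\theta(z \mid x)\big)
$$

The first term rewards reconstructing $y$ from the latent code and the condition. The KL term keeps the posterior close to the conditional prior, so that latent vectors sampled from the prior at generation time remain usable by the decoder.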
