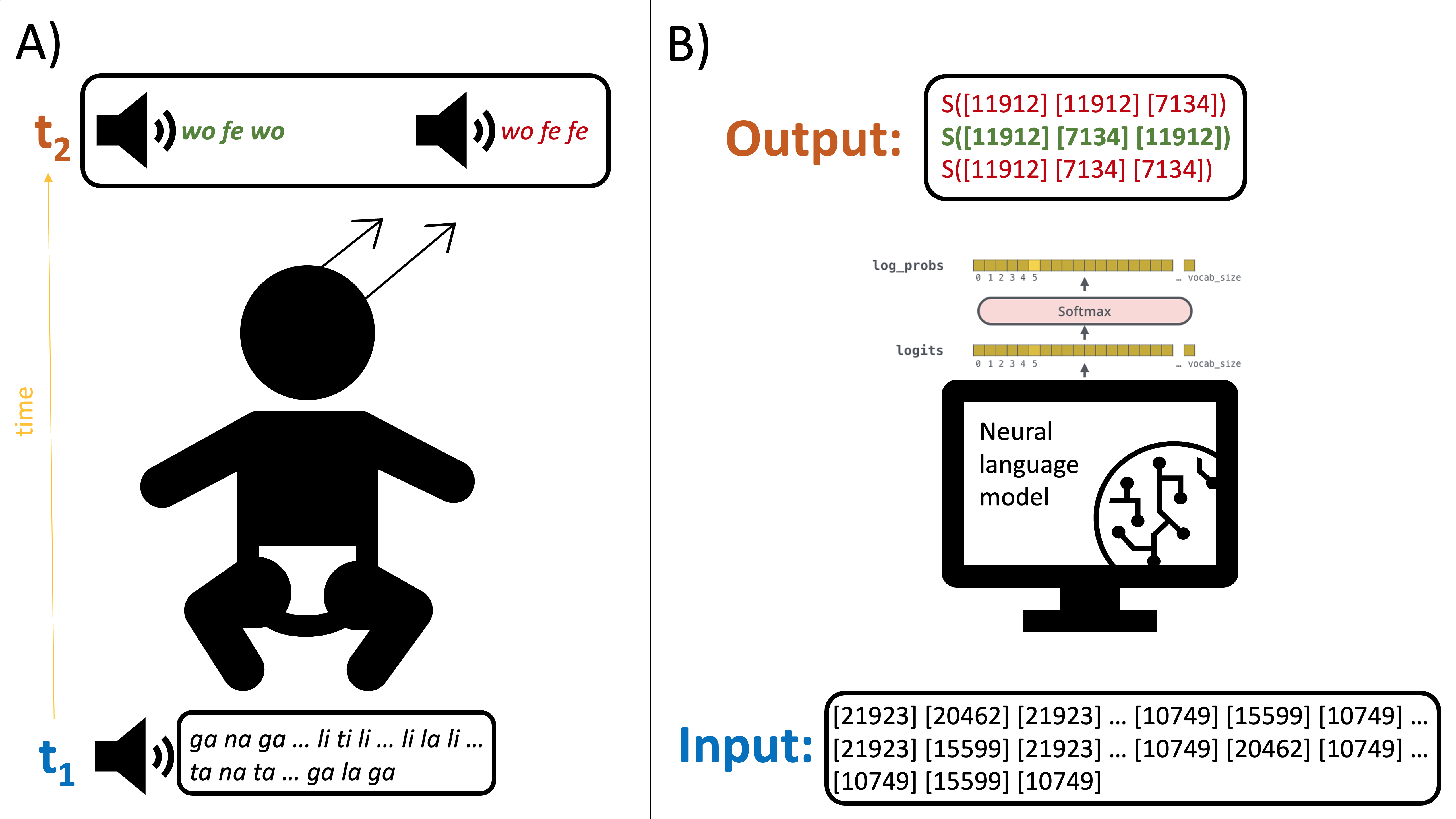

In recent years, deep neural language models have made strong progress on a wide range of NLP tasks. This work explores one facet of the question of whether state-of-the-art NLP models exhibit elementary mechanisms known from human cognition. The exploration focuses on a relatively primitive mechanism that is well supported by evidence from psycholinguistic experiments with infants. The computation of "abstract sameness relations" is assumed to play an important role in human language acquisition and processing, especially in learning more complex grammar rules. To investigate this mechanism in BERT and other pre-trained language models (PLMs), we took the experimental designs from the infant studies as our starting point. On this basis, we designed experimental settings in which each element of the original studies was mapped to a component of language models. Even though the task in our experiments was relatively simple, the results suggest that the cognitive faculty of computing abstract sameness relations is stronger in infants than in any of the investigated PLMs.
