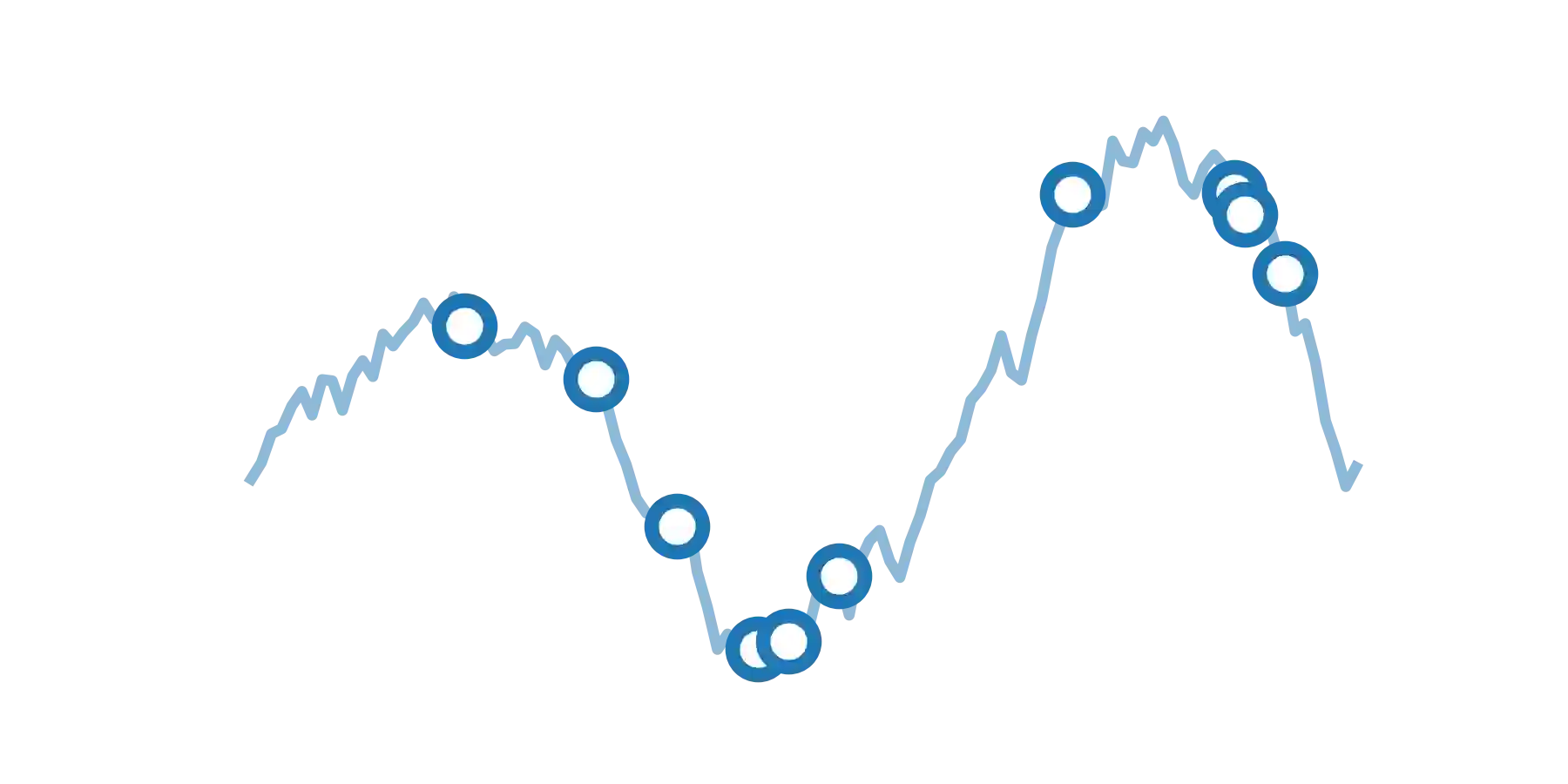

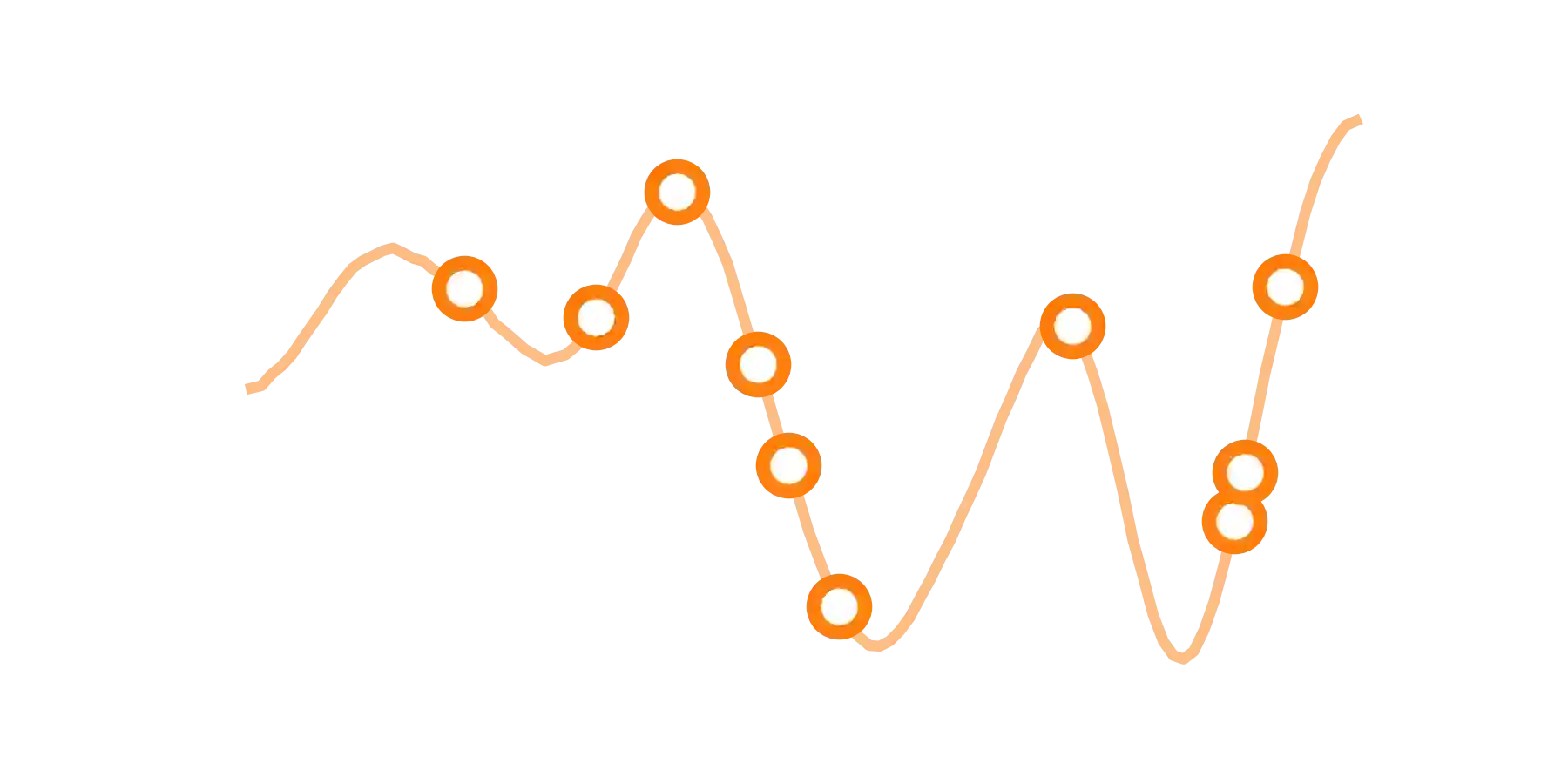

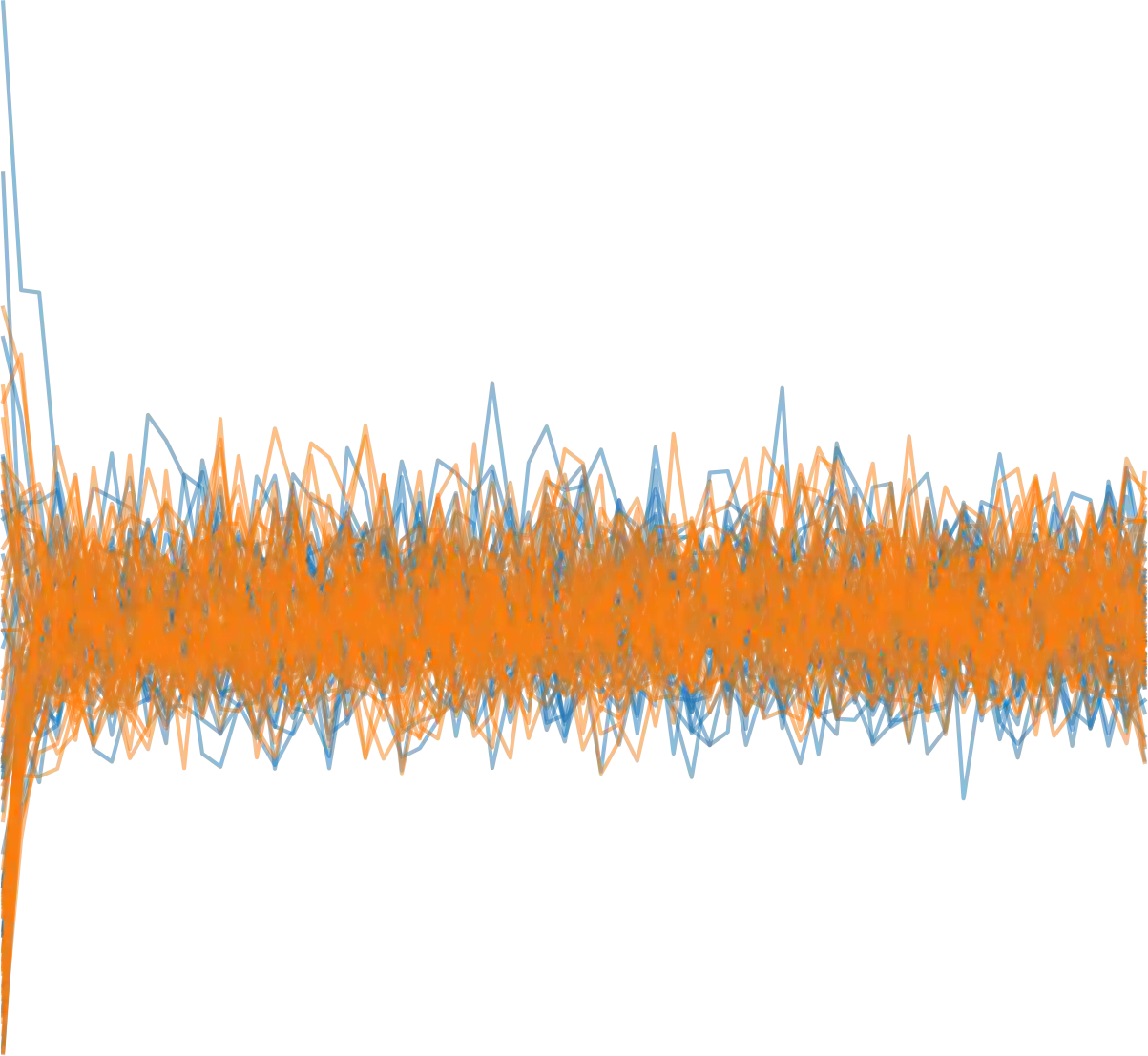

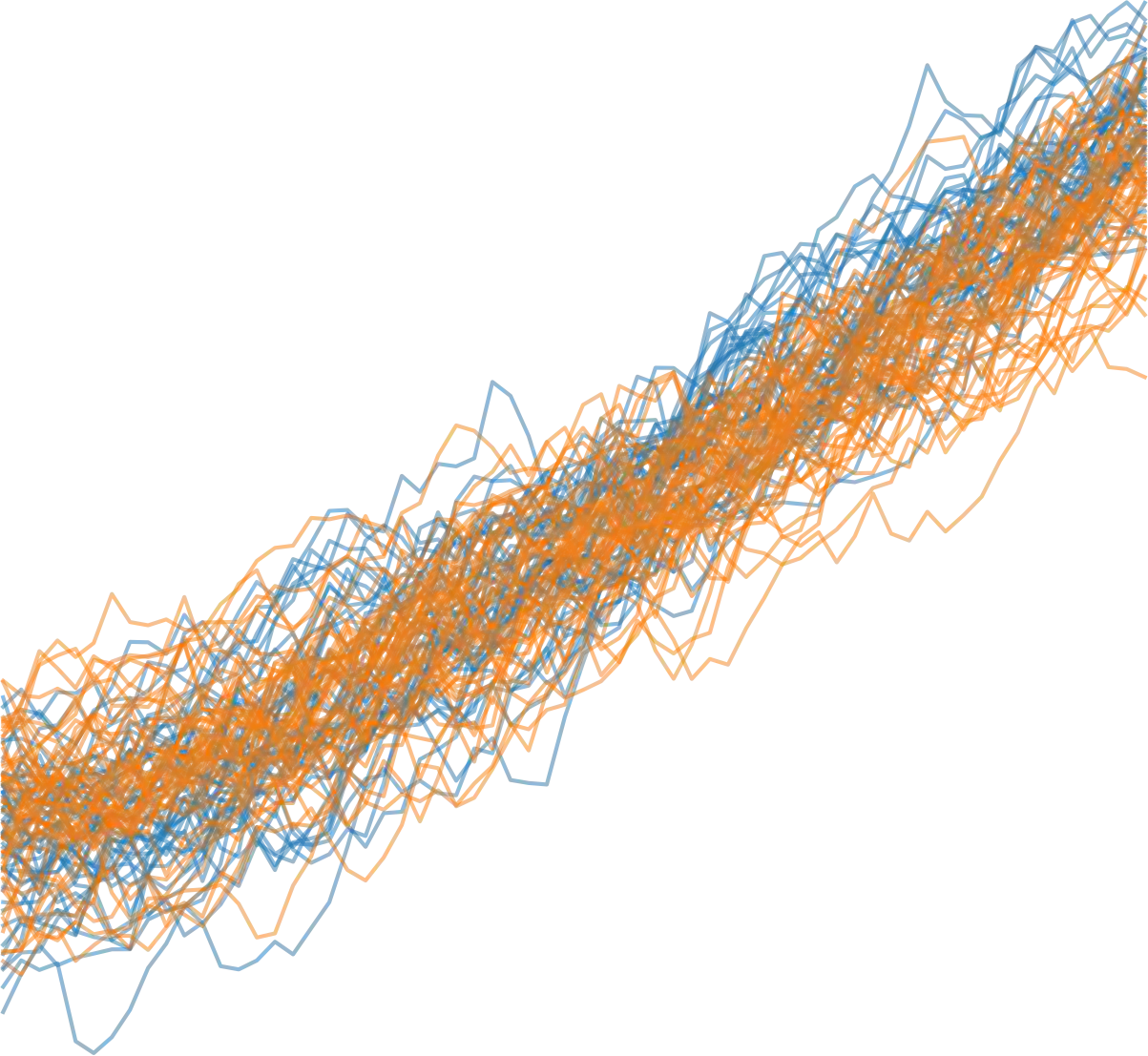

Temporal data such as time series are often observed at irregular intervals, which is a challenging setting for existing machine learning methods. To tackle this problem, we view such data as samples from an underlying continuous function. We then define a diffusion-based generative model that adds noise from a predefined stochastic process while preserving the continuity of the resulting underlying function. A neural network is trained to reverse this process, which allows us to sample new realizations from the learned distribution. We define suitable stochastic processes as noise sources and introduce novel denoising and score-matching models on processes. Further, we show how to apply this approach to multivariate probabilistic forecasting and imputation tasks. Through extensive experiments, we demonstrate that our method outperforms previous models on synthetic and real-world datasets.
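
The key idea of the forward (noising) process described above, adding noise that is correlated in time so the noised data still behaves like a continuous function, can be illustrated with a minimal sketch. It assumes a Gaussian process with a squared-exponential (RBF) kernel as the noise source and a DDPM-style closed-form forward step; the kernel, length scale, noise schedule, and the function names `rbf_kernel`, `sample_gp_noise`, and `forward_diffuse` are hypothetical illustrative choices, not taken from the paper. The learned reverse (denoising) network is not shown.

```python
import numpy as np


def rbf_kernel(t: np.ndarray, length_scale: float = 0.2) -> np.ndarray:
    """Squared-exponential covariance between every pair of time stamps."""
    diff = t[:, None] - t[None, :]
    return np.exp(-0.5 * (diff / length_scale) ** 2)


def sample_gp_noise(t: np.ndarray, rng: np.random.Generator,
                    length_scale: float = 0.2, jitter: float = 1e-6) -> np.ndarray:
    """Draw one GP sample evaluated at the (possibly irregular) times t.

    With a smooth kernel, nearby time stamps receive similar noise values,
    unlike i.i.d. Gaussian noise. The jitter keeps the Cholesky factorization
    numerically stable when two time stamps are very close.
    """
    cov = rbf_kernel(t, length_scale) + jitter * np.eye(len(t))
    chol = np.linalg.cholesky(cov)
    return chol @ rng.standard_normal(len(t))


def forward_diffuse(x0: np.ndarray, t: np.ndarray, n: int,
                    alpha_bar: np.ndarray, rng: np.random.Generator,
                    length_scale: float = 0.2):
    """Assumed DDPM-style step q(x_n | x_0), with GP noise replacing i.i.d. noise."""
    eps = sample_gp_noise(t, rng, length_scale)
    x_n = np.sqrt(alpha_bar[n]) * x0 + np.sqrt(1.0 - alpha_bar[n]) * eps
    return x_n, eps


# Hypothetical setup: irregularly spaced observation times and a linear beta schedule.
rng = np.random.default_rng(0)
t = np.sort(rng.uniform(0.0, 1.0, size=50))  # irregular time stamps in [0, 1]
x0 = np.sin(2 * np.pi * t)                   # toy underlying continuous function
betas = np.linspace(1e-4, 0.2, 100)          # assumed noise schedule
alpha_bar = np.cumprod(1.0 - betas)          # cumulative signal-retention factors

# Noise the series all the way to the last diffusion step.
x_n, eps = forward_diffuse(x0, t, n=99, alpha_bar=alpha_bar, rng=rng)
```

Replacing `sample_gp_noise` with `rng.standard_normal(len(t))` would give the usual i.i.d. diffusion noise, which treats each observation independently; using a stochastic process as the noise source is what lets the noised values still be read as samples of a function defined at arbitrary time stamps. In a full model, a neural network would then be trained to predict `eps` (or the score) from `x_n`, `t`, and `n`.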
