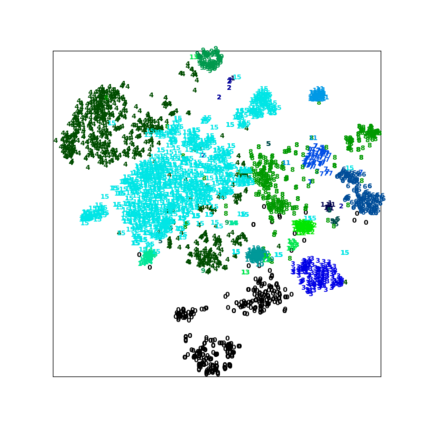

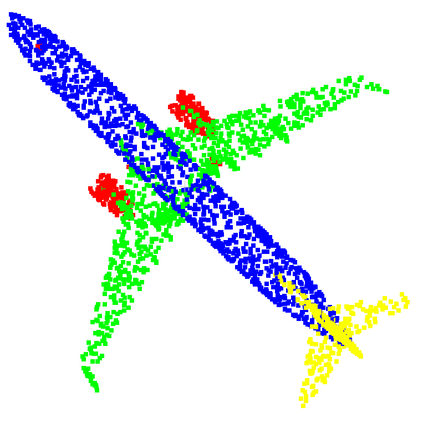

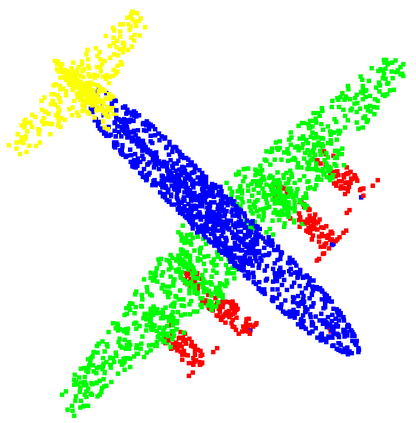

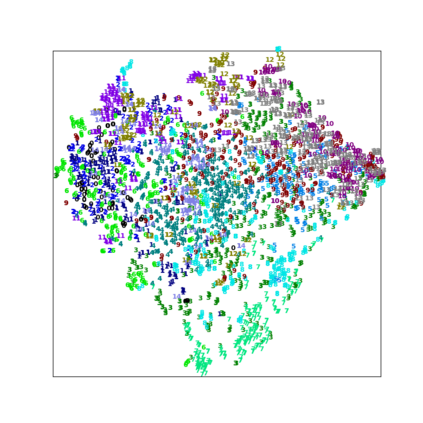

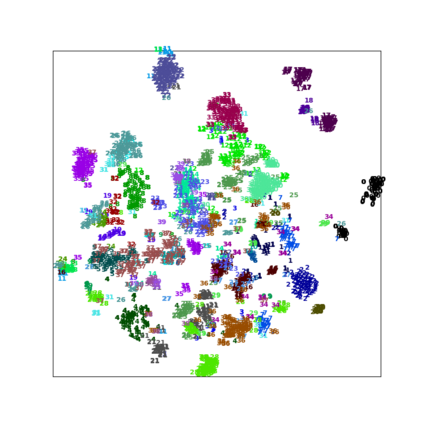

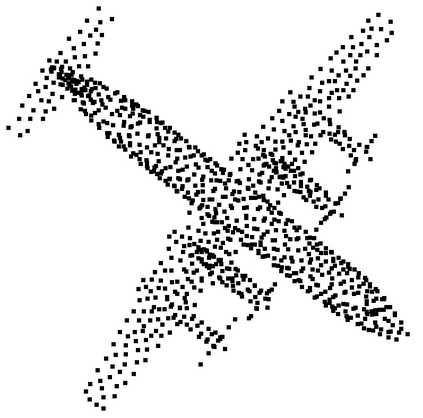

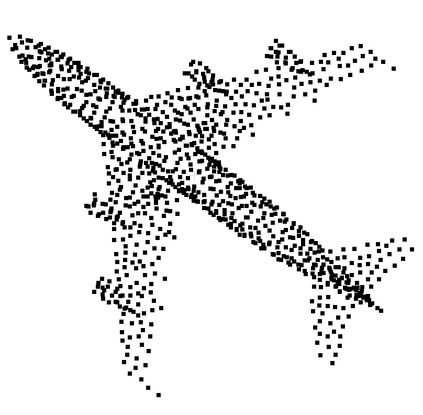

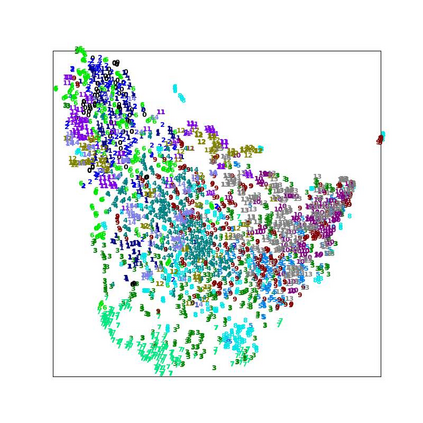

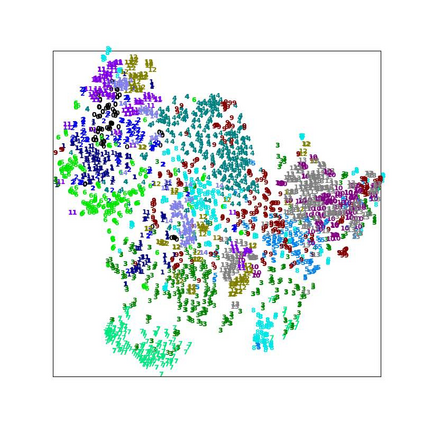

Recently, pre-trained point cloud models have found extensive applications in downstream tasks such as object classification. However, these tasks usually require full fine-tuning of the model. Full fine-tuning is storage-intensive because every task keeps its own complete copy of the weights, which limits the real-world application of pre-trained models. Inspired by the success of visual prompt tuning (VPT) in computer vision, we explore applying prompt tuning, an efficient alternative to full fine-tuning for large-scale models, to pre-trained point cloud models in order to reduce storage costs. However, applying conventional static VPT to point clouds is non-trivial because point cloud data is so diverse in distribution; for instance, scanned point clouds exhibit various types of missing or noisy points. To address this issue, we propose Instance-aware Dynamic Prompt Tuning (IDPT) for pre-trained point cloud models, which uses a prompt module to perceive the semantic prior features of each instance. This semantic prior facilitates learning a unique prompt for each instance, enabling robust adaptation of pre-trained point cloud models to downstream tasks. Notably, extensive experiments on downstream tasks demonstrate that IDPT outperforms full fine-tuning on most tasks with only 7% of the trainable parameters, significantly reducing storage pressure. Code is available at <https://github.com/zyh16143998882/IDPT>.
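The following dependency-free Python snippet is a minimal sketch that makes the static-versus-dynamic distinction concrete. It is not the IDPT implementation. Every name in it is a hypothetical stand-in chosen for illustration: the prompt module (mean pooling followed by a single linear layer), the class and function names, and the toy feature values. The snippet shows two things. First, a static, VPT-style prompt is identical for every instance, whereas an instance-aware prompt is computed from each instance's own token features. Second, it shows how the fraction of trainable parameters relative to full fine-tuning is counted when the backbone stays frozen.

```python
import random
from typing import List

Vector = List[float]


def mean_pool(tokens: List[Vector]) -> Vector:
    """Average token features into one instance-level descriptor."""
    dim = len(tokens[0])
    return [sum(t[d] for t in tokens) / len(tokens) for d in range(dim)]


def linear(x: Vector, weight: List[Vector], bias: Vector) -> Vector:
    """Compute y = W x + b, with W stored as out_dim rows of length in_dim."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


class StaticPrompt:
    """VPT-style prompt: one learnable vector shared by every instance."""

    def __init__(self, dim: int, rng: random.Random) -> None:
        self.prompt = [rng.gauss(0.0, 0.02) for _ in range(dim)]

    def __call__(self, tokens: List[Vector]) -> List[Vector]:
        # Prepend the same prompt no matter what the instance looks like.
        return [list(self.prompt)] + tokens

    def num_params(self) -> int:
        return len(self.prompt)


class DynamicPrompt:
    """Instance-aware prompt computed from the instance's own tokens.

    Hypothetical prompt module: mean pooling + one linear layer.
    """

    def __init__(self, dim: int, rng: random.Random) -> None:
        self.weight = [[rng.gauss(0.0, 0.02) for _ in range(dim)] for _ in range(dim)]
        self.bias = [0.0] * dim

    def __call__(self, tokens: List[Vector]) -> List[Vector]:
        prompt = linear(mean_pool(tokens), self.weight, self.bias)
        return [prompt] + tokens

    def num_params(self) -> int:
        return len(self.weight) * len(self.weight[0]) + len(self.bias)


def relative_trainable(prompt_params: int, head_params: int, backbone_params: int) -> float:
    """Trainable parameters of prompt tuning (prompt + head) relative to
    full fine-tuning (backbone + head). The backbone is frozen in the former."""
    return (prompt_params + head_params) / (backbone_params + head_params)


# Toy token features standing in for the output of a frozen backbone
# (the backbone itself is omitted); the values are made up.
rng = random.Random(0)
dim = 4
clean = [[1.0, 0.0, 0.5, 0.2], [0.9, 0.1, 0.4, 0.3]]
noisy = [[1.0, 0.0, 0.5, 0.2], [5.0, -3.0, 2.0, 4.0]]  # one outlier token

static = StaticPrompt(dim, rng)
dynamic = DynamicPrompt(dim, rng)

print(static(clean)[0] == static(noisy)[0])    # same prompt for both instances
print(dynamic(clean)[0] == dynamic(noisy)[0])  # prompt depends on the instance
print(static.num_params(), dynamic.num_params())
print(relative_trainable(dynamic.num_params(), 10, 1000))  # toy sizes only
```

In this setup, only the prompt module and the task head would need to be stored per downstream task, which is where the storage savings come from. The toy parameter counts above are illustrative and say nothing about the 7% figure reported for IDPT.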
