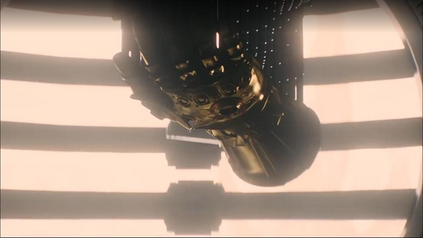

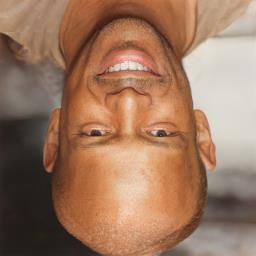

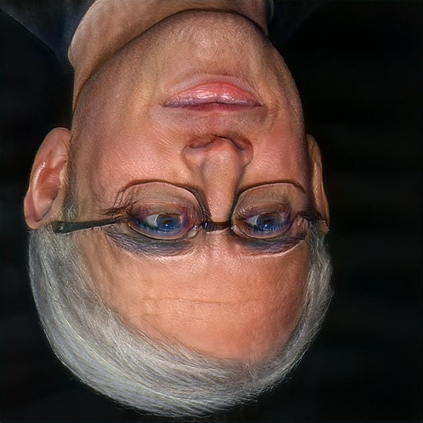

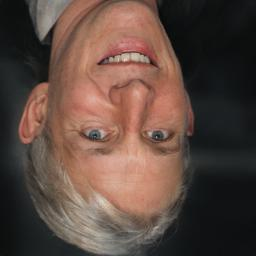

We study joint video and language (VL) pre-training to enable cross-modality learning and benefit a wide range of downstream VL tasks. Existing works either extract low-quality video features or learn limited text embeddings, neglecting that high-resolution videos and diversified semantics can significantly improve cross-modality learning. In this paper, we propose a novel High-resolution and Diversified VIdeo-LAnguage pre-training model (HD-VILA) for many visual tasks. In particular, we collect a large dataset with two distinct properties: 1) it is the first high-resolution dataset, including 371.5k hours of 720p videos, and 2) it is the most diversified dataset, covering 15 popular YouTube categories. To enable VL pre-training, we jointly optimize the HD-VILA model with a hybrid Transformer that learns rich spatiotemporal features and a multimodal Transformer that enforces interactions between the learned video features and diversified texts. Our pre-trained model achieves new state-of-the-art results on 10 VL understanding tasks and on 2 novel text-to-visual generation tasks. For example, we outperform SOTA models with relative increases in R@1 of 38.5% on the zero-shot MSR-VTT text-to-video retrieval task and 53.6% on the high-resolution LSMDC dataset. The learned VL embedding is also effective in generating visually pleasing and semantically relevant results in text-to-visual manipulation and super-resolution tasks.
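The retrieval gains are reported as relative increases in R@1 (Recall@1). As a minimal sketch, assuming the usual definitions rather than the paper's own evaluation code, R@K for text-to-video retrieval can be computed from a text-by-video similarity matrix in which the ground-truth video for query i is video i, and a relative increase can be taken as (new - old) / old. The function names `recall_at_k` and `relative_increase` and the toy similarity scores are hypothetical illustrations, not values from the paper.

```python
import numpy as np


def recall_at_k(sim: np.ndarray, k: int = 1) -> float:
    """Recall@K for text-to-video retrieval (hypothetical helper).

    Assumes a square matrix: sim[i, j] is the similarity between text
    query i and video j, and the correct video for query i is video i.
    """
    # Score of the ground-truth video for each query, shape (N, 1).
    correct = np.diag(sim)[:, None]
    # 0-based rank of the correct video: how many videos score strictly
    # higher than it (ties are resolved in the query's favor).
    ranks = (sim > correct).sum(axis=1)
    # Fraction of queries whose correct video lands in the top K.
    return float((ranks < k).mean())


def relative_increase(new: float, old: float) -> float:
    """Relative improvement of `new` over `old`, as a fraction (assumed definition)."""
    return (new - old) / old


# Toy similarity scores (made up for illustration): 3 text queries x 3 videos.
toy_sim = np.array([
    [0.9, 0.1, 0.3],  # query 0: correct video ranked first
    [0.2, 0.4, 0.8],  # query 1: video 2 outranks the correct video 1
    [0.1, 0.2, 0.7],  # query 2: correct video ranked first
])

print("R@1:", recall_at_k(toy_sim, k=1))   # 2 of 3 queries hit at rank 1
print("R@5:", recall_at_k(toy_sim, k=5))
print("relative increase:", relative_increase(13.0, 10.0))
```

Under this reading, a "38.5% relative increase in R@1" means the new R@1 is 1.385 times the previous best, not 38.5 percentage points higher. Evaluation protocols may break score ties differently from the strict comparison used here.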
