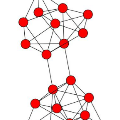

Cross-resolution image alignment is a key problem in multiscale gigapixel photography, which requires estimating a homography matrix from images with a large resolution gap. Existing deep homography methods concatenate the input images or features and neglect the explicit formulation of correspondences between them, which degrades accuracy in cross-resolution settings. In this paper, we treat cross-resolution homography estimation as a multimodal problem and propose a local transformer network embedded within a multiscale structure to explicitly learn correspondences between the multimodal inputs, namely, input images with different resolutions. The proposed local transformer computes a separate local attention map for each position in the feature map. By combining the local transformer with the multiscale structure, the network is able to capture both long-range and short-range correspondences efficiently and accurately. Experiments on both the MS-COCO dataset and a real-captured cross-resolution dataset show that the proposed network outperforms existing state-of-the-art feature-based and deep-learning-based homography estimation methods, and is able to accurately align images under a $10\times$ resolution gap.
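To make the idea of a per-position local attention map concrete, the following is a minimal NumPy sketch and not the network described in the paper. The function name `local_cross_attention`, the `radius` parameter, the dot-product scoring, and the zero padding at the borders are all assumptions chosen for illustration; the abstract does not specify any of them.

```python
import numpy as np

def local_cross_attention(feat_q, feat_k, radius=1):
    """Illustrative local attention between two feature maps (hypothetical helper).

    feat_q, feat_k: float arrays of shape (H, W, C), e.g. features of the two
    input images. For every position in feat_q, attention weights are computed
    over the (2*radius+1) x (2*radius+1) window of feat_k centered at the same
    position. Returns an array of shape (H, W, (2*radius+1)**2).
    """
    h, w, c = feat_q.shape
    k = 2 * radius + 1
    # Zero-pad the key features so that windows at the border are defined.
    # Padded positions get a score of 0 and still take part in the softmax;
    # a real implementation might mask them instead (assumption).
    padded = np.pad(feat_k, ((radius, radius), (radius, radius), (0, 0)))
    scores = np.empty((h, w, k * k))
    idx = 0
    for dy in range(k):
        for dx in range(k):
            # feat_k shifted by (dy - radius, dx - radius) relative to feat_q
            shifted = padded[dy:dy + h, dx:dx + w, :]
            # Scaled dot-product similarity per position
            scores[:, :, idx] = (feat_q * shifted).sum(axis=-1) / np.sqrt(c)
            idx += 1
    # Softmax over the window dimension (numerically stabilized)
    scores -= scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)
    return weights

rng = np.random.default_rng(0)
fq = rng.standard_normal((4, 5, 8))
fk = rng.standard_normal((4, 5, 8))
attn = local_cross_attention(fq, fk, radius=1)
```

In a multiscale setting, the same small window applied to a coarse feature map spans a large displacement in the original image, while at fine levels it covers only small residual displacements, which is one plausible reading of how long-range and short-range correspondences can both be captured at modest cost.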
