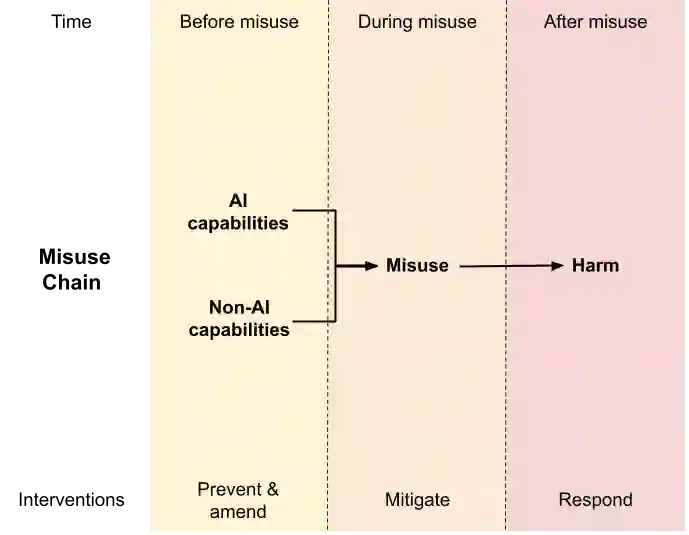

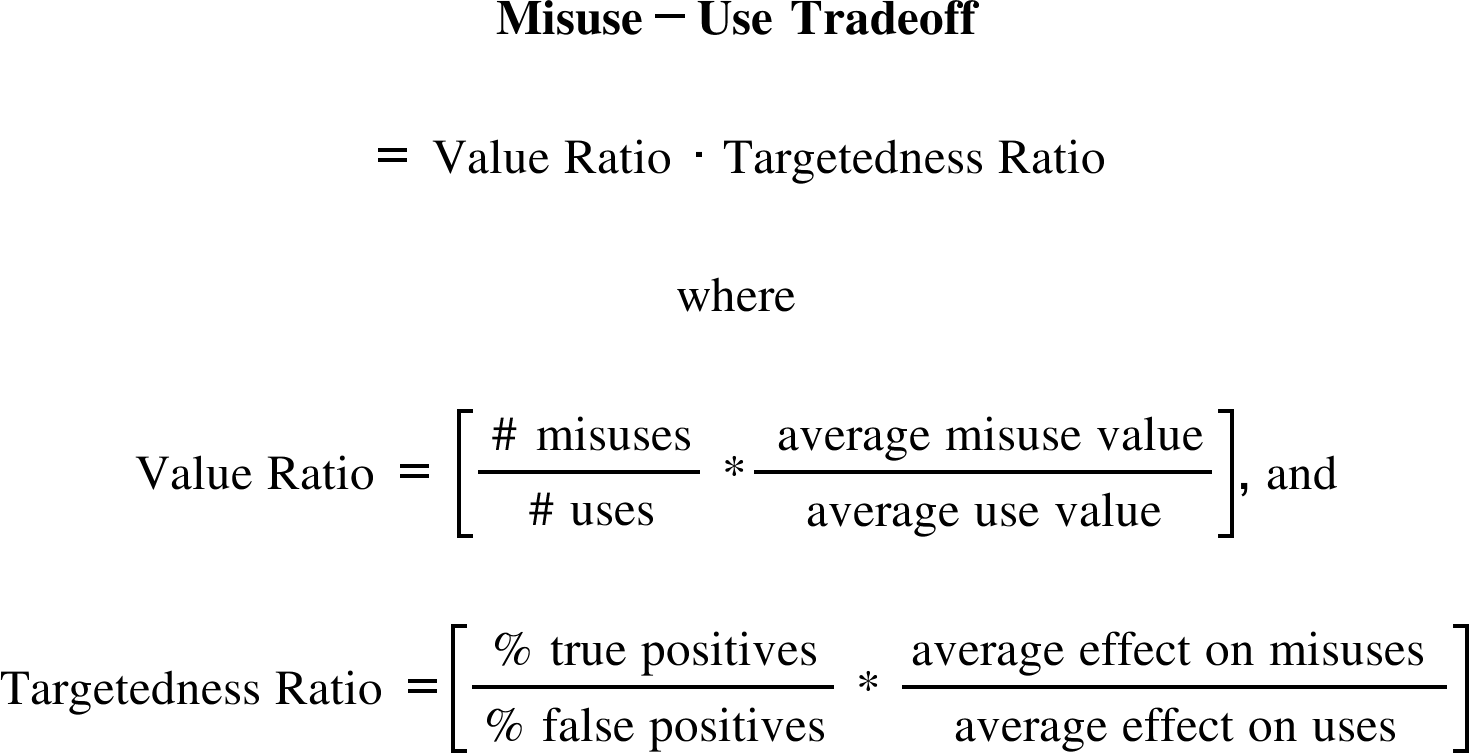

Artificial intelligence (AI) systems will increasingly be used to cause harm as they grow more capable. In fact, AI systems are already starting to be used to automate fraudulent activities, violate human rights, create harmful fake images, and identify dangerous toxins. To prevent some misuses of AI, we argue that targeted interventions on certain capabilities will be warranted. These restrictions may include controlling who can access certain types of AI models, what they can be used for, whether outputs are filtered or can be traced back to their user, and the resources needed to develop them. We also contend that some restrictions on non-AI capabilities needed to cause harm will be required. Though capability restrictions risk reducing use more than misuse (facing an unfavorable Misuse-Use Tradeoff), we argue that interventions on capabilities are warranted when other interventions are insufficient, the potential harm from misuse is high, and there are targeted ways to intervene on capabilities. We provide a taxonomy of interventions that can reduce AI misuse, focusing on the specific steps required for a misuse to cause harm (the Misuse Chain), and a framework to determine if an intervention is warranted. We apply this reasoning to three examples: predicting novel toxins, creating harmful images, and automating spear phishing campaigns.
