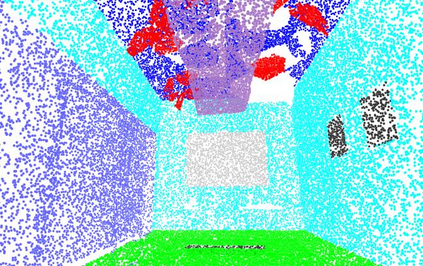

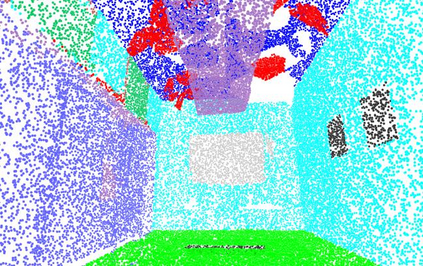

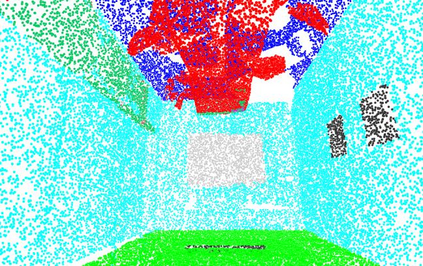

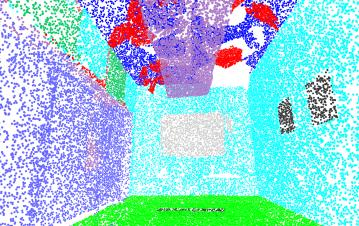

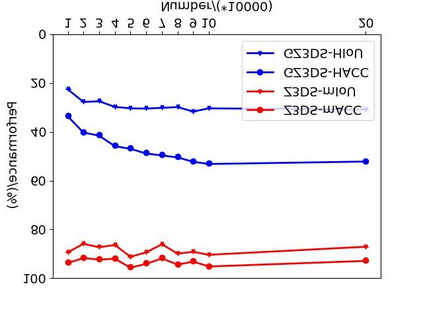

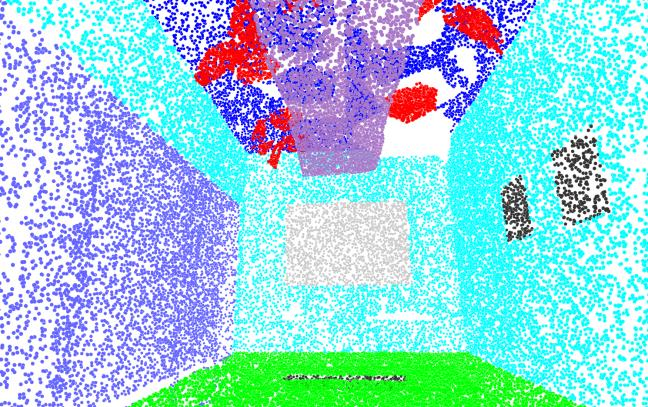

This work is to tackle the problem of point cloud semantic segmentation for 3D hybrid scenes under the framework of zero-shot learning. Here by hybrid, we mean the scene consists of both seen-class and unseen-class 3D objects, a more general and realistic setting in application. To our knowledge, this problem has not been explored in the literature. To this end, we propose a network to synthesize point features for various classes of objects by leveraging the semantic features of both seen and unseen object classes, called PFNet. The proposed PFNet employs a GAN architecture to synthesize point features, where the semantic relationship between seen-class and unseen-class features is consolidated by adapting a new semantic regularizer, and the synthesized features are used to train a classifier for predicting the labels of the testing 3D scene points. Besides we also introduce two benchmarks for algorithmic evaluation by re-organizing the public S3DIS and ScanNet datasets under six different data splits. Experimental results on the two benchmarks validate our proposed method, and we hope our introduced two benchmarks and methodology could be of help for more research on this new direction.

翻译:这项工作是为了在零光学习的框架内解决三维混合场景的点云语分解问题。 这里, 以杂交方式, 我们指的是场景由可见类和看不见类三维对象组成, 一个更一般和现实的应用环境。 据我们所知, 文献中还没有探讨这个问题。 为此, 我们提议建立一个网络, 利用已见和不可见对象类别的语义特征, 将各类对象的点特征综合起来, 称为 PFNet。 拟议的 PFNet 使用一个 GAN 结构来合成点特征, 通过修改一个新的语义定律, 将可见类和不可见类特征合并在一起, 而合成的特征被用来训练一个分类师, 以预测测试三维场景点的标签。 除了我们引入了两个逻辑评估基准, 通过重新组织公共 S3DIS 和 ScanNet 数据集在六种不同数据分解下进行 。 两种基准的实验结果验证了我们提出的方法, 我们希望我们引入的两个基准和方法能够帮助对这一新方向进行更多的研究。