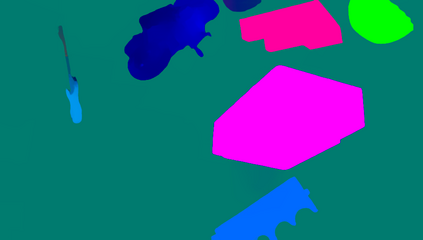

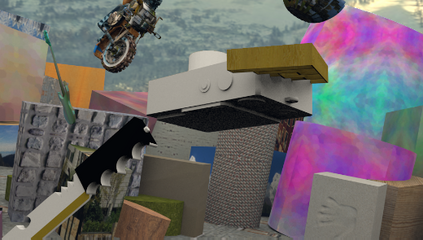

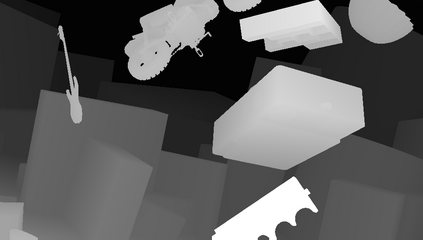

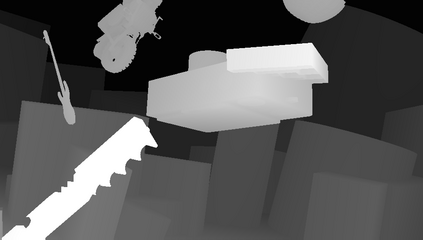

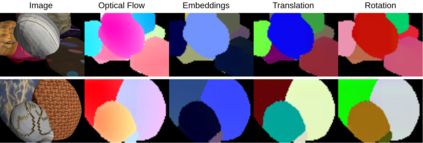

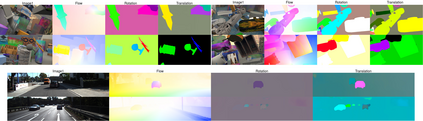

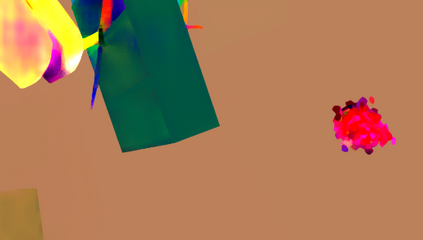

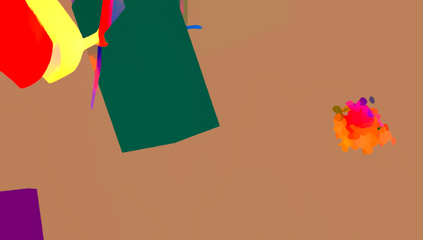

We address the problem of scene flow: given a pair of stereo or RGB-D video frames, estimate pixelwise 3D motion. We introduce RAFT-3D, a new deep architecture for scene flow. RAFT-3D is based on the RAFT model developed for optical flow but iteratively updates a dense field of pixelwise SE3 motion instead of 2D motion. A key innovation of RAFT-3D is rigid-motion embeddings, which represent a soft grouping of pixels into rigid objects. Integral to rigid-motion embeddings is Dense-SE3, a differentiable layer that enforces geometric consistency of the embeddings. Experiments show that RAFT-3D achieves state-of-the-art performance. On FlyingThings3D, under the two-view evaluation, we improved the best published accuracy (d < 0.05) from 30.33% to 83.71%. On KITTI, we achieve an error of 5.77, outperforming the best published method (6.31), despite using no object instance supervision.

翻译:我们处理场景流的问题:给一对立体或RGB-D视频框,估计像素3D运动。我们引入了RAFT-3D,这是场景流的一个新的深层结构。RAFT-3D以为光流开发的RAFT模型为基础,但反复更新了一个密集的像素SE3运动领域,而不是2D运动。RAFT-3D的关键创新是硬性动作嵌入,它代表硬性像素嵌入硬性物体。硬性动作嵌入的软组合是Dense-SE3,这是执行嵌入体几何一致的可差异层。实验显示RAFT-3D取得了最新水平的性能。在两眼评估下,我们提高了最佳的公布精度(d < 0.05) 从30.33%提高到83.71%。在KITTI中,我们发现一个5.77的误差,优于已公布的最佳方法(6.31),尽管没有使用对象实例监督。