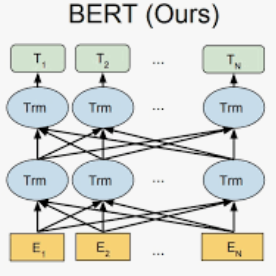

Natural Language Processing (NLP) techniques can help diagnose medical conditions such as depression from a collection of a person's utterances. Depression is a serious medical illness that can adversely affect how one feels, thinks, and acts, and can lead to emotional and physical problems. Because such data are sensitive, privacy measures are needed both when handling them and when training models on them. In this work, we study the effects of applying Differential Privacy (DP), in both centralized and Federated Learning (FL) setups, on training contextualized language models (BERT, ALBERT, RoBERTa and DistilBERT). We offer insights into how to train NLP models privately and which architectures and setups provide more desirable privacy-utility trade-offs. We envisage this work being used in future healthcare and mental health studies to keep medical history private. To this end, we provide an open-source implementation of this work.
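
To make the privacy mechanism more concrete, here is a minimal Python sketch of the clip-and-noise aggregation step that the standard DP-SGD recipe applies to per-example gradients, and that DP federated averaging applies to per-client model updates. It is an illustration under stated assumptions and is not taken from the accompanying implementation. The function names `clip` and `dp_aggregate` and the parameters `max_norm` (the clipping bound) and `noise_multiplier` (noise scale relative to that bound) are hypothetical. Privacy accounting, which computes the resulting (ε, δ), is omitted.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]


def l2_norm(v: Sequence[float]) -> float:
    """Euclidean (L2) norm of a vector."""
    return math.sqrt(sum(x * x for x in v))


def clip(v: Sequence[float], max_norm: float) -> Vector:
    """Scale v down so that its L2 norm is at most max_norm (hypothetical helper)."""
    norm = l2_norm(v)
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [x * scale for x in v]


def dp_aggregate(updates: Sequence[Sequence[float]], max_norm: float,
                 noise_multiplier: float, rng: random.Random) -> Vector:
    """Hypothetical DP aggregation: clip each update, sum the clipped updates,
    add Gaussian noise with std = noise_multiplier * max_norm to each
    coordinate, then average over the number of updates.

    Centralized DP-SGD: `updates` are per-example gradients in a batch.
    DP federated averaging: `updates` are per-client model deltas.
    """
    dim = len(updates[0])
    total = [0.0] * dim
    for u in updates:
        for i, x in enumerate(clip(u, max_norm)):
            total[i] += x
    sigma = noise_multiplier * max_norm
    noisy = [t + rng.gauss(0.0, sigma) for t in total]
    return [x / len(updates) for x in noisy]


if __name__ == "__main__":
    rng = random.Random(0)
    grads = [[3.0, 4.0], [0.0, 0.5], [-1.0, 2.0]]
    print(dp_aggregate(grads, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping bounds how much any single example (or client) can move the aggregate. That bound is what allows Gaussian noise of a fixed scale to mask each contribution. A larger `noise_multiplier` gives stronger privacy at the cost of noisier updates, which is the privacy-utility trade-off discussed above.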

翻译:自然语言处理(NLP)技术可用于帮助诊断抑郁等医疗条件,使用个人言语的集合。抑郁是一种严重的医疗疾病,可能对人们的感受、思想和行为产生不利影响,可能导致情感和身体问题。由于这些数据的敏感性,在处理和培训数据模型时需要采用隐私措施。在这项工作中,我们研究了应用差异隐私(DP)在集中和联邦学习(FL)系统中对背景化语言模型(BERT、ALBERT、ROBERTA和DTILBERT)的培训所产生的影响。我们就如何私下培训NLP模型以及哪些架构和设置提供了更可取的隐私效用权衡提出了见解。我们设想在未来的卫生保健和心理健康研究中使用这项工作,以保持医疗史的隐私。因此,我们提供了这项工作的公开实施。