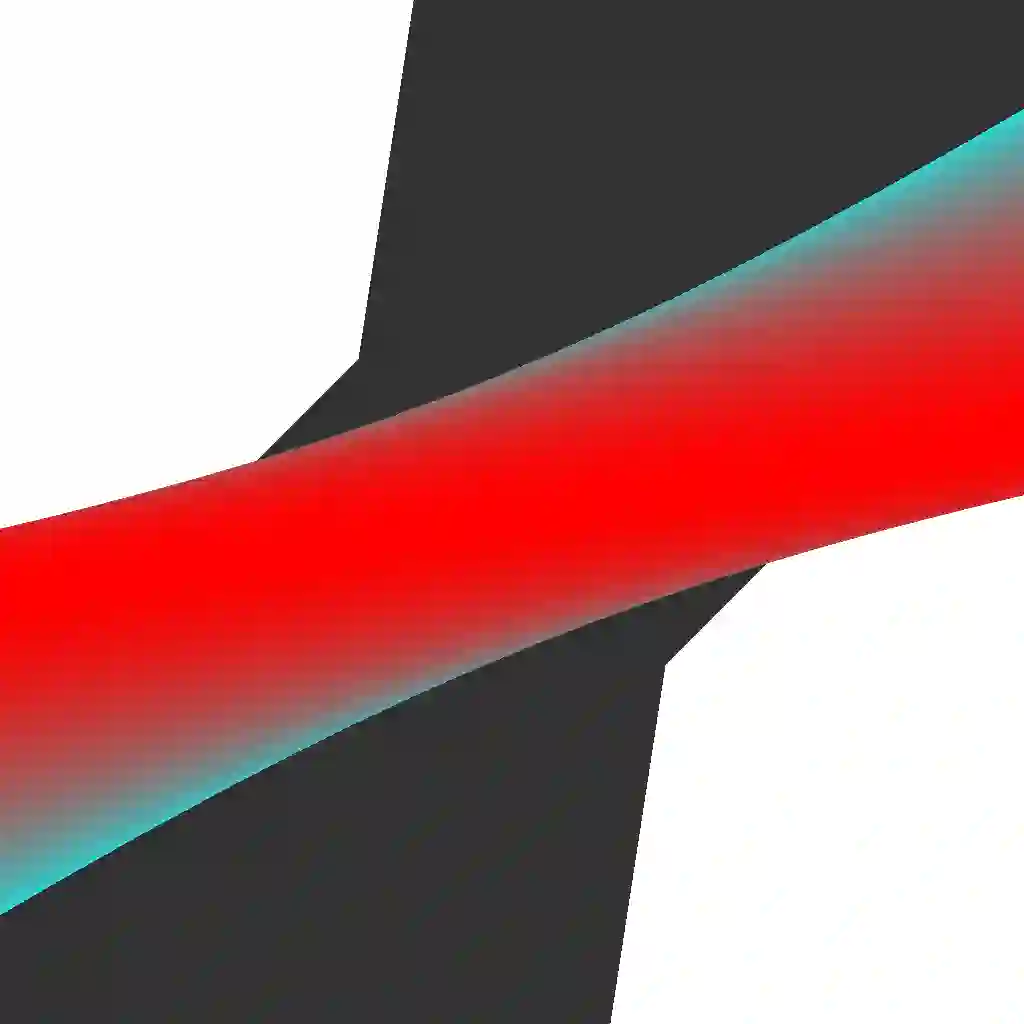

Inferring representations of 3D scenes from 2D observations is a fundamental problem in computer graphics, computer vision, and artificial intelligence. Emerging 3D-structured neural scene representations are a promising approach to 3D scene understanding. In this work, we propose a novel neural scene representation, Light Field Networks or LFNs, which represent both geometry and appearance of the underlying 3D scene in a 360-degree, four-dimensional light field parameterized via a neural implicit representation. Rendering a ray from an LFN requires only a *single* network evaluation, as opposed to hundreds of evaluations per ray for the ray-marching or volumetric renderers used with 3D-structured neural scene representations. In the setting of simple scenes, we leverage meta-learning to learn a prior over LFNs that enables multi-view consistent light field reconstruction from as little as a single image observation. This results in dramatic reductions in time and memory complexity, and enables real-time rendering. The cost of storing a 360-degree light field via an LFN is two orders of magnitude lower than with conventional methods such as the Lumigraph. Utilizing the analytical differentiability of neural implicit representations and a novel parameterization of light space, we further demonstrate the extraction of sparse depth maps from LFNs.
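To make the "single network evaluation per ray" idea concrete, the following is a minimal sketch and not the authors' implementation. The abstract does not name the ray parameterization; the published paper uses 6D Plücker coordinates, which is what this sketch assumes. The network is a hypothetical, untrained stand-in with fixed random weights, and the names `plucker`, `make_mlp`, `mlp_forward`, and `render_ray` are illustrative only.

```python
import math
import random

def normalize(v):
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def plucker(origin, direction):
    """Map a ray to 6D Plücker coordinates (d, o x d), with d of unit length.

    Assumption: this is the parameterization from the published paper;
    the abstract itself only mentions a "novel parameterization".
    """
    d = normalize(direction)
    return d + cross(origin, d)

def make_mlp(sizes, seed=0):
    """Hypothetical untrained MLP: random weights, zero biases."""
    rng = random.Random(seed)
    layers = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        W = [[rng.uniform(-1, 1) / math.sqrt(n_in) for _ in range(n_in)]
             for _ in range(n_out)]
        layers.append((W, [0.0] * n_out))
    return layers

def mlp_forward(layers, x):
    for i, (W, b) in enumerate(layers):
        x = [sum(w * xi for w, xi in zip(row, x)) + bi
             for row, bi in zip(W, b)]
        if i < len(layers) - 1:
            x = [max(0.0, v) for v in x]           # ReLU on hidden layers
    return [1.0 / (1.0 + math.exp(-v)) for v in x]  # sigmoid -> RGB in (0, 1)

def render_ray(layers, origin, direction):
    """One forward pass per ray: no sampling of points along the ray."""
    return mlp_forward(layers, plucker(origin, direction))

layers = make_mlp([6, 16, 3])
# Two different origins on the same ray, with differently scaled directions.
c1 = render_ray(layers, (1.0, 2.0, -2.0), (0.0, 0.0, 1.0))
c2 = render_ray(layers, (1.0, 2.0, 1.0), (0.0, 0.0, 2.0))
print(c1, c2)
```

Because the Plücker moment `o x d` does not change when the origin slides along the ray, both calls feed the network the same input and return the same color. A ray-marching renderer would instead query its network at many sample points along each ray.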
