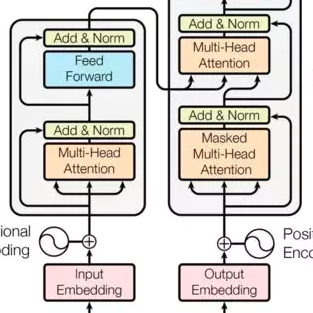

This paper describes the system submitted by Huawei Noah's Ark Lab to the IWSLT 2021 Multilingual Speech Translation (MultiST) task. We use a unified Transformer architecture for our MultiST model, so that data from different modalities (i.e., speech and text) and different tasks (i.e., speech recognition, machine translation, and speech translation) can be jointly exploited to improve the model. Specifically, speech and text inputs are first fed to separate feature extractors, which extract acoustic and textual features, respectively. These features are then processed by a shared encoder-decoder architecture. We apply several training techniques to improve performance, including multi-task learning, task-level curriculum learning, and data augmentation. Our final system achieves significantly better results than bilingual baselines on supervised language pairs and yields reasonable results on zero-shot language pairs.
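The data flow described above (modality-specific feature extractors feeding one shared encoder-decoder) can be sketched in plain Python. This is a toy illustration under stated assumptions, not the submitted system: the real model uses Transformer layers, whereas here the acoustic extractor simply averages pairs of frames, the textual extractor is an embedding lookup, and the encoder and decoder are trivial stand-ins. All names (`acoustic_extractor`, `make_text_extractor`, `UnifiedModel`, `toy_encoder`, `toy_decoder`) are hypothetical, and signalling the task through a target-language tag is an assumption not stated in the abstract.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict, List, Sequence

Features = List[List[float]]


def acoustic_extractor(frames: Sequence[Sequence[float]], stride: int = 2) -> Features:
    # Hypothetical acoustic front end: average non-overlapping windows of
    # `stride` frames, standing in for convolutional subsampling of speech.
    return [
        [sum(col) / stride for col in zip(*frames[i:i + stride])]
        for i in range(0, len(frames) - stride + 1, stride)
    ]


def make_text_extractor(table: Dict[int, List[float]]) -> Callable[[Sequence[int]], Features]:
    # Hypothetical textual front end: a plain token-embedding lookup.
    return lambda token_ids: [list(table[t]) for t in token_ids]


@dataclass
class UnifiedModel:
    extractors: Dict[str, Callable[[Any], Features]]  # one per modality
    encoder: Callable[[Features], Features]           # shared by all inputs
    decoder: Callable[[Features, str], str]           # shared by all tasks

    def forward(self, modality: str, inputs: Any, target_lang: str) -> str:
        features = self.extractors[modality](inputs)  # modality-specific step
        memory = self.encoder(features)               # shared encoder
        # Assumption: the task is signalled by the target-language tag, so
        # ASR (target = source language), MT and ST all use one decoder.
        return self.decoder(memory, target_lang)


calls: List[int] = []


def toy_encoder(features: Features) -> Features:
    calls.append(len(features))  # record every use of the shared encoder
    return features


def toy_decoder(memory: Features, target_lang: str) -> str:
    return f"<{target_lang}> {len(memory)} states"


model = UnifiedModel(
    extractors={
        "speech": acoustic_extractor,
        "text": make_text_extractor({1: [0.1, 0.2], 2: [0.3, 0.4]}),
    },
    encoder=toy_encoder,
    decoder=toy_decoder,
)

print(model.forward("speech", [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]], "de"))  # ST → <de> 2 states
print(model.forward("text", [1, 2, 2], "fr"))  # MT → <fr> 3 states
print(calls)  # → [2, 3]
```

Because only the front ends differ by modality, every speech recognition, machine translation, and speech translation example passes through the same encoder and decoder, which is what allows text-only data to help the speech translation task during multi-task training.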
