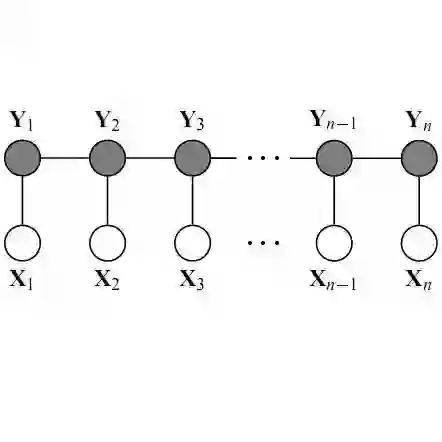

Self-attention has become prevalent in computer vision models. Inspired by fully connected Conditional Random Fields (CRFs), we decompose it into local and context terms. These correspond to the unary and binary terms in a CRF and are implemented by attention mechanisms with projection matrices. We observe that the unary terms make only small contributions to the outputs, while standard CNNs, which rely solely on the unary terms, achieve strong performance on a variety of tasks. Therefore, we propose Locally Enhanced Self-Attention (LESA), which enhances the unary term by combining it with convolutions and uses a fusion module to dynamically couple the unary and binary operations. In our experiments, we replace the self-attention modules with LESA. The results on ImageNet and COCO show that LESA outperforms convolution and self-attention baselines on image recognition, object detection, and instance segmentation. The code is publicly available.
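
The decomposition into local and context terms can be illustrated with a minimal NumPy sketch. It assumes that the local (unary) term is the part of the attention output that each position receives from itself, and that the context (binary) term is the part it receives from all other positions; the abstract does not spell out the exact formulation, so this reading is an assumption. The function name `decompose_self_attention`, the single-head setup, and the random inputs are illustrative and are not taken from the released code. The convolutional enhancement and the fusion module of LESA are not modeled here.

```python
import numpy as np


def softmax(x, axis=-1):
    """Numerically stable softmax along the given axis."""
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def decompose_self_attention(x, w_q, w_k, w_v):
    """Split single-head self-attention output into local and context parts.

    Assumption (not confirmed by the abstract): the local term is the
    contribution of each position to its own output, and the context term
    is the contribution of every other position.

    x:              (n, d) features for n positions
    w_q, w_k, w_v:  (d, d) projection matrices
    Returns (local, context, attn) where local + context == attn @ (x @ w_v).
    """
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    attn = softmax(q @ k.T / np.sqrt(k.shape[-1]))  # (n, n), rows sum to 1
    self_weight = np.diag(attn)                     # weight of position i on itself
    local = self_weight[:, None] * v                # diagonal (self) contribution
    context = (attn - np.diag(self_weight)) @ v     # off-diagonal contribution
    return local, context, attn


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    n, d = 16, 8
    x = rng.normal(size=(n, d))
    w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))
    local, context, attn = decompose_self_attention(x, w_q, w_k, w_v)
    # The two parts add up to the ordinary self-attention output.
    print(np.allclose(local + context, attn @ (x @ w_v)))
    # Average attention weight that a position places on itself.
    print(float(np.diag(attn).mean()))
```

Because each row of the attention matrix sums to one over all positions, the weight a position places on itself is only one share among many, which fits the abstract's observation that the unary term contributes little in plain self-attention.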
