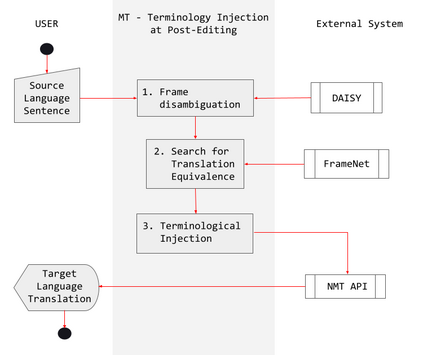

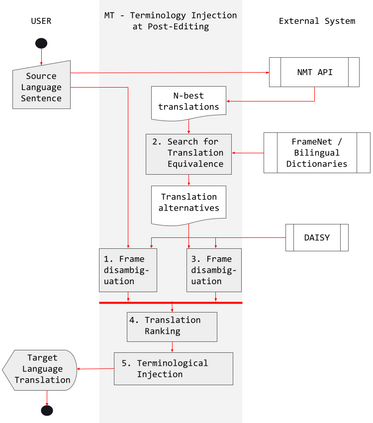

In this paper we present Scylla, a methodology for domain adaptation of Neural Machine Translation (NMT) systems that makes use of a multilingual FrameNet enriched with qualia relations as an external knowledge base. Domain adaptation techniques used in NMT usually require fine-tuning and in-domain training data, which may pose difficulties for those working with lesser-resourced languages and may also degrade the NMT system's performance on out-of-domain sentences. Scylla does not require fine-tuning of the NMT model, avoiding the risk of model over-fitting and the consequent decrease in performance for out-of-domain translations. Two versions of Scylla are presented: one using the source sentence as input, and another using the target sentence. We evaluate Scylla against a state-of-the-art commercial NMT system in an experiment in which 50 sentences from the Sports domain are translated from Brazilian Portuguese to English. Both versions of Scylla significantly outperform the baseline commercial system in HTER (Human-targeted Translation Edit Rate).
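To make the evaluation metric concrete, the following is a minimal sketch of an HTER-style score: the number of word-level edits needed to turn the machine output into its human post-edited version, divided by the length of the post-edited version. It is a simplification and not the evaluation code used for Scylla: standard TER also counts block shifts as single edits, which this sketch omits, so it can overestimate the true score. The function names and the example sentences are hypothetical.

```python
def word_edit_distance(hyp, ref):
    """Levenshtein distance over word lists (insert, delete, substitute)."""
    prev = list(range(len(ref) + 1))  # distance from empty hyp to ref[:j]
    for i, h in enumerate(hyp, 1):
        curr = [i]  # distance from hyp[:i] to empty ref
        for j, r in enumerate(ref, 1):
            cost = 0 if h == r else 1
            curr.append(min(prev[j] + 1,          # delete h
                            curr[j - 1] + 1,      # insert r
                            prev[j - 1] + cost))  # match or substitute
        prev = curr
    return prev[-1]


def approx_hter(mt_output, post_edited):
    """Approximate HTER: word edits / post-edited length (no shift edits)."""
    hyp = mt_output.split()
    ref = post_edited.split()
    if not ref:
        raise ValueError("post-edited reference must not be empty")
    return word_edit_distance(hyp, ref) / len(ref)


# Hypothetical example: one missing word in a five-word post-edit.
score = approx_hter("the player kicked ball", "the player kicked the ball")
print(f"{score:.2f}")
```

Lower values indicate that fewer post-edits were needed, so a system that outperforms a baseline in HTER has the lower score.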
