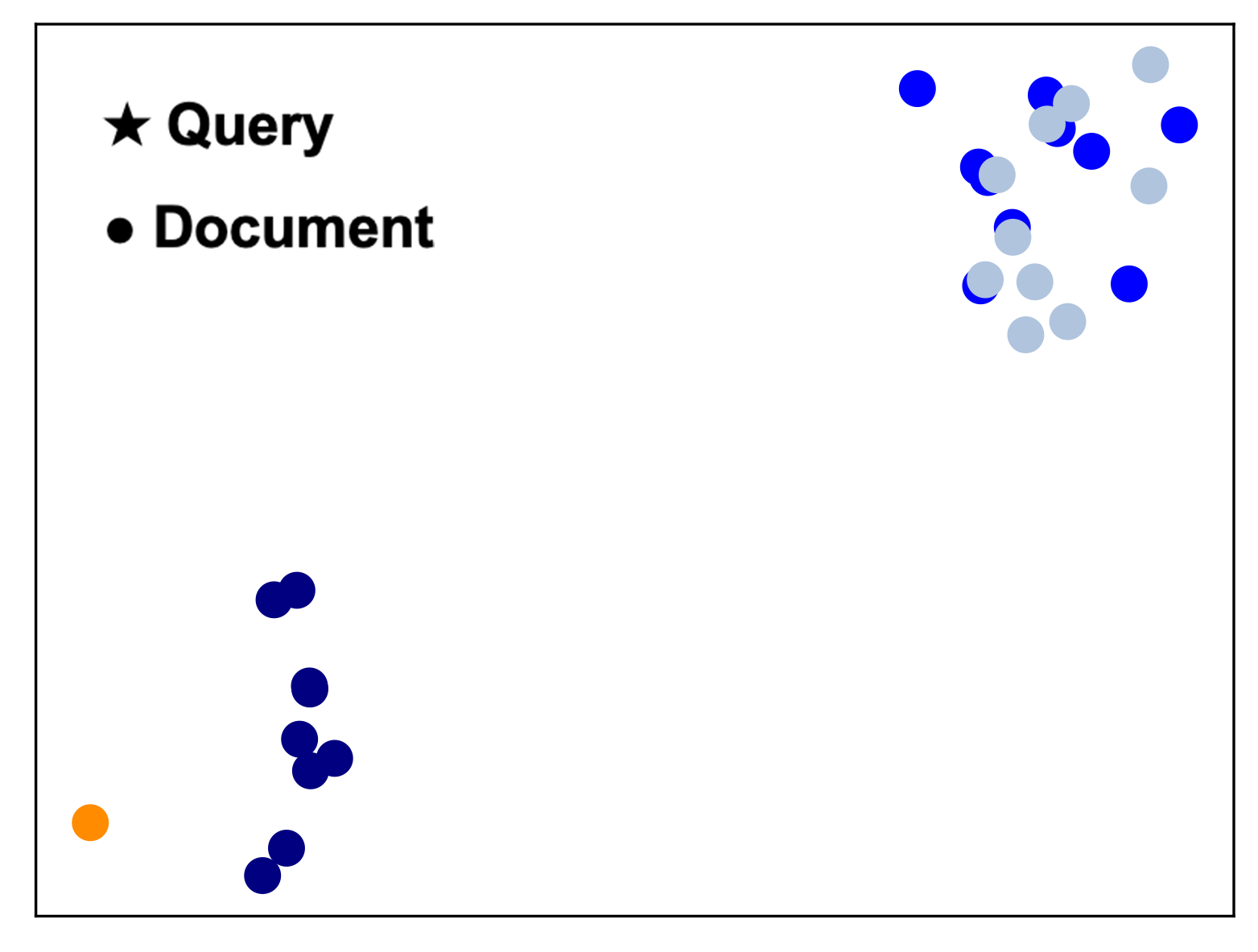

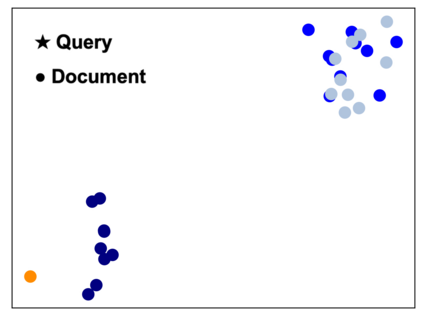

Dense retrievers use pre-trained language models to encode texts and map them into an embedding space. Keeping these embeddings high-dimensional is critical for training dense retrievers effectively, but it leads to high costs for storing the index and performing retrieval. To reduce the embedding dimensions of dense retrieval, this paper proposes a Conditional Autoencoder (ConAE) that compresses the high-dimensional embeddings while maintaining the same embedding distribution and better recovering the ranking features. Our experiments show that ConAE compresses embeddings effectively: the compressed embeddings achieve ranking performance comparable to the raw ones, making the retrieval system more efficient. Further analyses show that ConAE can mitigate the redundancy of dense retrieval embeddings using only one linear layer. All code for this work is available at https://github.com/NEUIR/ConAE.
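The core idea of compressing embeddings with a single linear layer while keeping the ranking distribution close to that of the raw embeddings can be illustrated with a small sketch. This is a hypothetical, dependency-free illustration, not the ConAE implementation. It assumes a bias-free projection `W` from `d` to `k` dimensions and measures distribution drift as the KL divergence between softmax ranking distributions computed from raw and compressed embeddings. The function names, the toy dimensions, and the choice of KL divergence as the matching signal are all assumptions. The paper's actual training objective and its conditional decoder are not reproduced here.

```python
import math
import random


def linear(W, x):
    """Bias-free linear layer: project x (length d) to len(W) dimensions."""
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def rank_distribution(query, docs):
    """Softmax over query-document dot products, i.e. a ranking distribution."""
    return softmax([dot(query, d) for d in docs])


def kl_divergence(p, q):
    """KL(p || q) for two distributions over the same candidate documents."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def compression_kl(W, query, docs):
    """How far the ranking distribution drifts after compressing with W.

    Hypothetical helper: one possible way to quantify "maintaining the
    same embedding distribution"; not necessarily ConAE's objective.
    """
    raw = rank_distribution(query, docs)
    compressed = rank_distribution(linear(W, query), [linear(W, d) for d in docs])
    return kl_divergence(raw, compressed)


# Toy data (hypothetical): one 8-dim query and five 8-dim document embeddings.
random.seed(0)
d, k = 8, 4
query = [random.gauss(0, 1) for _ in range(d)]
docs = [[random.gauss(0, 1) for _ in range(d)] for _ in range(5)]

# An identity "compression" keeps every dot product, so the KL term is exactly 0.
identity = [[1.0 if i == j else 0.0 for j in range(d)] for i in range(d)]
kl_identity = compression_kl(identity, query, docs)

# A random, untrained 8 -> 4 projection generally distorts the ranking distribution.
W = [[random.gauss(0, 1 / math.sqrt(k)) for _ in range(d)] for _ in range(k)]
kl_random = compression_kl(W, query, docs)

print(kl_identity, kl_random)
```

In a real training setup, `W` would be optimized to minimize a term like `compression_kl` over many queries, typically with an autodiff framework. That loop is omitted here to keep the sketch self-contained. The repository linked above contains the authors' actual code.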
