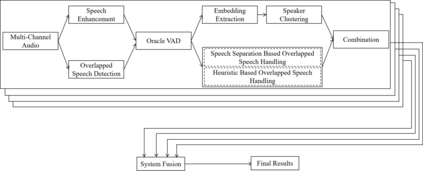

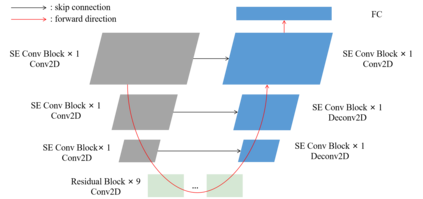

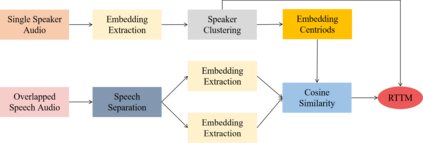

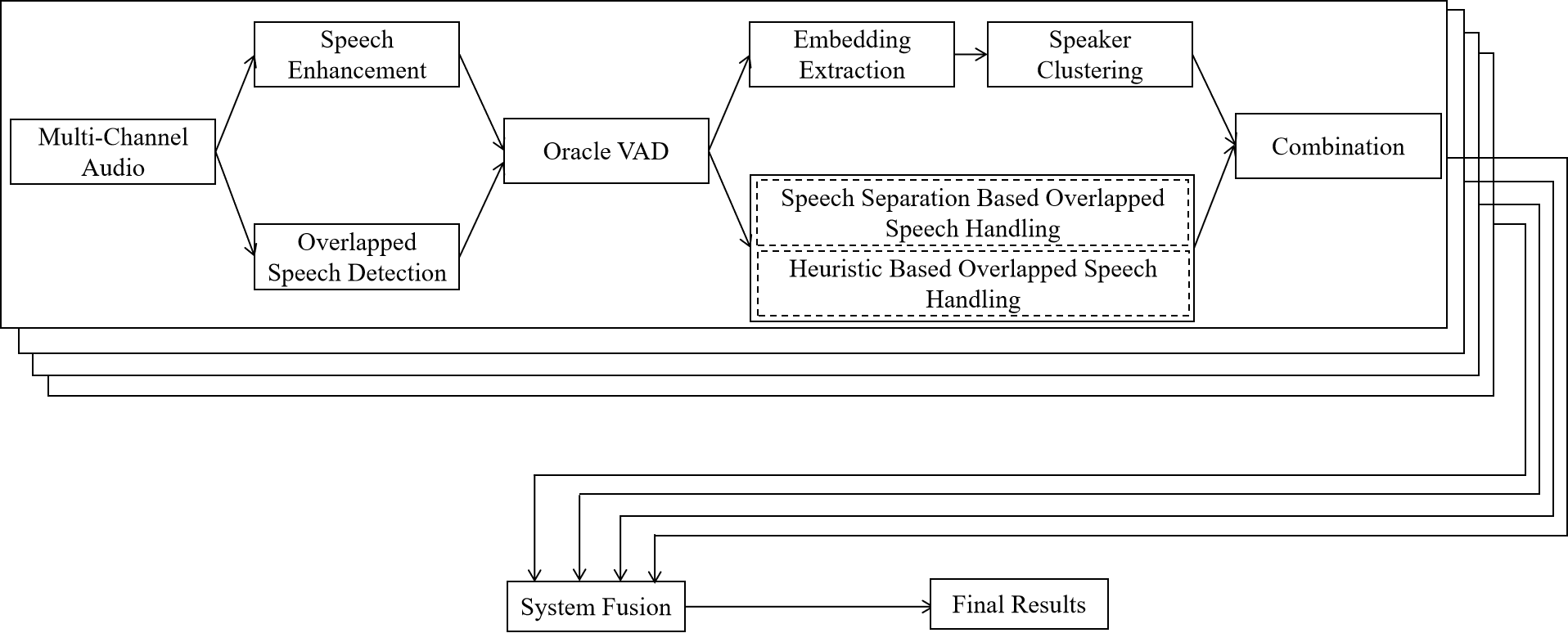

This paper describes the Royalflush speaker diarization system submitted to the Multi-channel Multi-party Meeting Transcription Challenge (M2MeT). The system comprises speech enhancement, overlapped speech detection, speaker embedding extraction, speaker clustering, speech separation, and system fusion. We make three contributions. First, we propose an architecture for far-field overlapped speech detection that combines multi-channel and U-Net-based models to exploit the benefits of both. Second, to let the overlapped speech detection model assist speaker diarization, we propose a speech-separation-based approach to handling overlapped speech, in which a speaker verification technique is further applied. Third, we explore three speaker embedding methods and obtain state-of-the-art performance on the CNCeleb-E test set. With these proposals, our best individual system reduces the diarization error rate (DER) from 15.25% to 6.40%, and the fusion of four systems achieves a DER of 6.30% on the far-field Alimeeting evaluation set.
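For readers unfamiliar with the metric, the reported figures can be put in context with a minimal sketch. It assumes the conventional definition of DER (false alarm, missed speech, and speaker confusion time divided by total reference speech time); the abstract does not state the scoring configuration used in the challenge, and the function names and the durations in the first example are hypothetical.

```python
def diarization_error_rate(false_alarm, missed, confusion, total_speech):
    """Conventional DER: summed error durations over total reference speech time.

    All arguments are durations in seconds. This is the standard textbook
    definition, assumed here; it is not taken from the paper's scoring setup.
    """
    if total_speech <= 0:
        raise ValueError("total_speech must be positive")
    return (false_alarm + missed + confusion) / total_speech


def relative_reduction(before, after):
    """Fractional reduction when going from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return (before - after) / before


# Hypothetical durations, for illustration only: 6 s of errors in 100 s of speech.
example_der = diarization_error_rate(1.0, 2.0, 3.0, 100.0)
print(f"example DER: {100 * example_der:.2f}%")

# DER values (in percent) quoted in the abstract.
starting_der = 15.25
best_single_der = 6.40
fusion_der = 6.30

print(f"best single system: {100 * relative_reduction(starting_der, best_single_der):.1f}% relative reduction")
print(f"four-system fusion: {100 * relative_reduction(starting_der, fusion_der):.1f}% relative reduction")
```

Under these assumptions, the drop from 15.25% to 6.40% corresponds to roughly a 58% relative reduction in DER, and the four-system fusion adds only a small further gain.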
