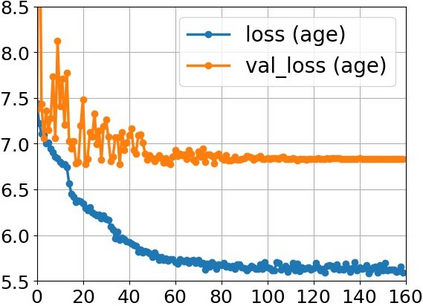

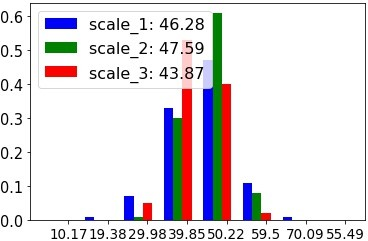

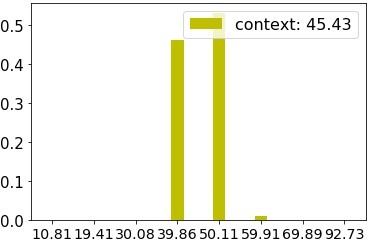

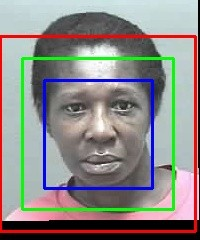

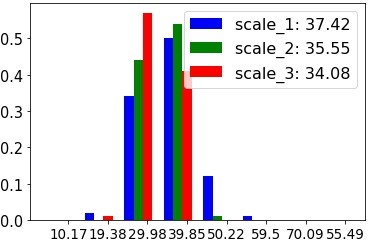

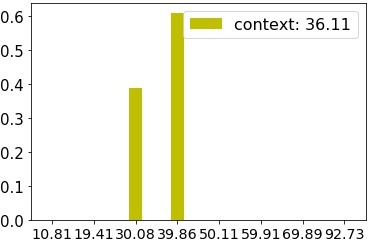

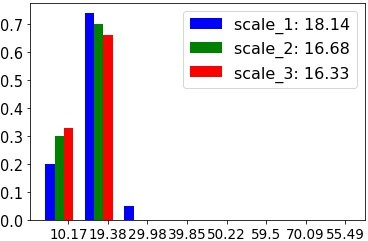

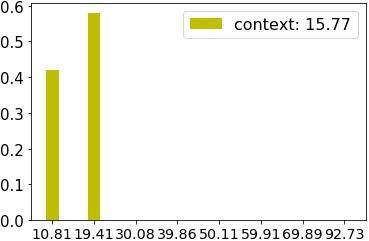

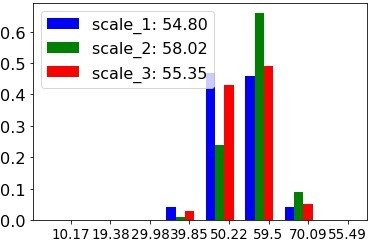

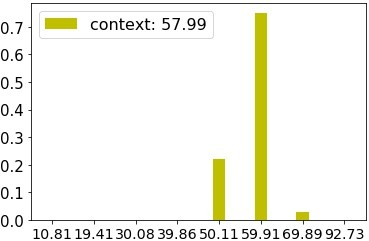

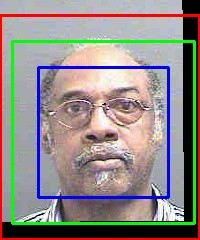

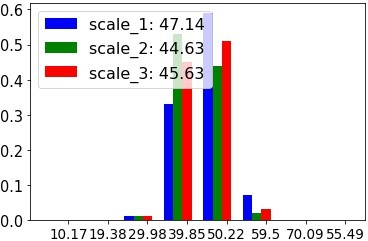

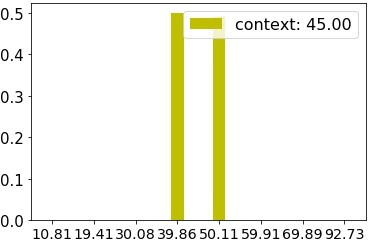

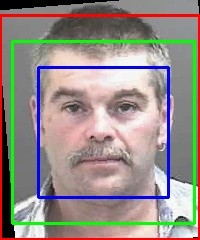

Age estimation is a classic learning problem in computer vision. Many larger and deeper CNNs with promising performance have been proposed, such as AlexNet, VggNet, GoogLeNet and ResNet. However, these models are not practical for embedded/mobile devices. Recently, MobileNets and ShuffleNets have been proposed to reduce the number of parameters, yielding lightweight models. However, their representational power is weakened by the adoption of depth-wise separable convolution. In this work, we investigate the limits of compact models for small-scale images and propose an extremely **C**ompact yet efficient **C**ascade **C**ontext-based **A**ge **E**stimation model (**C3AE**). This model has only 1/9 the parameters of MobileNets/ShuffleNets and 1/2000 those of VggNet, while achieving competitive performance. In particular, we re-define the age estimation problem using a two-points representation, which is implemented by a cascade model. Moreover, to fully utilize facial context information, a multi-branch CNN is proposed to aggregate multi-scale context. Experiments are carried out on three age estimation datasets. The model achieves state-of-the-art performance among compact models by a relatively large margin.
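The abstract does not spell out the two-points representation, so the following is only a minimal sketch of one plausible reading: an age is encoded as a convex combination of the two adjacent bin anchors that bracket it. The function names `two_point_encoding` and `decode_age`, the bin width of 10 years, and the maximum age of 100 are all assumptions made for illustration, not values taken from the paper.

```python
def two_point_encoding(age, bin_width=10, max_age=100):
    """Encode an age as weights on two adjacent bin anchors.

    Assumed scheme: anchors sit at 0, bin_width, 2*bin_width, ..., max_age,
    and the age is written as a convex combination of the two anchors
    that bracket it. All other weights are zero.
    """
    if max_age % bin_width != 0:
        raise ValueError("max_age must be a multiple of bin_width")
    if not 0 <= age <= max_age:
        raise ValueError("age must lie in [0, max_age]")

    num_bins = max_age // bin_width + 1
    weights = [0.0] * num_bins

    # Index of the lower anchor; clamp so that age == max_age still
    # has a valid upper neighbour.
    lower = min(int(age // bin_width), num_bins - 2)
    # Fraction of the way from the lower anchor to the upper anchor.
    frac = (age - lower * bin_width) / bin_width

    weights[lower] = 1.0 - frac
    weights[lower + 1] = frac
    return weights


def decode_age(weights, bin_width=10):
    """Recover the age as the weighted sum of the anchor values."""
    return sum(w * i * bin_width for i, w in enumerate(weights))


if __name__ == "__main__":
    w = two_point_encoding(68)
    print(w)
    print(decode_age(w))
```

Under these assumptions, an age of 68 puts a weight of roughly 0.2 on the anchor at 60 and 0.8 on the anchor at 70, and decoding the weights recovers the original age up to floating-point error. A target of this form is a distribution with at most two non-zero entries, which is one way a cascade could first predict a distribution and then regress a scalar age from it.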
