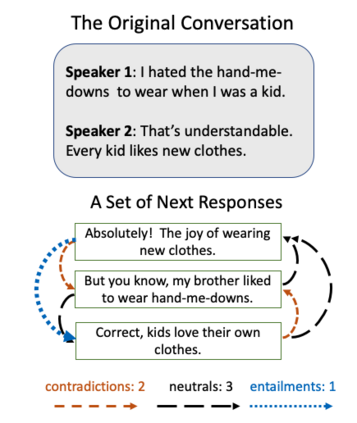

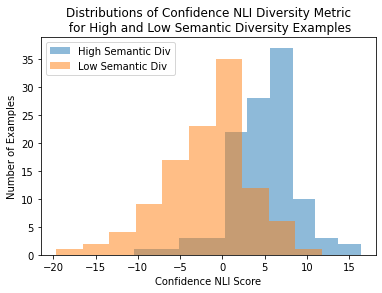

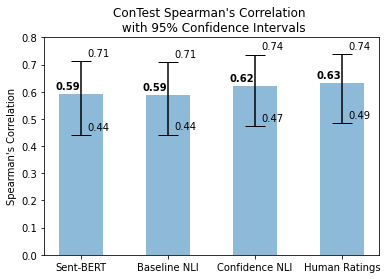

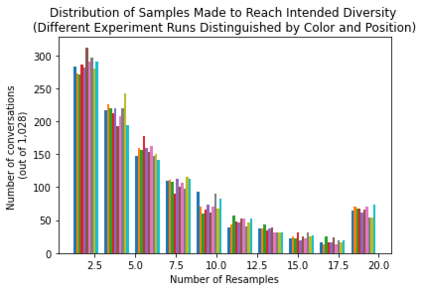

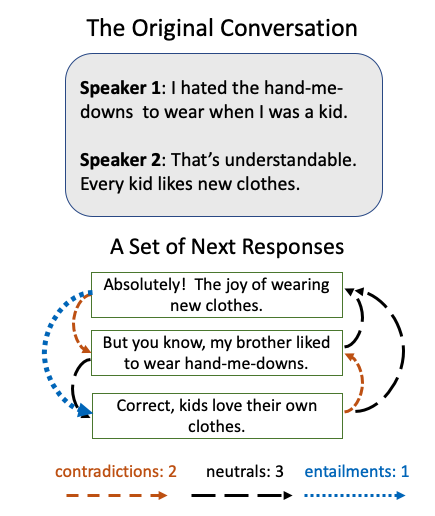

Generating diverse, interesting responses to chitchat conversations is a problem for neural conversational agents. This paper makes two substantial contributions to improving diversity in dialogue generation. First, we propose a novel metric that uses Natural Language Inference (NLI) to measure the semantic diversity of a set of model responses for a conversation. We evaluate this metric using an established framework (Tevet and Berant, 2021) and find strong evidence that NLI Diversity is correlated with semantic diversity. Specifically, we show that the contradiction relation is more useful than the neutral relation for measuring this diversity, and that incorporating the NLI model's confidence achieves state-of-the-art results. Second, we demonstrate how to iteratively improve the semantic diversity of a sampled set of responses via a new generation procedure called Diversity Threshold Generation, which results in an average 137% increase in NLI Diversity compared to standard generation procedures.
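The abstract does not spell out how NLI Diversity is scored or how Diversity Threshold Generation resamples, so the following is only a minimal sketch under stated assumptions. It assumes contradiction counts as +1, neutral as 0, and entailment as -1 over all ordered response pairs, and that the confidence variant scales each weight by the predicted label's probability. The resampling loop assumes the least useful response is replaced until a threshold is met. The names `nli_diversity`, `diversity_threshold_generation`, `NLIModel`, and `toy_nli` are hypothetical, and `toy_nli` is a stand-in for a real NLI classifier.

```python
from itertools import permutations
from typing import Callable, Dict, List, Sequence

# Assumed label weights (illustrative, not taken from the abstract):
# contradiction raises diversity, entailment lowers it, neutral is ignored.
LABEL_WEIGHTS: Dict[str, float] = {
    "contradiction": 1.0,
    "neutral": 0.0,
    "entailment": -1.0,
}

# Hypothetical interface: (premise, hypothesis) -> label probabilities.
NLIModel = Callable[[str, str], Dict[str, float]]


def nli_diversity(responses: Sequence[str], nli: NLIModel,
                  use_confidence: bool = False) -> float:
    """Sum the assumed label weights over every ordered pair of responses."""
    score = 0.0
    for premise, hypothesis in permutations(responses, 2):
        probs = nli(premise, hypothesis)
        label = max(probs, key=probs.get)  # most probable NLI label
        weight = LABEL_WEIGHTS[label]
        # Confidence variant: scale the weight by the label's probability.
        score += weight * probs[label] if use_confidence else weight
    return score


def diversity_threshold_generation(sample: Callable[[], str], nli: NLIModel,
                                   threshold: float, n: int = 5,
                                   max_steps: int = 20) -> List[str]:
    """Resample one response at a time until the set reaches the threshold.

    Assumption: the response to replace is the one whose removal leaves the
    most diverse remainder. The abstract does not specify this rule.
    """
    responses = [sample() for _ in range(n)]
    for _ in range(max_steps):
        if nli_diversity(responses, nli) >= threshold:
            break
        worst = max(
            range(n),
            key=lambda i: nli_diversity(responses[:i] + responses[i + 1:], nli),
        )
        responses[worst] = sample()
    return responses


def toy_nli(premise: str, hypothesis: str) -> Dict[str, float]:
    """Stand-in classifier: identical strings entail, all others contradict."""
    if premise == hypothesis:
        return {"entailment": 0.9, "neutral": 0.05, "contradiction": 0.05}
    return {"contradiction": 0.8, "neutral": 0.15, "entailment": 0.05}
```

In practice `toy_nli` would be replaced by a trained NLI model and `sample` by a call into the dialogue model's decoder; the threshold and set size are tuning choices that this sketch leaves open.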
