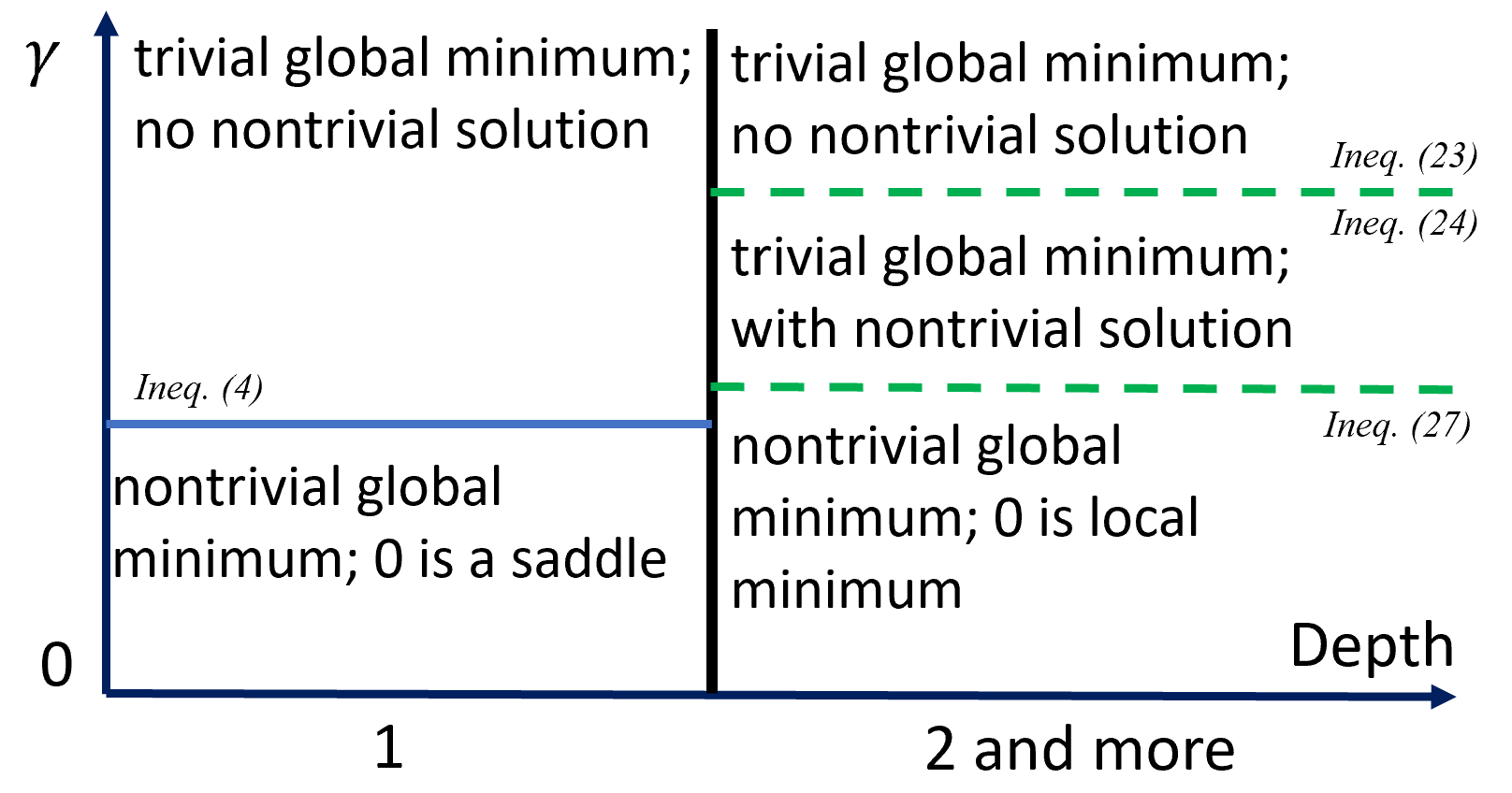

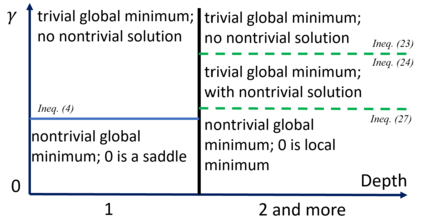

This work finds the exact solutions to a deep linear network with weight decay and stochastic neurons, a fundamental model for understanding the landscape of neural networks. Our result implies that weight decay strongly interacts with the model architecture and can create bad minima in a network with more than $1$ hidden layer, which is qualitatively different from a network with only $1$ hidden layer. As an application, we also analyze stochastic nets and show that their prediction variance vanishes as the stochasticity, the width, or the depth tends to infinity.
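The depth dependence described above can be illustrated with a toy calculation. The sketch below is not the paper's model or derivation: it assumes a scalar (width-1) deterministic linear network with a single data point `x = y = 1` and weight decay `gamma = 0.1`, and it only inspects the curvature of the loss at the all-zero weights. The function names `loss` and `hessian_at_origin` are hypothetical and chosen for illustration.

```python
import numpy as np

def loss(w, x=1.0, y=1.0, gamma=0.1):
    """Squared error of a scalar deep linear net plus L2 weight decay.

    Assumed toy objective: (w_1 * ... * w_D * x - y)^2 + gamma * sum_i w_i^2.
    """
    w = np.asarray(w, dtype=float)
    return (np.prod(w) * x - y) ** 2 + gamma * np.sum(w ** 2)

def hessian_at_origin(n_weights, x=1.0, y=1.0, gamma=0.1, h=1e-3):
    """Central finite-difference Hessian of `loss` at w = 0."""
    H = np.zeros((n_weights, n_weights))
    steps = np.eye(n_weights) * h  # row i is a step of size h along weight i
    for i in range(n_weights):
        for j in range(n_weights):
            H[i, j] = (
                loss(steps[i] + steps[j], x, y, gamma)
                - loss(steps[i] - steps[j], x, y, gamma)
                - loss(-steps[i] + steps[j], x, y, gamma)
                + loss(-steps[i] - steps[j], x, y, gamma)
            ) / (4 * h * h)
    return H

# 1 hidden layer = 2 weights; 2 hidden layers = 3 weights.
eig_one_hidden = np.linalg.eigvalsh(hessian_at_origin(2))
eig_two_hidden = np.linalg.eigvalsh(hessian_at_origin(3))

print("1 hidden layer, Hessian eigenvalues at 0:", eig_one_hidden)
print("2 hidden layers, Hessian eigenvalues at 0:", eig_two_hidden)
print("loss at 0:", loss([0.0, 0.0, 0.0]))
print("loss at (0.9, 0.9, 0.9):", loss([0.9, 0.9, 0.9]))
```

In this toy setting, the one-hidden-layer Hessian at zero has a negative eigenvalue, so zero is a saddle. With two hidden layers the data term has no second-order contribution at zero, so the Hessian reduces to the weight-decay term and zero becomes a local minimum, even though a point with lower loss exists elsewhere.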
