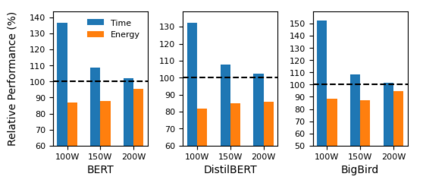

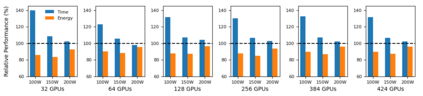

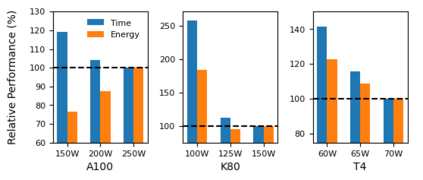

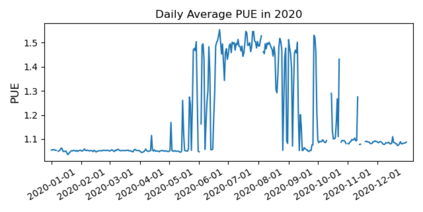

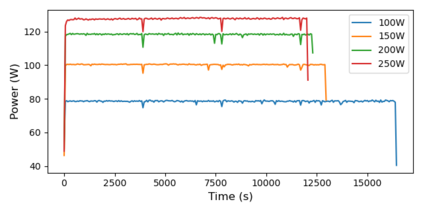

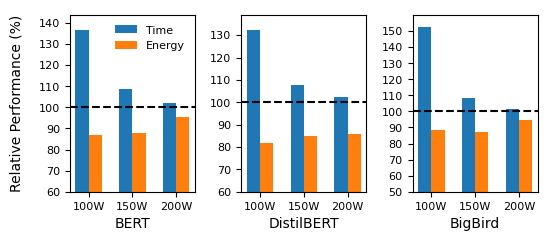

The energy requirements of current natural language processing (NLP) models continue to grow at a rapid, unsustainable pace. Recent work highlighting this problem concludes that there is an urgent need for methods that reduce the energy needs of NLP, and of machine learning more broadly. In this article, we investigate techniques that can be used to reduce the energy consumption of common NLP applications. In particular, we focus on techniques for measuring energy usage and on hardware and datacenter-oriented settings that can be tuned to reduce energy consumption when training language models and running inference with them. We characterize the impact of these settings on metrics such as computational performance and energy consumption through experiments conducted on a high-performance computing system as well as on popular cloud computing platforms. These techniques can lead to significant reductions in energy consumption for both training and inference. For example, power-capping, which limits the maximum power a GPU can draw, can decrease energy usage by 15% with only a marginal increase in overall computation time when training a transformer-based language model.
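The trade-off behind power-capping follows from the fact that energy is power integrated over time, so a lower average power draw can outweigh a modestly longer runtime. The sketch below is illustrative only, not the authors' measurement code: it assumes power is sampled periodically (for example from `nvidia-smi --query-gpu=power.draw --format=csv`), integrates the samples with the trapezoidal rule, and computes the relative energy change for hypothetical power and runtime ratios. The function names and the numbers are assumptions chosen to show the arithmetic, not results from the article.

```python
# Sketch: energy E = ∫ P(t) dt, and how a power cap trades power for time.
# Function names and example numbers are hypothetical, for illustration only.


def energy_joules(timestamps_s, power_w):
    """Integrate sampled power (watts) over time (seconds) with the trapezoidal rule."""
    if len(timestamps_s) != len(power_w) or len(power_w) < 2:
        raise ValueError("need at least two matching (time, power) samples")
    total = 0.0
    for i in range(1, len(power_w)):
        dt = timestamps_s[i] - timestamps_s[i - 1]
        # Average of the two neighbouring samples times the interval length.
        total += 0.5 * (power_w[i] + power_w[i - 1]) * dt
    return total


def relative_energy_change(power_ratio, time_ratio):
    """Fractional energy change when average power is scaled by power_ratio
    and runtime by time_ratio, using E = P_avg * T."""
    return power_ratio * time_ratio - 1.0


# Hypothetical case: the cap lowers average draw to 80% of the uncapped run
# while the job takes 6% longer.
change = relative_energy_change(0.80, 1.06)
print(f"{change:+.1%}")  # prints -15.2%
```

Because energy scales with the product of average power and runtime, a cap pays off whenever the fractional drop in power exceeds the fractional growth in runtime; in practice both ratios must be measured on the actual workload.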