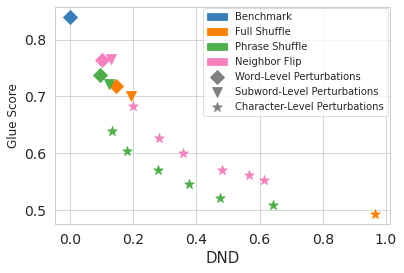

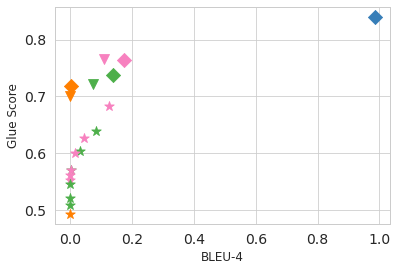

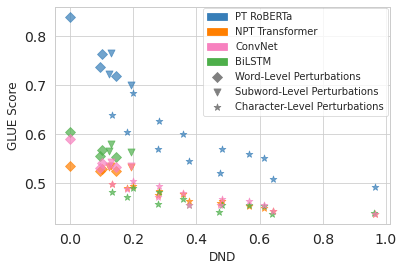

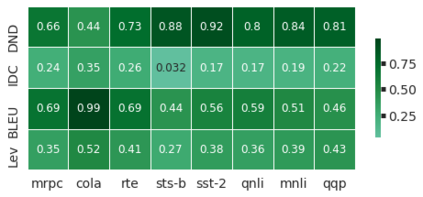

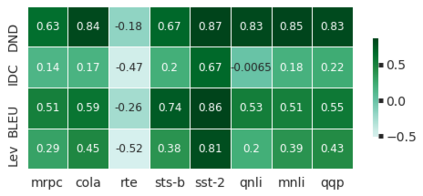

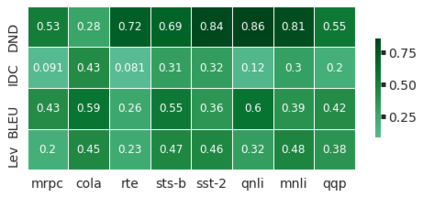

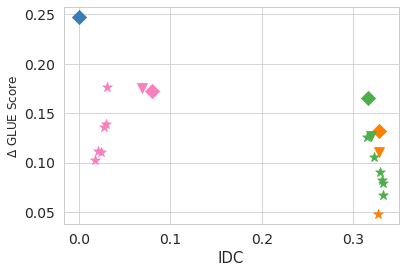

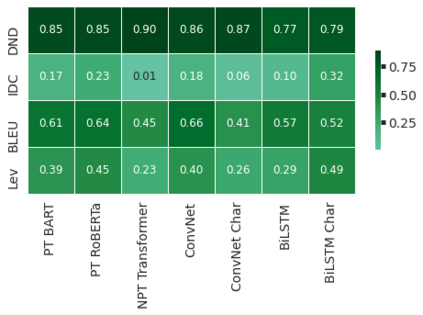

Recent research analysing the sensitivity of natural language understanding models to word-order perturbations has shown that state-of-the-art models in several language tasks may process text in ways that can seldom be explained by conventional syntax and semantics. In this paper, we investigate the insensitivity of natural language models to word order by quantifying perturbations and analysing their effect on neural models' performance on the language understanding tasks of the GLUE benchmark. To that end, we propose two metrics, the Direct Neighbour Displacement (DND) and the Index Displacement Count (IDC), that score the local and global ordering of tokens in the perturbed texts, and we observe that the perturbation functions found in prior literature affect only the global ordering while the local ordering remains relatively unperturbed. We propose perturbations at the granularity of sub-words and characters to study the correlation between DND, IDC, and the performance of neural language models on natural language tasks. We find that neural language models (pretrained and non-pretrained Transformers, LSTMs, and convolutional architectures) depend on the local ordering of tokens more than on their global ordering. The proposed metrics and the suite of perturbations offer a systematic way to study the (in)sensitivity of neural language understanding models to varying degrees of perturbation.
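The abstract does not spell out how DND and IDC are computed, so the Python sketch below assumes one plausible reading. It treats IDC as the average distance each unit (word, sub-word, or character) moves from its original index, normalized by the largest possible single move (n - 1). It treats DND as the fraction of originally adjacent pairs that are no longer adjacent and in order. Both formulas, and the helper names `shuffle_chunks` and `swap_adjacent`, are hypothetical illustrations, not the paper's definitions. The example contrasts a chunk shuffle, which mimics the global-only perturbations attributed to prior work, with adjacent swaps, which disturb local order while barely moving any unit.

```python
import random
from typing import List, Sequence

# Hypothetical sketch: the metric formulas below are assumptions,
# not the exact DND/IDC definitions from the paper.


def index_displacement_count(perm: Sequence[int]) -> float:
    """Global-order score (assumed form): mean absolute distance between each
    unit's perturbed position and its original position, divided by n - 1.

    perm[i] is the original index of the unit placed at position i.
    Returns 0.0 for an unperturbed sequence.
    """
    n = len(perm)
    if n < 2:
        return 0.0
    total = sum(abs(new_pos - orig_pos) for new_pos, orig_pos in enumerate(perm))
    return total / (n * (n - 1))


def direct_neighbour_displacement(perm: Sequence[int]) -> float:
    """Local-order score (assumed form): fraction of originally adjacent pairs
    (i, i + 1) whose second unit is no longer immediately right of the first."""
    n = len(perm)
    if n < 2:
        return 0.0
    pos = [0] * n
    for new_pos, orig_pos in enumerate(perm):
        pos[orig_pos] = new_pos  # where original unit orig_pos ended up
    broken = sum(1 for i in range(n - 1) if pos[i + 1] != pos[i] + 1)
    return broken / (n - 1)


def shuffle_chunks(n: int, k: int, rng: random.Random) -> List[int]:
    """Hypothetical global perturbation: shuffle contiguous chunks of size k.
    Every within-chunk neighbour pair stays intact."""
    chunks = [list(range(i, min(i + k, n))) for i in range(0, n, k)]
    rng.shuffle(chunks)
    return [idx for chunk in chunks for idx in chunk]


def swap_adjacent(n: int, rng: random.Random, p: float = 0.5) -> List[int]:
    """Hypothetical local perturbation: swap non-overlapping adjacent pairs
    with probability p, so each unit moves by at most one position."""
    perm = list(range(n))
    i = 0
    while i < n - 1:
        if rng.random() < p:
            perm[i], perm[i + 1] = perm[i + 1], perm[i]
            i += 2  # skip the pair just swapped so swaps never overlap
        else:
            i += 1
    return perm


if __name__ == "__main__":
    rng = random.Random(0)
    text = "the proposed metrics score local and global ordering"
    chars = list(text)  # character granularity; words or sub-words work the same way
    for name, perm in [
        ("chunk shuffle (k=8)", shuffle_chunks(len(chars), 8, rng)),
        ("adjacent swaps", swap_adjacent(len(chars), rng)),
    ]:
        perturbed = "".join(chars[j] for j in perm)
        print(f"{name}: IDC={index_displacement_count(perm):.3f} "
              f"DND={direct_neighbour_displacement(perm):.3f} -> {perturbed!r}")
```

Under these assumed definitions, a chunk shuffle can reach a high IDC while its DND stays bounded by (number of chunks - 1)/(n - 1), because only pairs straddling chunk boundaries can break. Adjacent swaps behave the opposite way: IDC stays at most 1/(n - 1), yet DND can approach 1.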
