Attention is an increasingly popular mechanism used in a wide range of neural architectures. Because of the fast-paced advances in this domain, a systematic overview of attention is still missing. In this article, we define a unified model for attention architectures for natural language processing, with a focus on architectures designed to work with vector representation of the textual data. We discuss the dimensions along which proposals differ, the possible uses of attention, and chart the major research activities and open challenges in the area.

翻译:注意力是各种神经结构中日益流行的一种机制,由于这一领域的快速进展,仍然缺乏对注意力的系统全面了解。在本条中,我们为自然语言处理的注意结构确定了统一的模式,重点是旨在与文本数据的矢量代表合作的构架。我们讨论了各种建议的不同层面、关注的可能用途以及该领域的主要研究活动和公开挑战。

相关内容

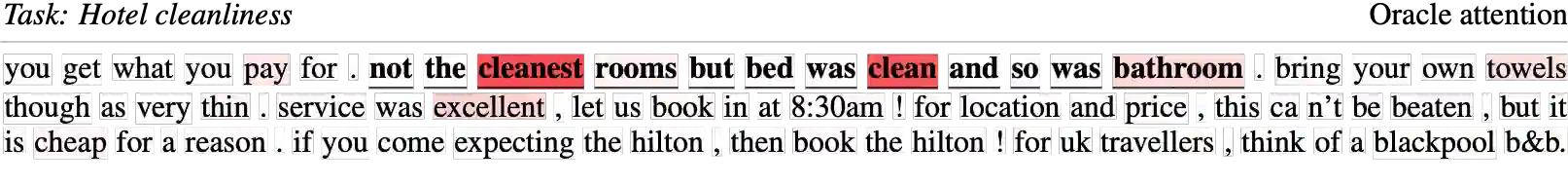

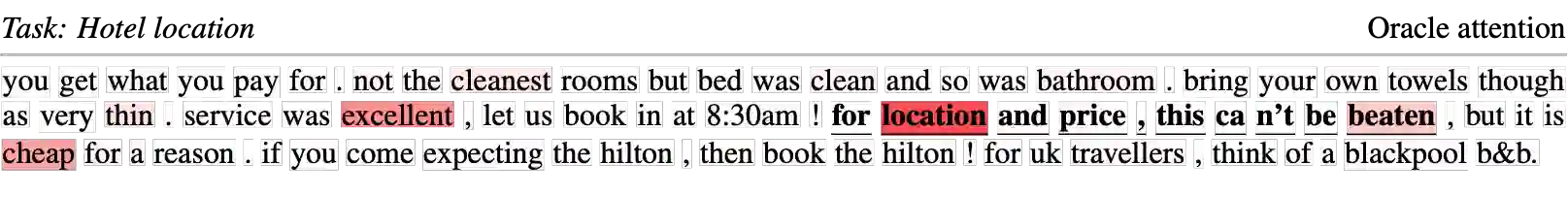

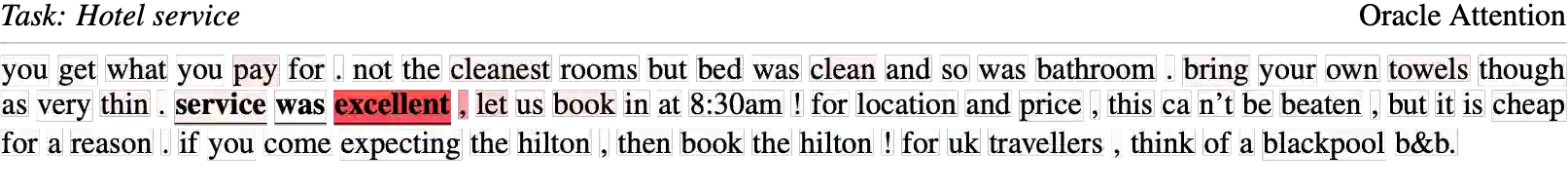

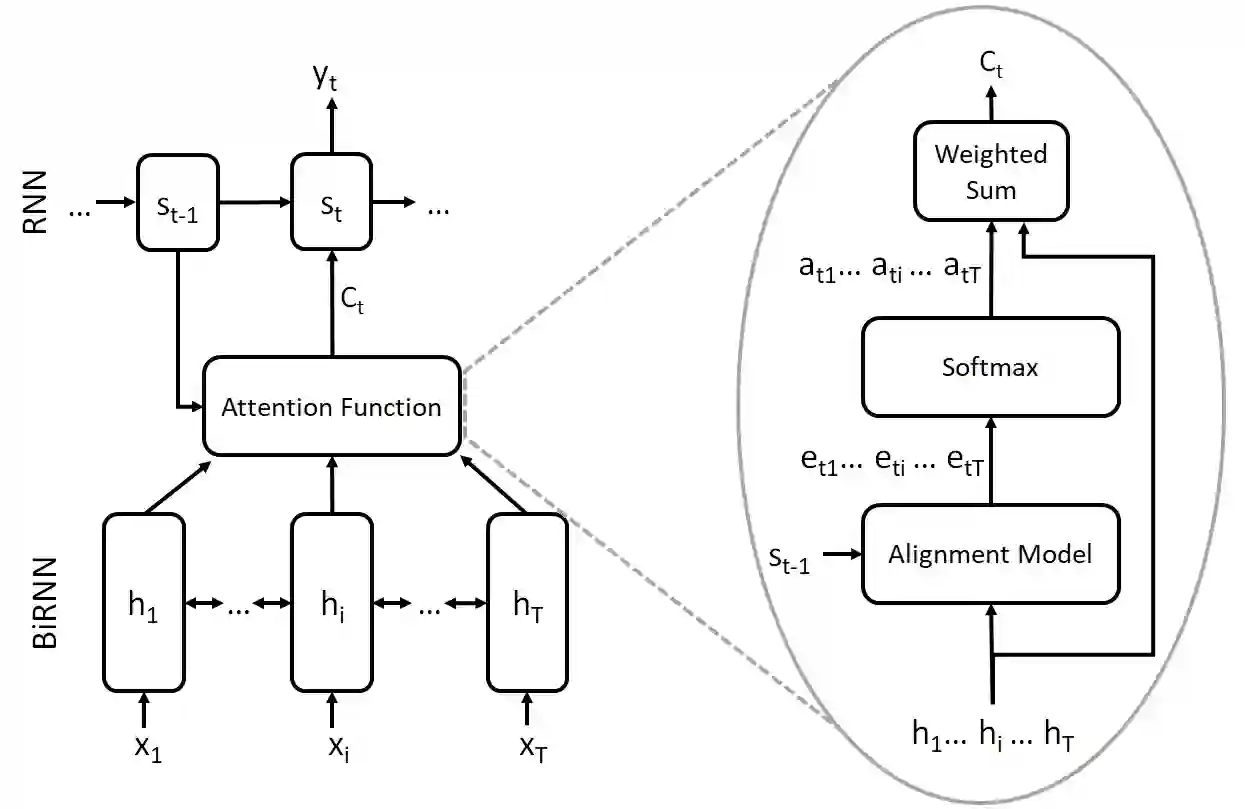

Attention机制最早是在视觉图像领域提出来的,但是真正火起来应该算是google mind团队的这篇论文《Recurrent Models of Visual Attention》[14],他们在RNN模型上使用了attention机制来进行图像分类。随后,Bahdanau等人在论文《Neural Machine Translation by Jointly Learning to Align and Translate》 [1]中,使用类似attention的机制在机器翻译任务上将翻译和对齐同时进行,他们的工作算是是第一个提出attention机制应用到NLP领域中。接着类似的基于attention机制的RNN模型扩展开始应用到各种NLP任务中。最近,如何在CNN中使用attention机制也成为了大家的研究热点。下图表示了attention研究进展的大概趋势。

Arxiv

4+阅读 · 2019年8月27日