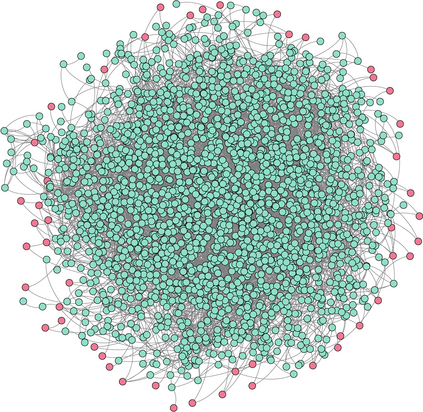

Graph convolutional networks (GCNs) have been shown to be vulnerable to small adversarial perturbations, which poses a severe threat and largely limits their application in security-critical scenarios. To mitigate this threat, considerable research effort has been devoted to increasing the robustness of GCNs against adversarial attacks. However, current defense approaches are typically designed for the whole graph and optimize global performance, which makes it hard to protect important individual nodes from stronger targeted adversarial attacks. In this work, we present a simple yet effective method named Graph Universal Adversarial Defense (GUARD). Unlike previous works, GUARD protects each individual node from attacks with a universal defensive patch, which is generated once and can be applied to any node in a graph (that is, it is node-agnostic). Extensive experiments on four benchmark datasets demonstrate that our method significantly improves the robustness of several established GCNs against multiple adversarial attacks and outperforms state-of-the-art defense methods by large margins. Our code is publicly available at https://github.com/EdisonLeeeee/GUARD.
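
The "generated once, applied to any node" idea can be illustrated with a small sketch. The abstract does not say how the patch is built or what applying it does, so everything below is an assumption made for illustration: the patch is taken to be a fixed set of node ids, applying it to a target node is taken to mean removing that node's edges to the patch nodes, and `build_patch` and `apply_patch` are hypothetical helpers, not functions from the GUARD repository.

```python
# Illustrative sketch only; this is NOT the GUARD implementation.
# Assumption: a "patch" is a fixed set of node ids chosen once per graph, and
# applying it to a target node removes the target's edges to those nodes.

def build_patch(adj, k):
    """Pick the k highest-degree nodes as a stand-in patch (hypothetical heuristic)."""
    by_degree = sorted(adj, key=lambda n: (-len(adj[n]), n))
    return frozenset(by_degree[:k])


def apply_patch(adj, target, patch):
    """Return a copy of the graph in which `target` has no edges to patch nodes."""
    patched = {n: set(nbrs) for n, nbrs in adj.items()}
    for p in patch:
        if p != target and p in patched[target]:
            # Undirected graph: drop the edge in both directions.
            patched[target].discard(p)
            patched[p].discard(target)
    return patched


# Toy undirected graph stored as an adjacency dict.
adj = {
    0: {1, 2, 3, 4},
    1: {0, 2},
    2: {0, 1, 3},
    3: {0, 2},
    4: {0},
}

patch = build_patch(adj, k=1)               # computed once for the whole graph
protected_1 = apply_patch(adj, 1, patch)    # reused to protect node 1
protected_4 = apply_patch(adj, 4, patch)    # and, unchanged, to protect node 4
```

The point of the sketch is only the reuse pattern: the same `patch` object is passed to every call, so the cost of building it does not grow with the number of nodes that need protection.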
