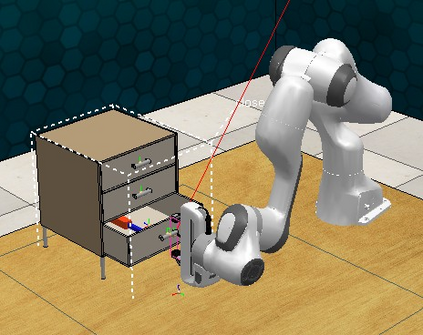

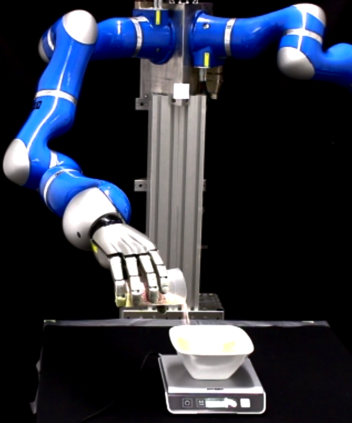

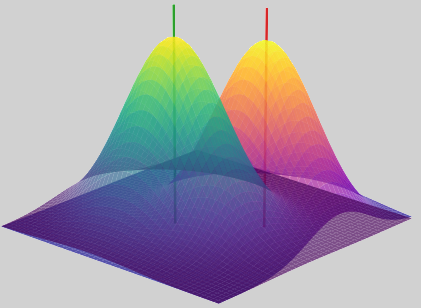

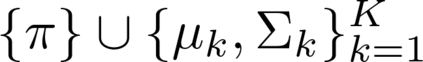

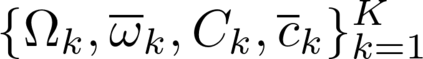

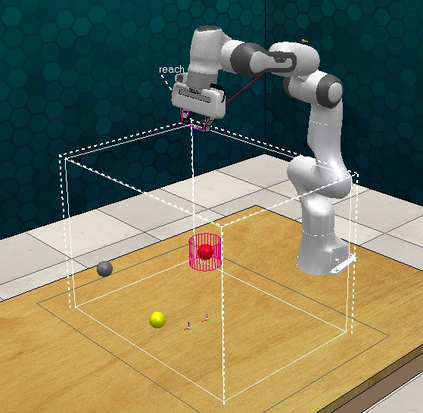

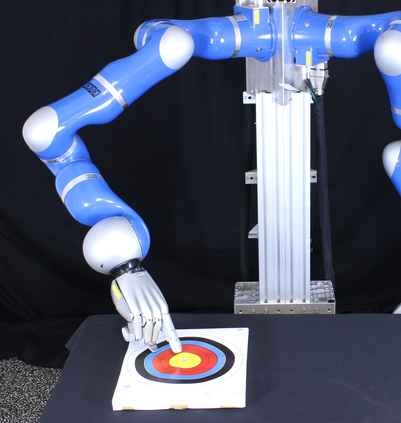

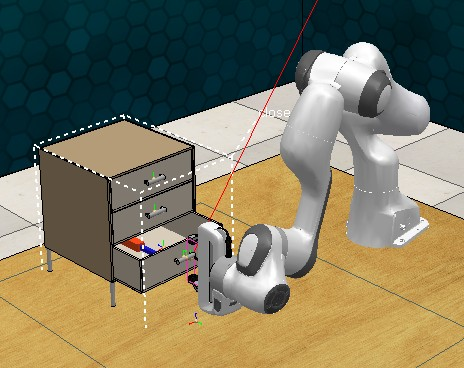

Parameterized movement primitives have been extensively used for imitation learning of robotic tasks. However, the high dimensionality of the parameter space hinders the improvement of such primitives in the reinforcement learning (RL) setting, especially when learning on physical robots. In this paper, we propose a novel view on handling the demonstrated trajectories to acquire low-dimensional, non-linear latent dynamics, using mixtures of probabilistic principal component analyzers (MPPCA) on the movements' parameter space. Moreover, we introduce a new contextual off-policy RL algorithm, named LAtent-Movements Policy Optimization (LAMPO). LAMPO can provide gradient estimates from previous experience using self-normalized importance sampling, thereby making full use of samples collected in previous learning iterations. Combined, these advantages provide a complete framework for sample-efficient off-policy optimization of movement primitives for robot learning of high-dimensional manipulation skills. Our experimental results, obtained both in simulation and on a real robot, show that LAMPO learns policies more sample-efficiently than common approaches in the literature.
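To illustrate the idea behind reusing old samples, the following is a minimal sketch of a generic self-normalized importance sampling estimator. It is not the LAMPO gradient estimator itself: it only estimates an expected reward under a new distribution from samples drawn under an old one. The one-dimensional Gaussian "policies", the quadratic reward, and the names `gaussian_logpdf` and `snis_estimate` are all assumptions made for illustration.

```python
import math
import random


def gaussian_logpdf(x: float, mean: float, std: float) -> float:
    """Log-density of a one-dimensional Gaussian."""
    z = (x - mean) / std
    return -0.5 * z * z - math.log(std) - 0.5 * math.log(2.0 * math.pi)


def snis_estimate(samples, rewards, target_logpdf, behavior_logpdf) -> float:
    """Self-normalized importance sampling estimate of the expected reward
    under the target distribution, from samples of the behavior distribution."""
    log_w = [target_logpdf(x) - behavior_logpdf(x) for x in samples]
    max_log_w = max(log_w)  # subtract the max for numerical stability
    w = [math.exp(lw - max_log_w) for lw in log_w]
    total = sum(w)  # normalizing by the weight sum is what makes it "self-normalized"
    return sum(wi * r for wi, r in zip(w, rewards)) / total


# Hypothetical toy setup: old policy N(0, 1), new policy N(0.5, 1),
# reward r(x) = -(x - 1)^2.
rng = random.Random(0)
old_samples = [rng.gauss(0.0, 1.0) for _ in range(20000)]
old_rewards = [-(x - 1.0) ** 2 for x in old_samples]

estimate = snis_estimate(
    old_samples,
    old_rewards,
    target_logpdf=lambda x: gaussian_logpdf(x, 0.5, 1.0),
    behavior_logpdf=lambda x: gaussian_logpdf(x, 0.0, 1.0),
)
# Analytic value under N(0.5, 1): -(variance + (mean - 1)^2) = -(1 + 0.25) = -1.25
print(f"SNIS estimate from old samples: {estimate:.3f}")
```

Because the weights are divided by their own sum, any constant factor in the density ratio cancels, which trades a small bias for lower variance compared with plain importance sampling. When the target and behavior distributions coincide, the estimate reduces to the ordinary sample mean.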
