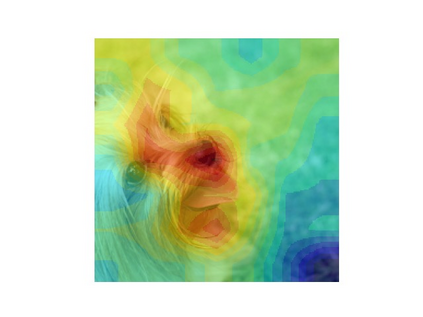

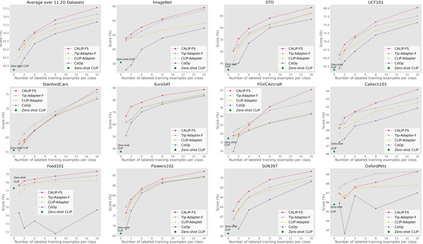

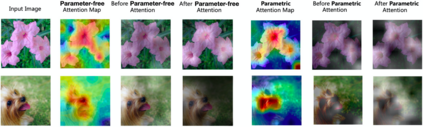

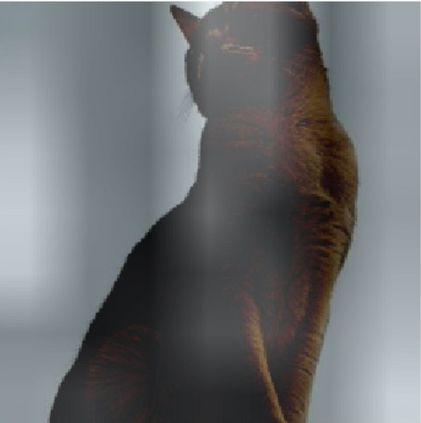

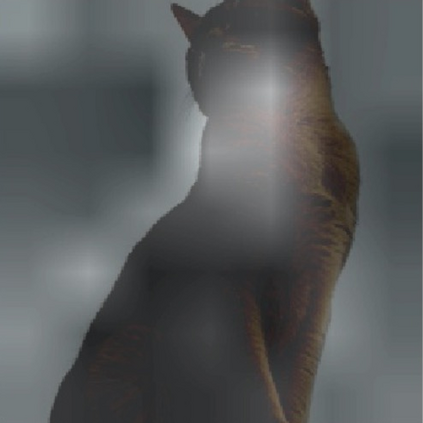

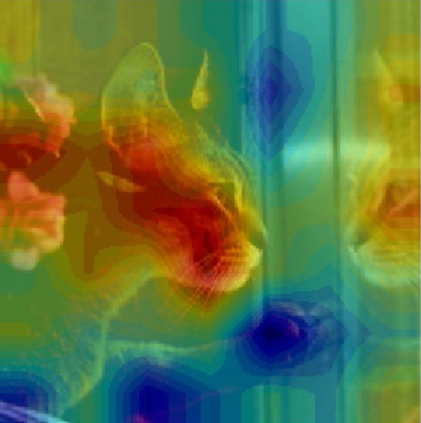

Contrastive Language-Image Pre-training (CLIP) has been shown to learn visual representations with strong transferability, achieving promising accuracy for zero-shot classification. To further improve its downstream performance, existing works add learnable modules on top of CLIP and fine-tune them on few-shot training sets. However, the resulting extra training cost and data requirements severely hinder the efficiency of model deployment and knowledge transfer. In this paper, we introduce a free-lunch enhancement method, CALIP, to boost CLIP's zero-shot performance via a parameter-free attention module. Specifically, we guide visual and textual representations to interact with each other and explore cross-modal informative features via attention. As pre-training has largely reduced the embedding distance between the two modalities, we discard all learnable parameters in the attention and bidirectionally update the multi-modal features, making the whole process parameter-free and training-free. In this way, the image features are blended with text-aware signals and the text representations become visually guided, for better adaptive zero-shot alignment. We evaluate CALIP on benchmarks spanning 14 datasets for both 2D image and 3D point cloud few-shot classification, showing consistent zero-shot performance improvement over CLIP. Building on this, we further insert a small number of linear layers into CALIP's attention module and verify its robustness under few-shot settings, where it also achieves leading performance compared with existing methods. These extensive experiments demonstrate the superiority of our approach for efficient enhancement of CLIP.
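The bidirectional, parameter-free update described in the abstract can be illustrated with a minimal NumPy sketch. It is an illustration under stated assumptions, not the authors' implementation: the function names, the single shared `temperature`, the `blend` weight, the use of mean pooling to obtain a global image feature, and the way the three similarity terms are summed are all hypothetical choices made here for clarity. The only property taken from the abstract is that the attention has no learnable projections, so both modalities are updated directly from their pre-trained embeddings.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def l2_normalize(x, axis=-1, eps=1e-12):
    # Scale vectors to unit length so dot products are cosine similarities.
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def parameter_free_attention(visual, text, temperature=1.0):
    """Bidirectional cross-modal attention with no learnable weights.

    visual: (N, C) spatial image features (assumed shape).
    text:   (K, C) one embedding per class prompt (assumed shape).
    Returns text-aware visual features (N, C) and
    visual-guided text features (K, C).
    """
    visual = l2_normalize(visual)
    text = l2_normalize(text)
    attn = visual @ text.T  # (N, K) cross-modal similarity, no projections
    visual_updated = softmax(attn / temperature, axis=-1) @ text
    text_updated = softmax(attn.T / temperature, axis=-1) @ visual
    return visual_updated, text_updated


def zero_shot_logits(visual, text, blend=0.5, temperature=1.0):
    """Combine original and attention-updated similarities (hypothetical rule)."""
    visual = l2_normalize(visual)
    text = l2_normalize(text)
    visual_upd, text_upd = parameter_free_attention(visual, text, temperature)
    # Mean pooling stands in for the encoder's global image feature (assumption).
    v_global = l2_normalize(visual.mean(axis=0))
    v_upd_global = l2_normalize(visual_upd.mean(axis=0))
    text_upd = l2_normalize(text_upd)
    base = text @ v_global             # plain CLIP-style similarity
    text_aware = text @ v_upd_global   # text-aware image vs. original text
    visual_guided = text_upd @ v_global  # original image vs. visual-guided text
    return base + blend * (text_aware + visual_guided)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    visual = rng.standard_normal((49, 16))  # e.g. a 7x7 feature map, 16 channels
    text = rng.standard_normal((5, 16))     # 5 candidate classes
    print("predicted class index:", int(np.argmax(zero_shot_logits(visual, text))))
```

Setting `blend=0.0` recovers the plain similarity between the pooled image feature and the text embeddings, which makes it easy to compare the attention-enhanced scores against an unmodified baseline. The random inputs above only exercise the shapes; real use would substitute features from a pre-trained CLIP encoder.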
