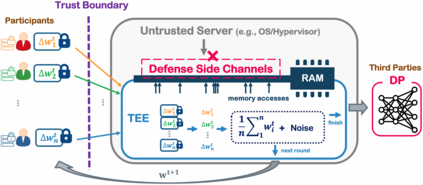

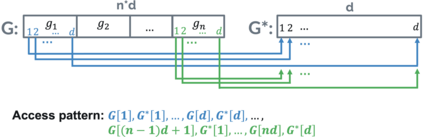

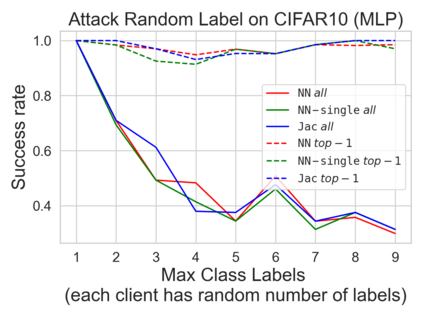

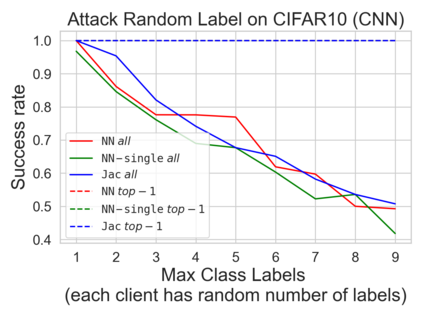

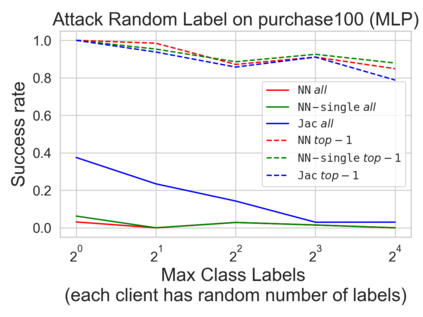

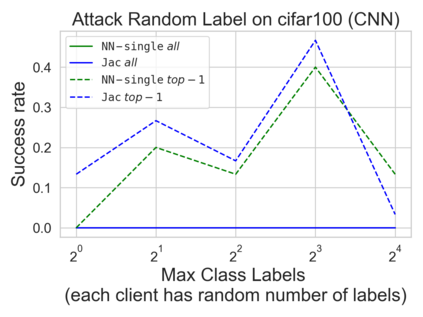

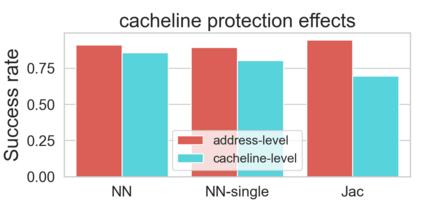

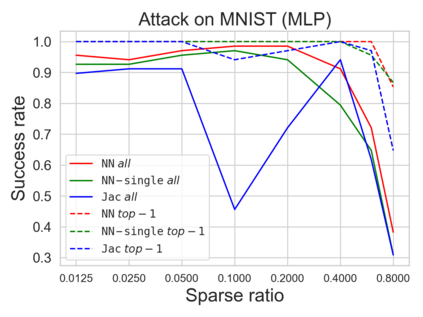

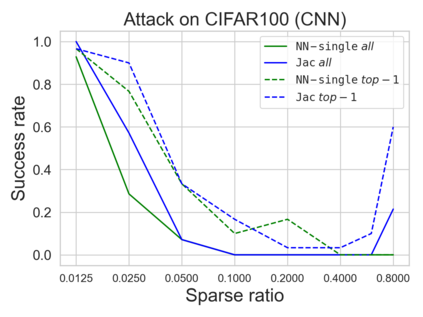

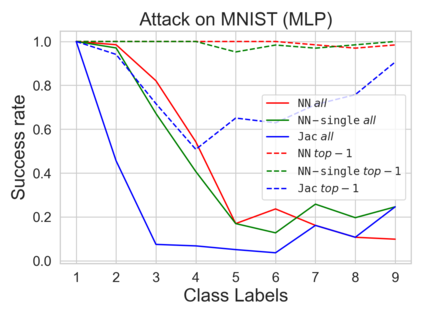

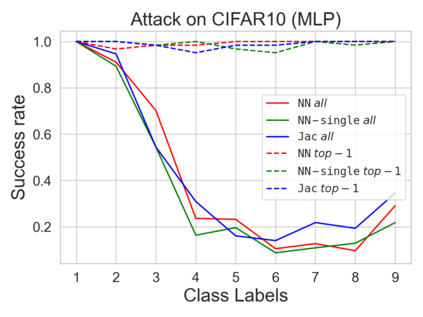

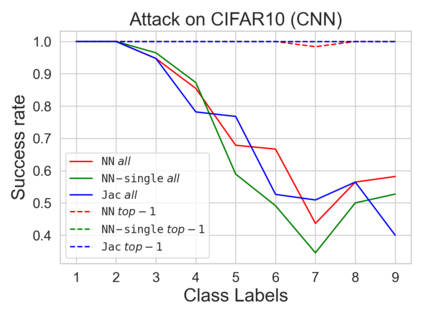

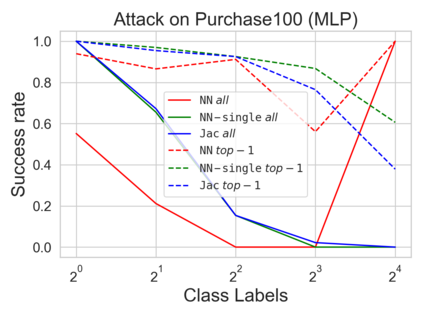

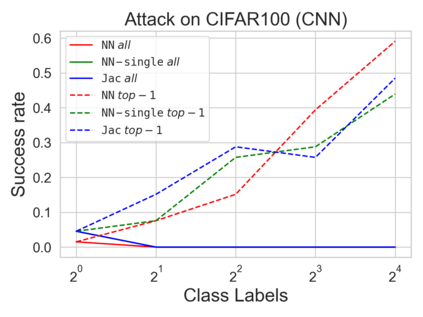

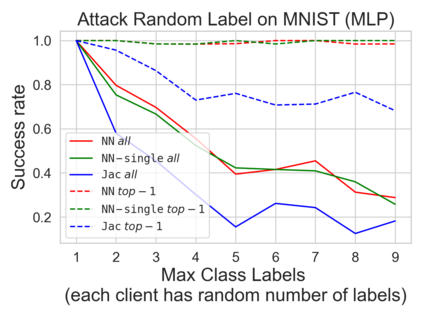

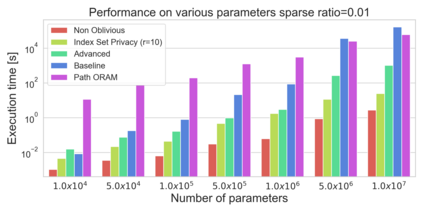

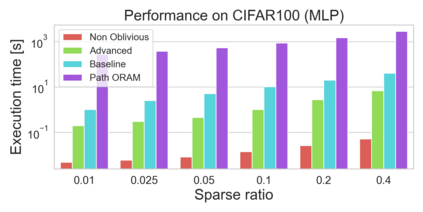

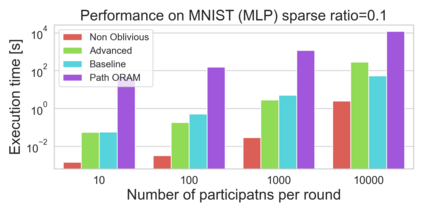

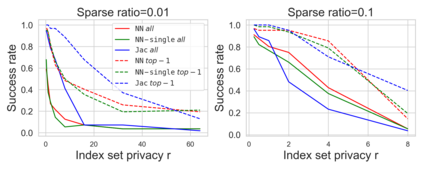

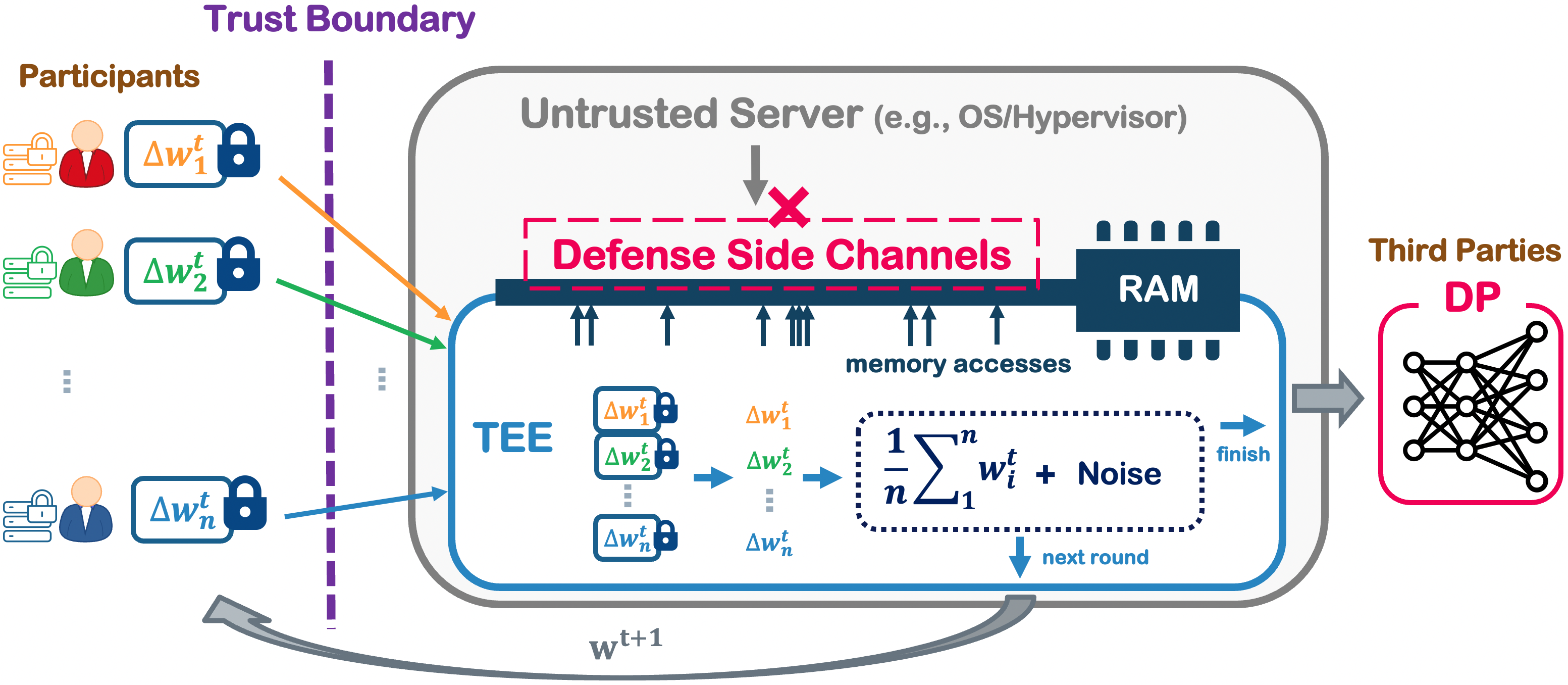

Differentially private federated learning (DP-FL) has received increasing attention as a way to mitigate the privacy risks of federated learning. Although several DP-FL schemes have been proposed, a utility gap remains. Central differential privacy in FL (CDP-FL) offers a good balance between privacy and model utility but requires a trusted server. Local differential privacy in FL (LDP-FL) does not require a trusted server but suffers from a poor privacy-utility trade-off. The recently proposed shuffle-DP-based FL has the potential to bridge the gap between CDP-FL and LDP-FL without a trusted server; however, a utility gap remains when the number of model parameters is large. In this work, we propose OLIVE, a system that combines the merits of CDP-FL and LDP-FL by leveraging a Trusted Execution Environment (TEE). Our main technical contributions are an analysis of, and countermeasures against, the vulnerabilities of the TEE in OLIVE. First, we theoretically analyze the memory access pattern leakage of OLIVE and find that sparsified gradients, which are common in FL, pose a risk. Second, we design an inference attack to understand how memory access patterns can be linked to the training data. Third, we propose oblivious yet efficient algorithms that prevent memory access pattern leakage in OLIVE. Our experiments on real-world data demonstrate that OLIVE is efficient even when training a model with hundreds of thousands of parameters, and that it is effective against side-channel attacks on the TEE.
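To make the leakage concrete, the following minimal Python sketch contrasts a direct aggregation of sparsified gradients with a naive oblivious one. It is an illustration only, not OLIVE's algorithm, which the abstract does not describe. The function names, the `(index, value)` representation of a sparse update, and the `trace` list that stands in for what a side-channel observer of enclave memory might see are all assumptions made for this example. Plain Python is also not constant-time, so the sketch models the access pattern, not a hardened implementation.

```python
from typing import List, Tuple

# Hypothetical representation: one client's sparsified gradient is a list
# of (parameter index, value) pairs.
SparseUpdate = List[Tuple[int, float]]


def aggregate_direct(updates: List[SparseUpdate], d: int):
    """Non-oblivious sum: only the slots named in each update are touched."""
    dense = [0.0] * d
    trace = []  # slots of `dense` accessed, in order (the modelled leak)
    for update in updates:
        for idx, val in update:
            dense[idx] += val
            trace.append(idx)
    return dense, trace


def aggregate_linear_scan(updates: List[SparseUpdate], d: int):
    """Naive oblivious sum: every pair scans all d slots.

    The access sequence depends only on d and the number of pairs,
    at a cost of O(d) work per pair.
    """
    dense = [0.0] * d
    trace = []
    for update in updates:
        for idx, val in update:
            for j in range(d):
                # Arithmetic select: add val to slot idx and 0.0 elsewhere.
                dense[j] += float(j == idx) * val
                trace.append(j)
    return dense, trace


if __name__ == "__main__":
    clients_a = [[(0, 0.5), (3, -1.0)], [(3, 2.0), (1, 0.25)]]
    clients_b = [[(2, 0.5), (4, -1.0)], [(0, 2.0), (4, 0.25)]]
    _, trace_a = aggregate_direct(clients_a, 5)
    _, trace_b = aggregate_direct(clients_b, 5)
    print("direct traces equal:", trace_a == trace_b)
    _, scan_a = aggregate_linear_scan(clients_a, 5)
    _, scan_b = aggregate_linear_scan(clients_b, 5)
    print("linear-scan traces equal:", scan_a == scan_b)
```

In the direct version the trace is exactly the list of indices each client kept after sparsification, which is the kind of signal an inference attack could try to link to training data. The linear-scan version hides the indices but multiplies the work by the model dimension, which suggests why the abstract stresses algorithms that are oblivious yet efficient.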
