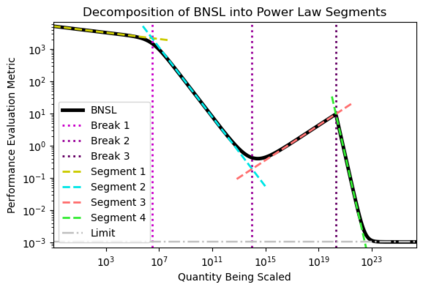

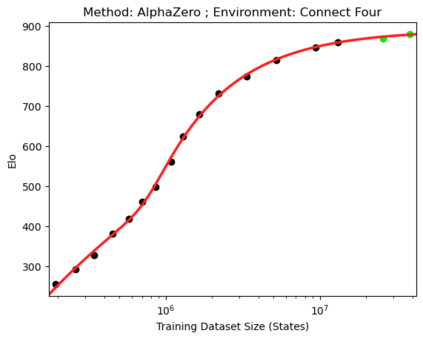

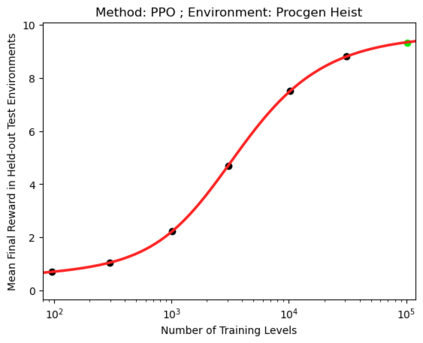

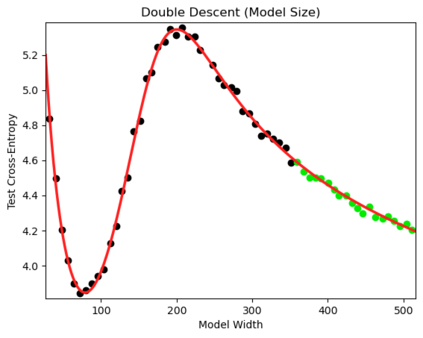

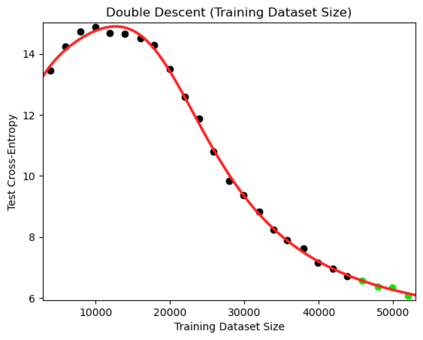

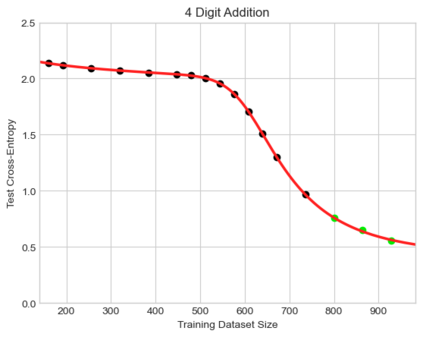

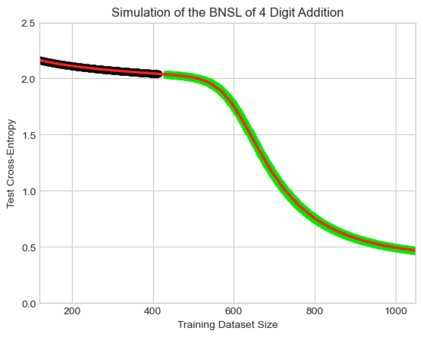

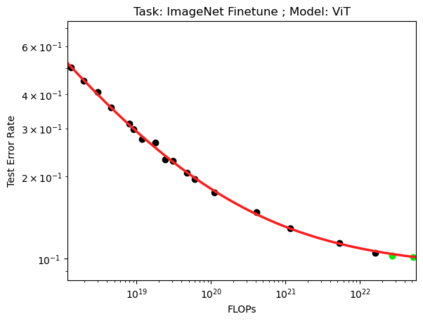

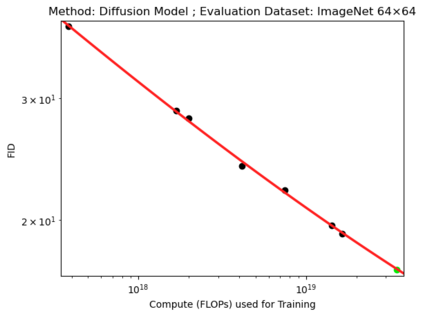

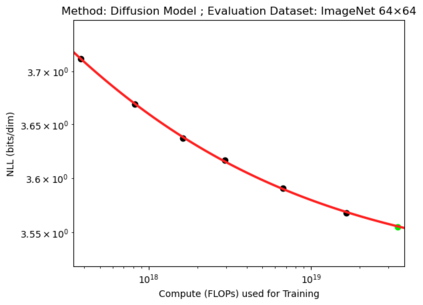

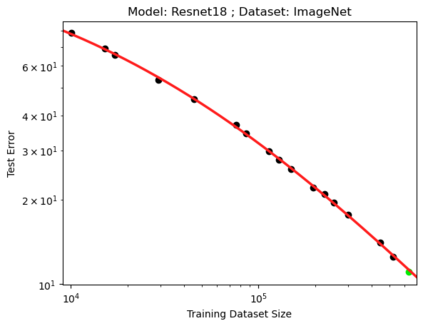

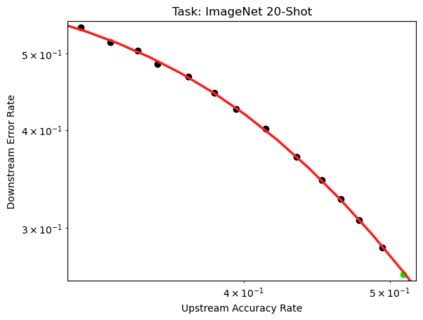

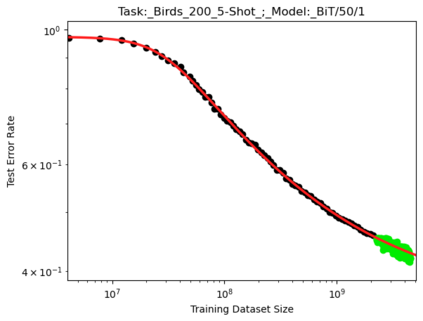

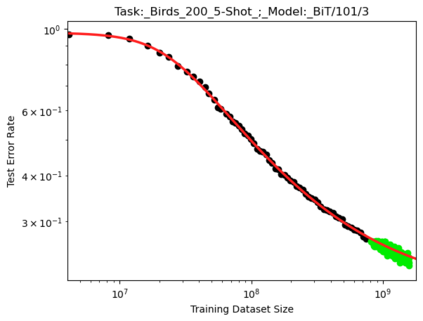

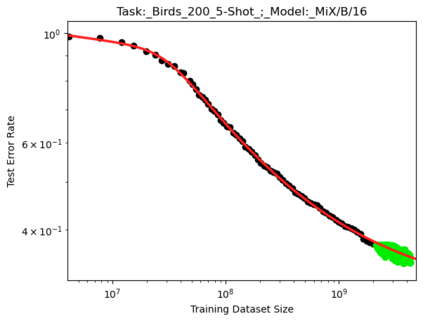

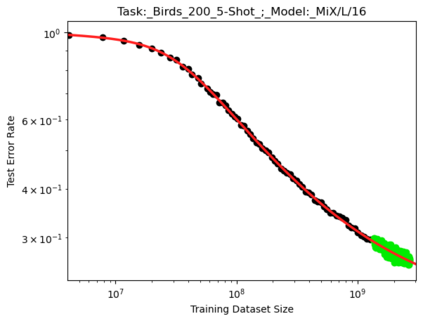

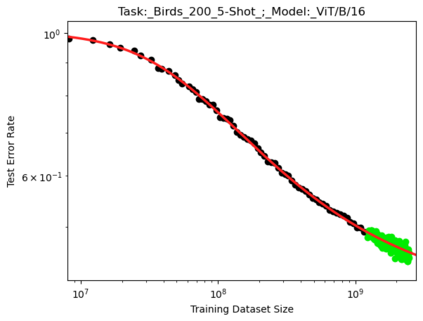

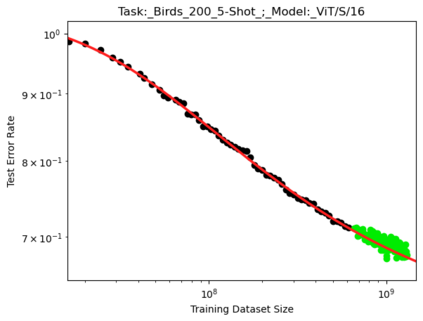

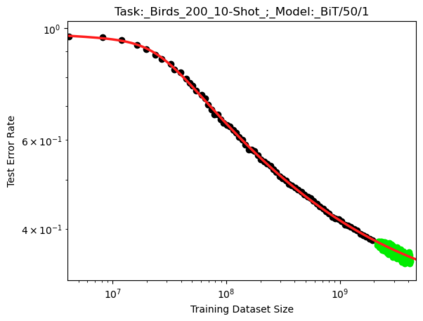

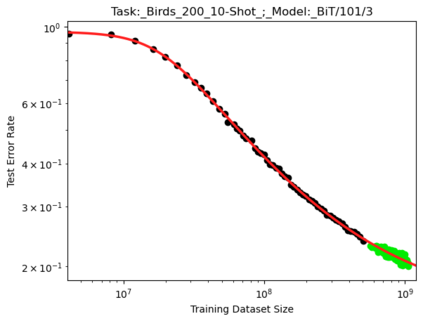

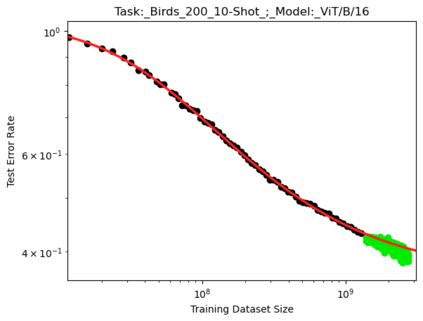

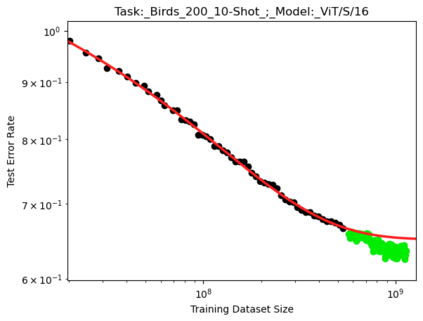

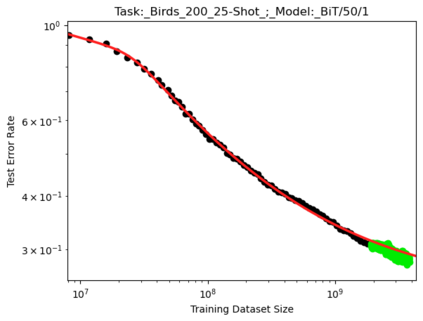

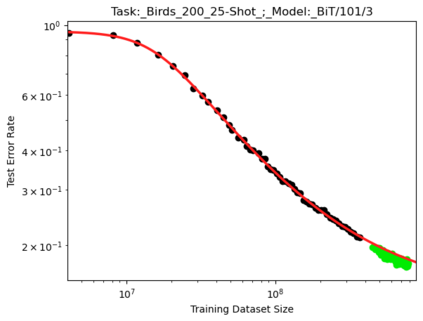

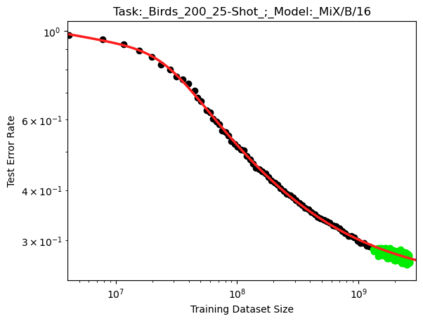

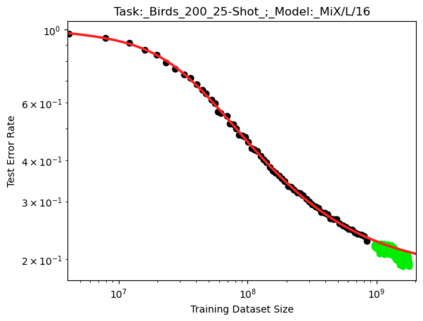

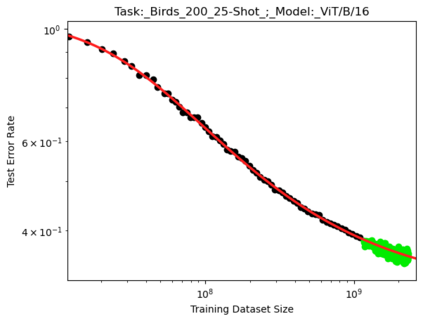

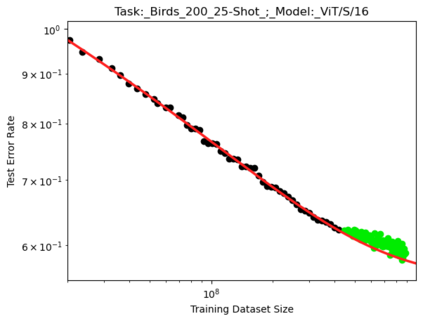

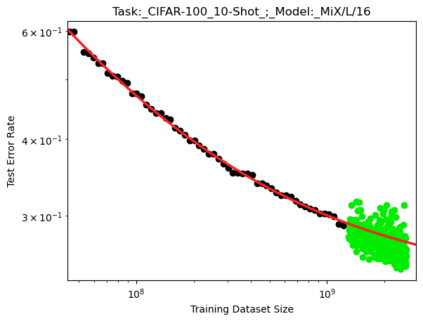

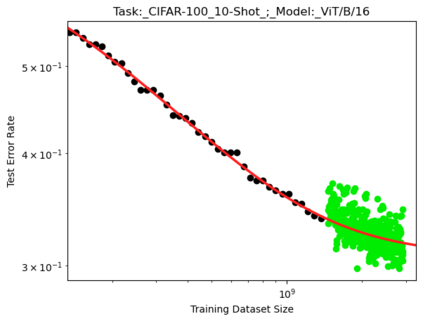

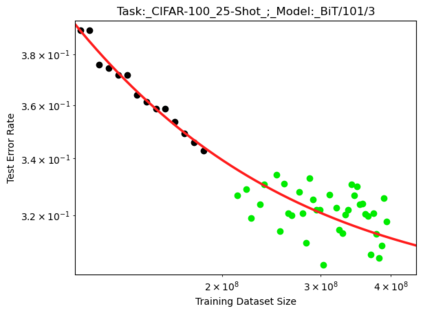

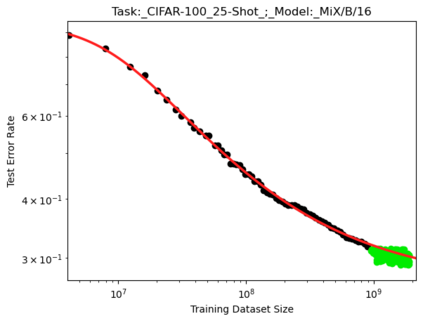

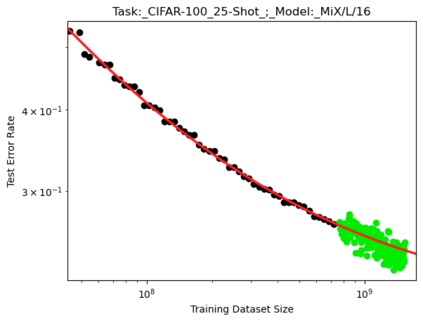

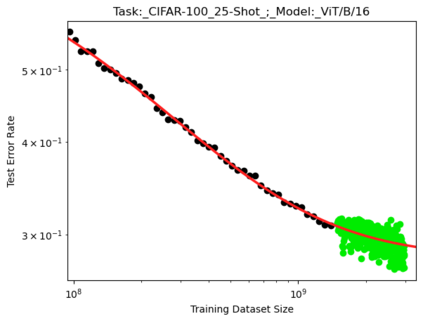

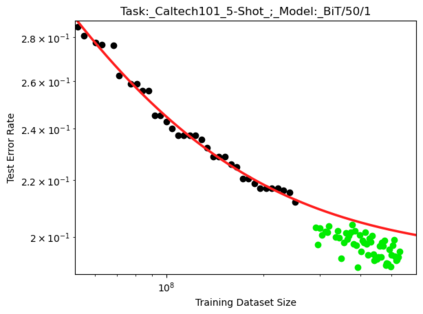

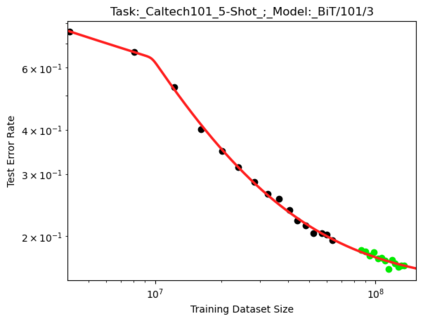

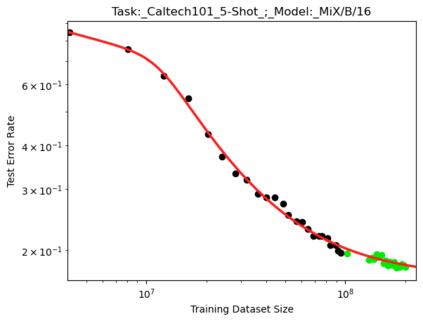

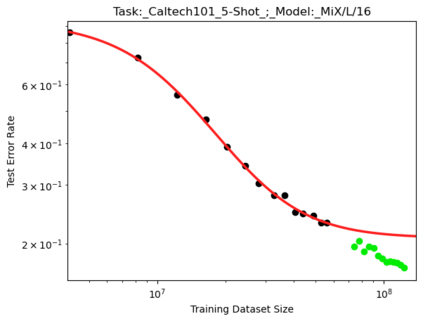

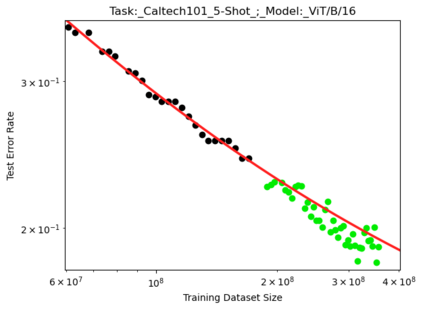

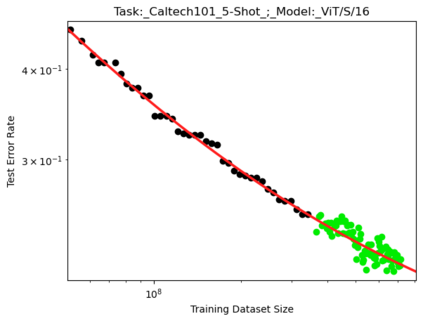

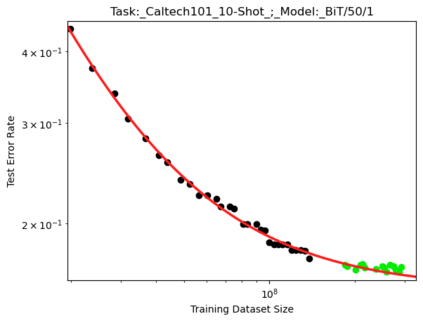

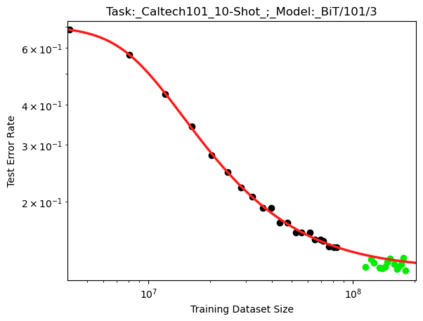

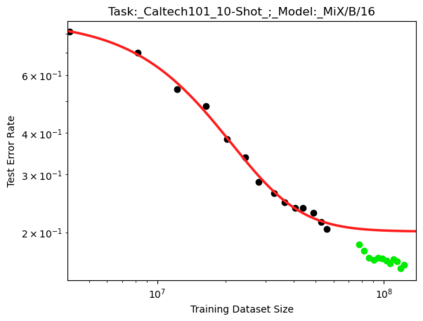

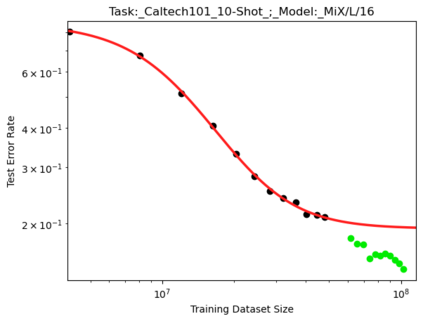

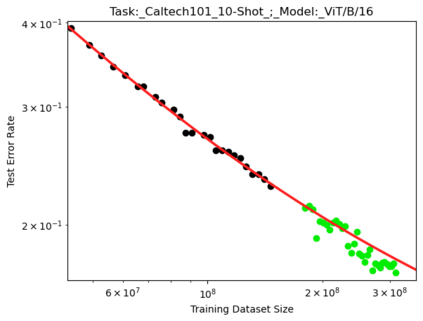

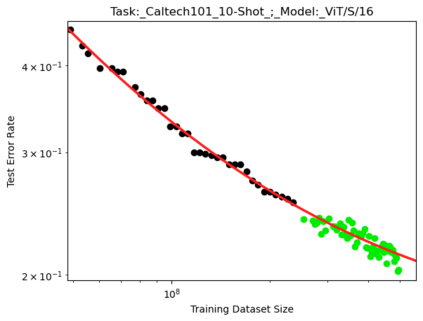

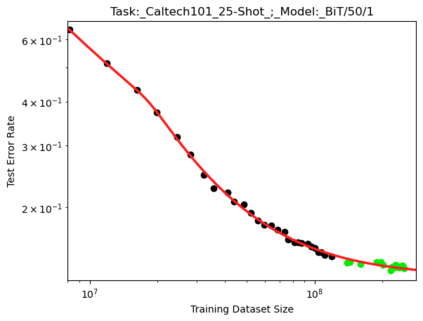

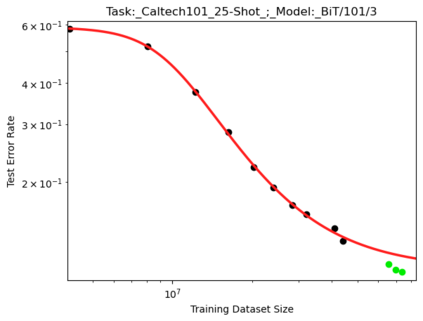

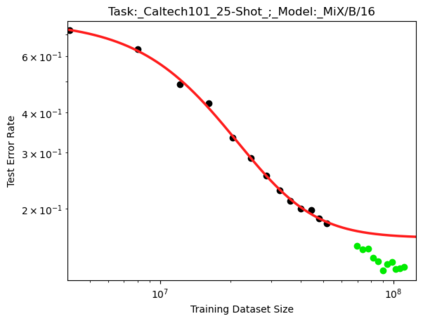

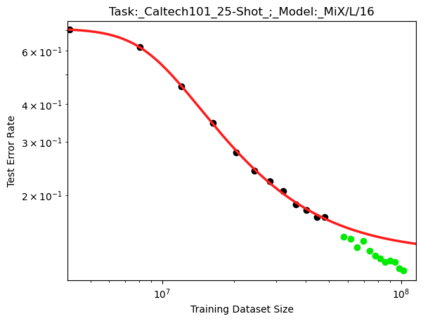

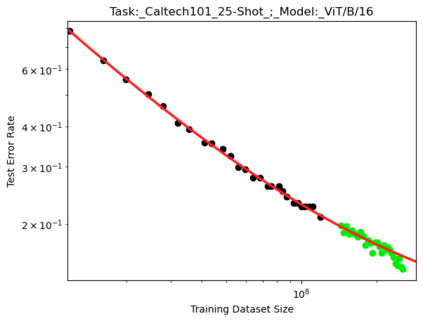

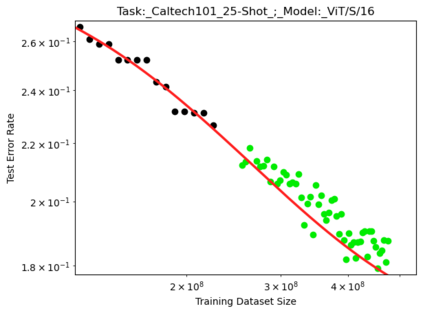

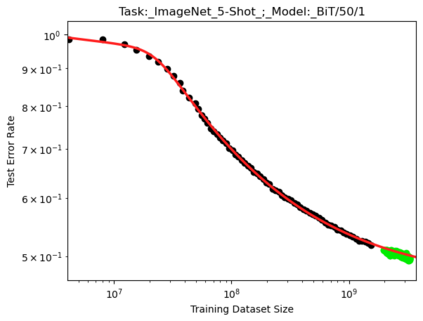

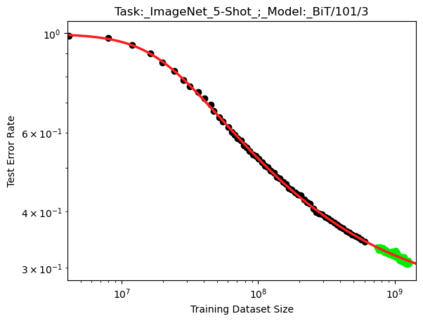

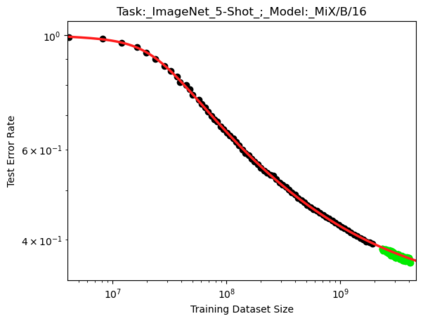

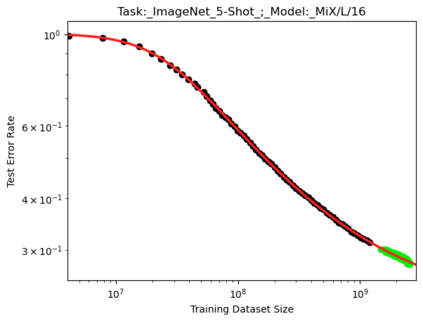

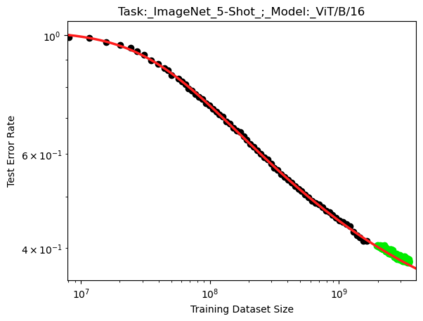

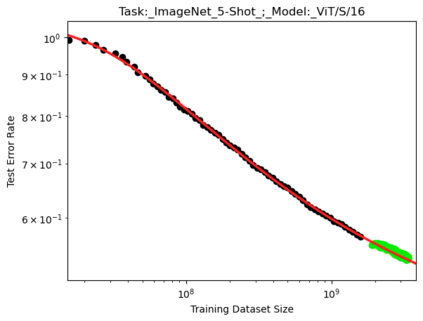

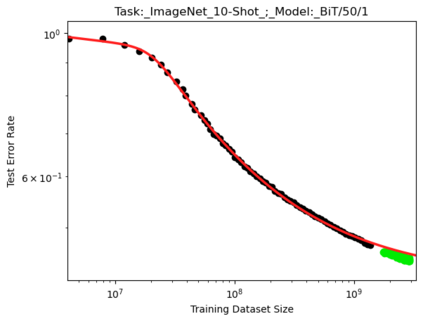

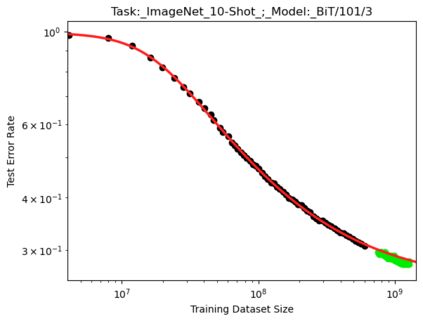

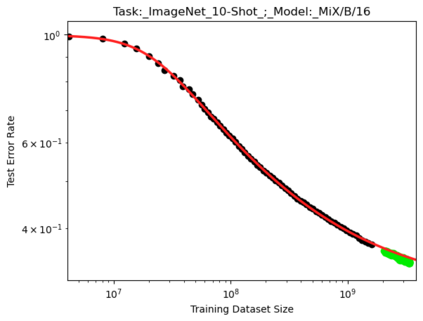

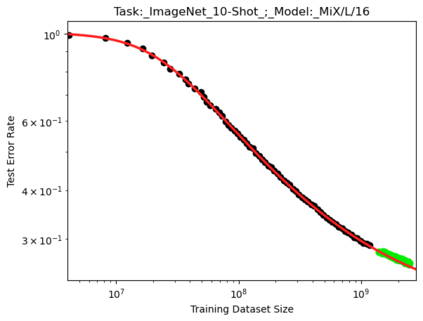

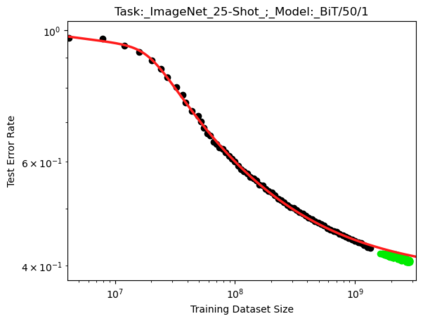

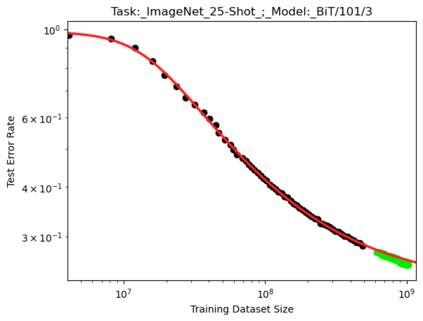

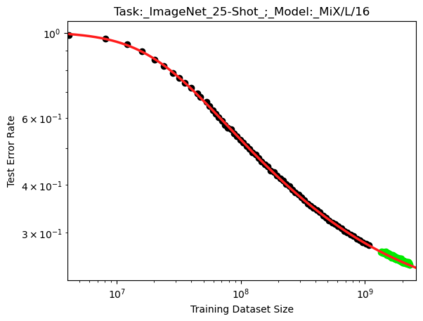

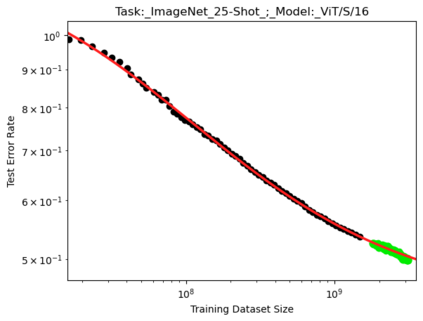

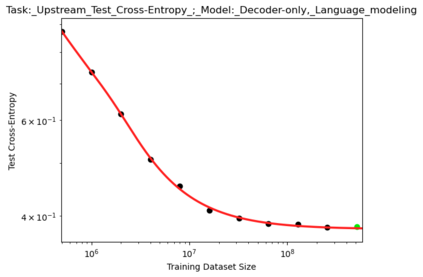

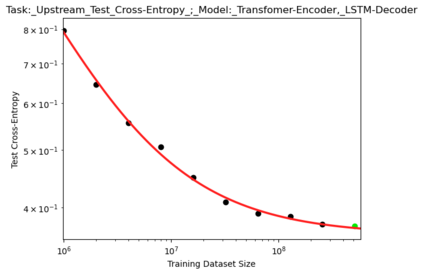

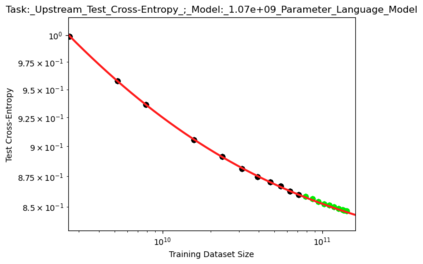

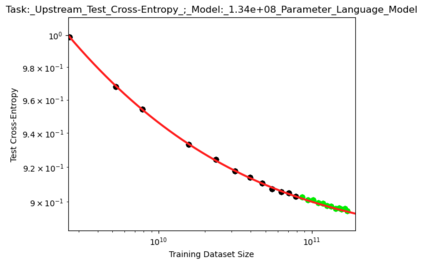

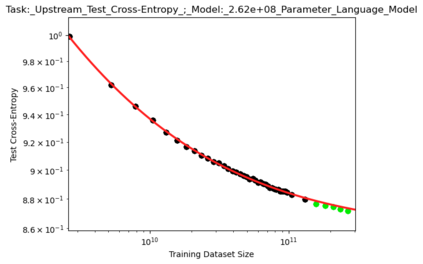

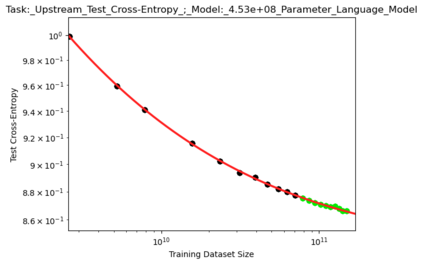

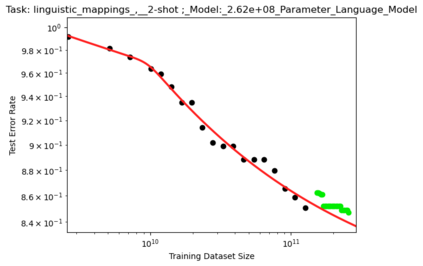

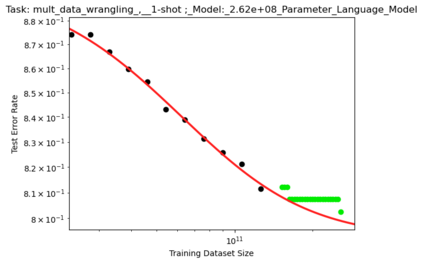

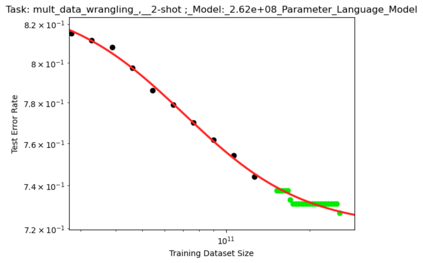

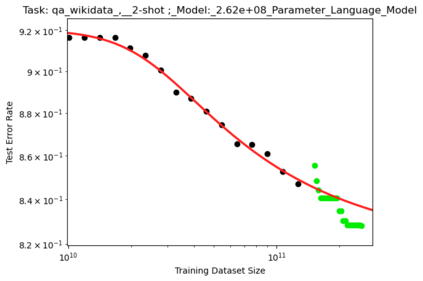

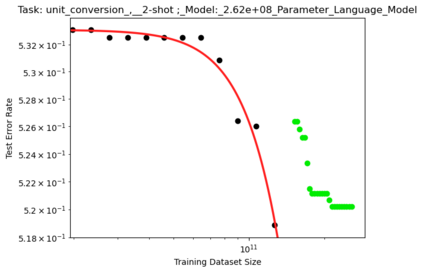

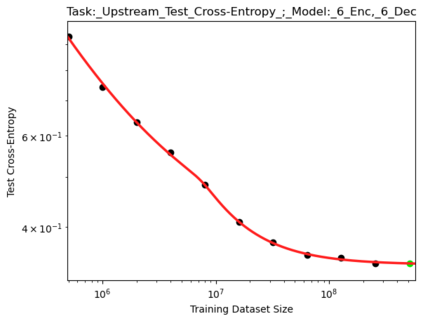

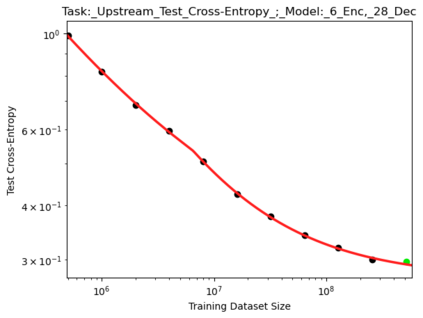

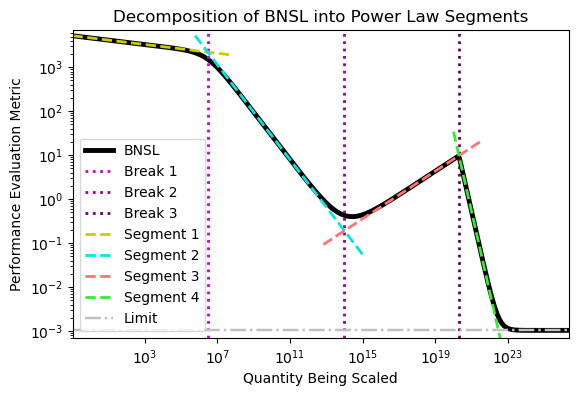

We present a smoothly broken power law functional form that accurately models and extrapolates the scaling behaviors of deep neural networks (i.e., how the evaluation metric of interest varies as the amount of compute used for training, the number of model parameters, the training dataset size, or upstream performance varies) for each task within a large and diverse set of upstream and downstream tasks, in zero-shot, prompted, and fine-tuned settings. This set includes large-scale vision and unsupervised language tasks, diffusion generative modeling of images, arithmetic, and reinforcement learning. Compared with other functional forms for neural scaling behavior, this functional form yields considerably more accurate extrapolations of scaling behavior on this set. Moreover, it accurately models and extrapolates scaling behavior that other functional forms are incapable of expressing, such as the non-monotonic transitions present in the scaling behavior of phenomena such as double descent, and the delayed, sharp inflection points present in the scaling behavior of tasks such as arithmetic. Lastly, we use this functional form to glean insights about the limit of the predictability of scaling behavior. Code is available at https://github.com/ethancaballero/broken_neural_scaling_laws
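The abstract names the functional form but does not write it out. As a rough illustration only, the sketch below assumes the commonly cited parameterization $y = a + b\,x^{-c_0} \prod_{i=1}^{n} \left(1 + (x/d_i)^{1/f_i}\right)^{-c_i f_i}$, where $x$ is the quantity being scaled and $y$ is the evaluation metric. The function name `bnsl`, the argument layout, and all numeric values are hypothetical choices for this example and are not taken from the linked repository.

```python
import numpy as np


def bnsl(x, a, b, c0, breaks):
    """Evaluate an assumed smoothly broken power law.

    x      : scaled quantity (compute, parameters, dataset size, ...), > 0
    a      : asymptotic offset of the metric
    b, c0  : scale and exponent of the first power-law segment
    breaks : iterable of (c_i, d_i, f_i) tuples, one per break, where
             d_i is the break location, c_i the change in log-log slope,
             and f_i the smoothness of the transition (smaller = sharper)
    """
    x = np.asarray(x, dtype=float)
    y = b * x ** (-c0)
    for c_i, d_i, f_i in breaks:
        # Each factor is ~1 well before d_i and ~(x/d_i)**(-c_i) well after,
        # so the log-log slope shifts smoothly by -c_i around x = d_i.
        y = y * (1.0 + (x / d_i) ** (1.0 / f_i)) ** (-c_i * f_i)
    return a + y


def loglog_slope(x_lo, x_hi, a, b, c0, breaks):
    """Finite-difference slope of log(y - a) against log(x)."""
    y_lo = bnsl(x_lo, a, b, c0, breaks) - a
    y_hi = bnsl(x_hi, a, b, c0, breaks) - a
    return float(np.log(y_hi / y_lo) / np.log(x_hi / x_lo))


# Hypothetical parameters: a single sharp break at x = 1e3.
example_breaks = [(1.0, 1e3, 0.1)]
slope_before = loglog_slope(1.0, 10.0, 0.0, 2.0, 0.5, example_breaks)
slope_after = loglog_slope(1e6, 1e7, 0.0, 2.0, 0.5, example_breaks)
```

With these made-up values, the curve follows one power law well before the break and a steeper one well after it. A negative `c_i` would instead flatten or reverse the trend, which is how a form of this shape can express non-monotonic behavior such as double descent. Fitting the parameters to measured points (for example with a nonlinear least-squares routine) is left out of this sketch.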
