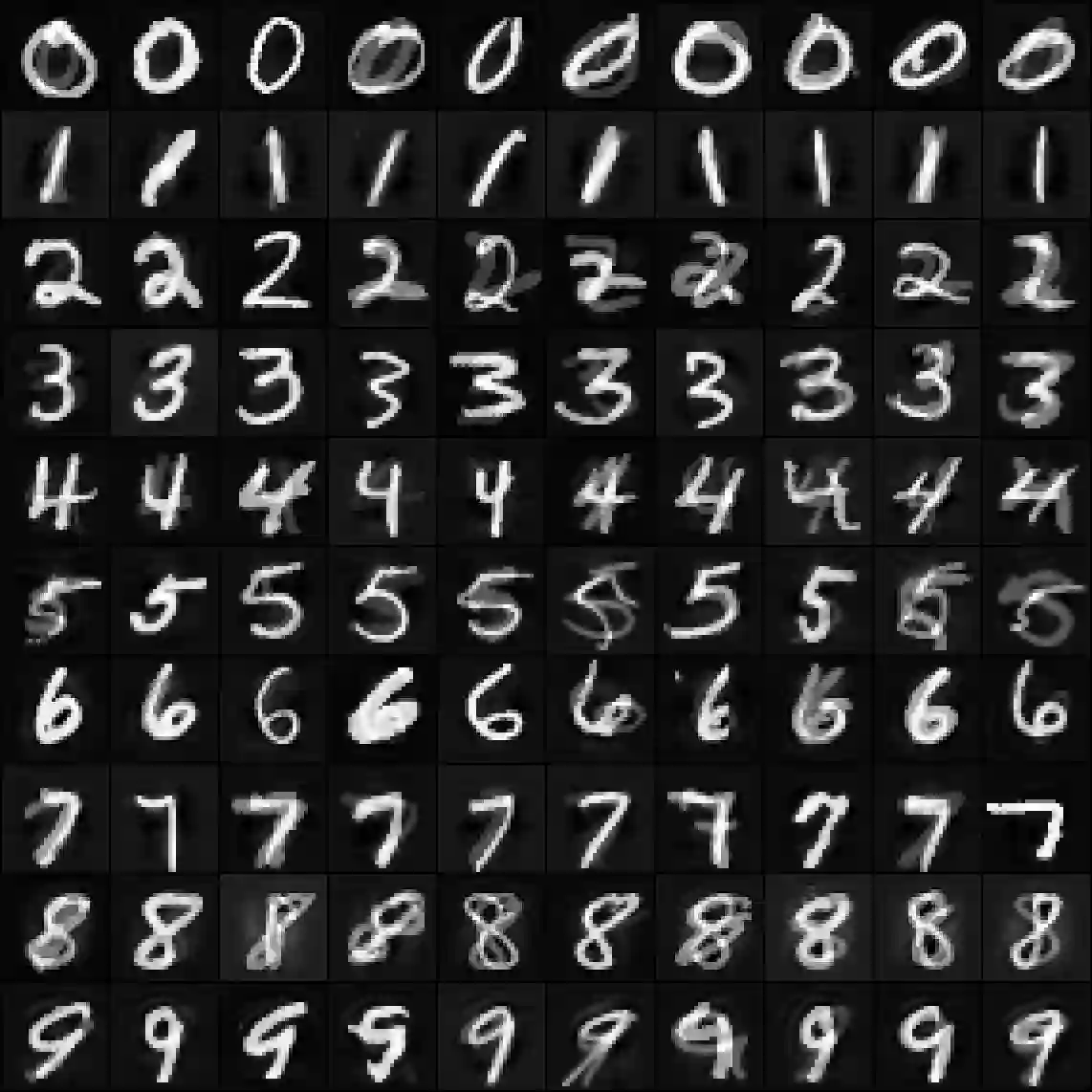

Bayesian Pseudo-Coreset (BPC) and Dataset Condensation are two parallel streams of work that construct a synthetic set such that a model trained independently on this synthetic set yields the same performance as one trained on the original training set. Dataset condensation methods construct such a synthetic set with non-Bayesian, heuristic approaches. BPC methods instead take a Bayesian approach and formulate the problem as divergence minimization between the posteriors associated with the original data and the synthetic data. However, BPC methods generally rely on distributional assumptions about these posteriors, which makes them less flexible and hinders their performance. In this work, we propose to address these issues by modeling the posterior associated with the synthetic data as an energy-based distribution. We derive a contrastive-divergence-like loss function to learn the synthetic set and show a simple and efficient way to estimate this loss. We evaluate the proposed method rigorously on multiple datasets, and the experiments show that it not only outperforms previous BPC methods but also achieves performance comparable to its dataset condensation counterparts.
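
The general shape of a contrastive-divergence-like loss can be illustrated with a small sketch. Assume (for this sketch only, not taken from the paper) that the synthetic-data posterior is an energy-based distribution $q(\theta \mid \tilde{x}) = \exp(-E(\theta; \tilde{x})) / Z(\tilde{x})$, that the divergence is $\mathrm{KL}(p \,\|\, q)$ with $p = p(\theta \mid x)$ the real-data posterior, and that samples from $p$ are available. Since $\nabla_{\tilde{x}} \log Z(\tilde{x}) = -\mathbb{E}_{q}[\nabla_{\tilde{x}} E(\theta; \tilde{x})]$, the gradient with respect to the synthetic set takes the familiar contrastive-divergence form

$$
\nabla_{\tilde{x}} \mathrm{KL}(p \,\|\, q) = \mathbb{E}_{\theta \sim p(\theta \mid x)}\big[\nabla_{\tilde{x}} E(\theta; \tilde{x})\big] - \mathbb{E}_{\theta \sim q(\theta \mid \tilde{x})}\big[\nabla_{\tilde{x}} E(\theta; \tilde{x})\big].
$$

The NumPy toy below estimates both expectations from samples: "positive" samples stand in for draws from $p$, and "negative" samples come from $q$ via the unadjusted Langevin algorithm. It is not the authors' implementation; the quadratic energy, the Gaussian stand-in for real-data posterior samples, the sampler settings, and every name (`BETA`, `energy`, `langevin_sample`, `cd_loss_and_grad`) are hypothetical.

```python
import numpy as np

BETA = 1.0  # hypothetical inverse temperature of the toy energy


def energy(theta, x_syn):
    """Hypothetical toy energy E(theta; x_syn) = (BETA / 2) * sum_j ||theta - x_j||^2.

    theta: (n, d) batch of parameters; x_syn: (m, d) synthetic points. Returns shape (n,).
    """
    diff = theta[:, None, :] - x_syn[None, :, :]
    return 0.5 * BETA * np.sum(diff ** 2, axis=(1, 2))


def grad_energy_theta(theta, x_syn):
    # dE/dtheta = BETA * sum_j (theta - x_j) = BETA * (m * theta - sum_j x_j), shape (n, d)
    m = x_syn.shape[0]
    return BETA * (m * theta - x_syn.sum(axis=0))


def mean_grad_energy_x(theta, x_syn):
    # dE/dx_j = BETA * (x_j - theta), averaged over the theta batch; shape (m, d)
    return BETA * (x_syn - theta.mean(axis=0))


def langevin_sample(x_syn, rng, n_samples=256, n_steps=100, step=0.01):
    """Approximate samples from q(theta | x_syn) ∝ exp(-E(theta; x_syn)) using the
    unadjusted Langevin algorithm. For stability, step must stay below 2 / (BETA * m)."""
    theta = rng.standard_normal((n_samples, x_syn.shape[1]))
    for _ in range(n_steps):
        noise = rng.standard_normal(theta.shape)
        theta = theta - step * grad_energy_theta(theta, x_syn) + np.sqrt(2.0 * step) * noise
    return theta


def cd_loss_and_grad(x_syn, theta_pos, theta_neg):
    """Surrogate loss: mean energy at positive samples minus mean energy at negative
    samples. The negatives are treated as constants (no gradient through the sampler)."""
    loss = energy(theta_pos, x_syn).mean() - energy(theta_neg, x_syn).mean()
    grad = mean_grad_energy_x(theta_pos, x_syn) - mean_grad_energy_x(theta_neg, x_syn)
    return loss, grad


rng = np.random.default_rng(0)
d, m = 2, 10
# Stand-in for samples from the real-data posterior (assumption: a fixed Gaussian).
theta_pos = rng.normal(loc=[2.0, -1.0], scale=0.1, size=(256, d))
x_syn = rng.standard_normal((m, d))  # learnable synthetic set

for _ in range(200):
    theta_neg = langevin_sample(x_syn, rng)  # fresh negative samples from q
    loss, grad = cd_loss_and_grad(x_syn, theta_pos, theta_neg)
    x_syn -= 0.1 * grad  # plain gradient descent on the synthetic points

print("synthetic centroid:", x_syn.mean(axis=0))
print("positive-sample mean:", theta_pos.mean(axis=0))
```

In this toy the energy only controls the mean of $q$ (its covariance is $(\beta m)^{-1} I$ whatever $\tilde{x}$ is), so descent on the surrogate moves the centroid of the synthetic set toward the mean of the positive samples while leaving its spread unchanged. A realistic variant would presumably use a model's training loss on $\tilde{x}$ as the energy and automatic differentiation for the gradients.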
