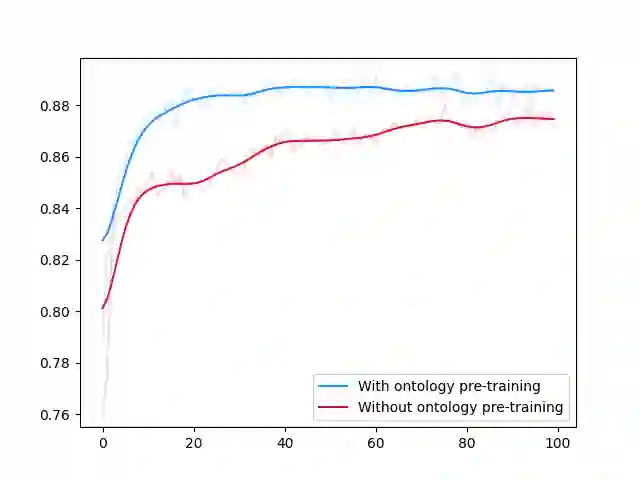

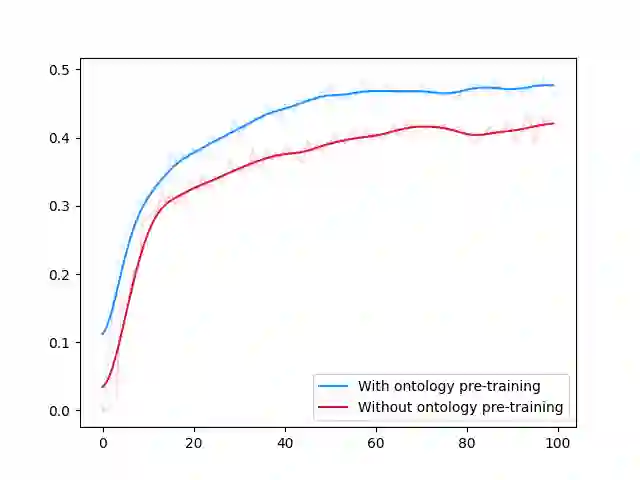

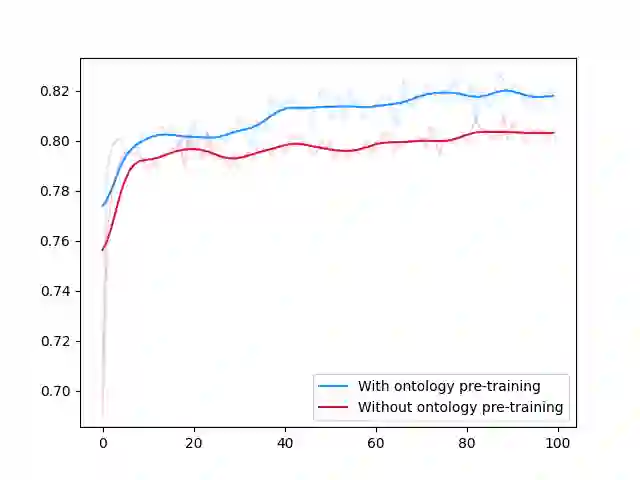

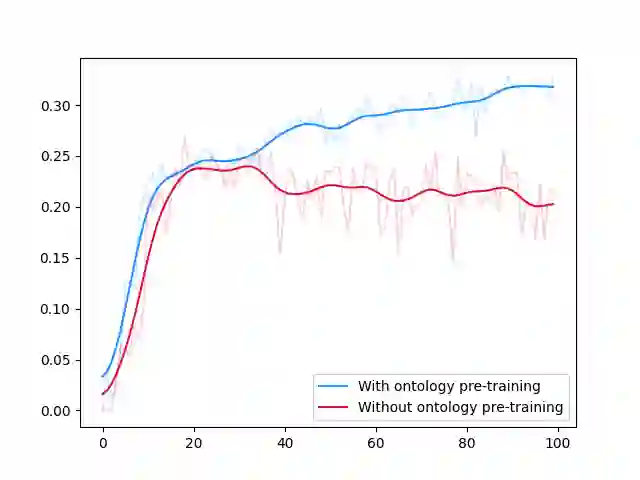

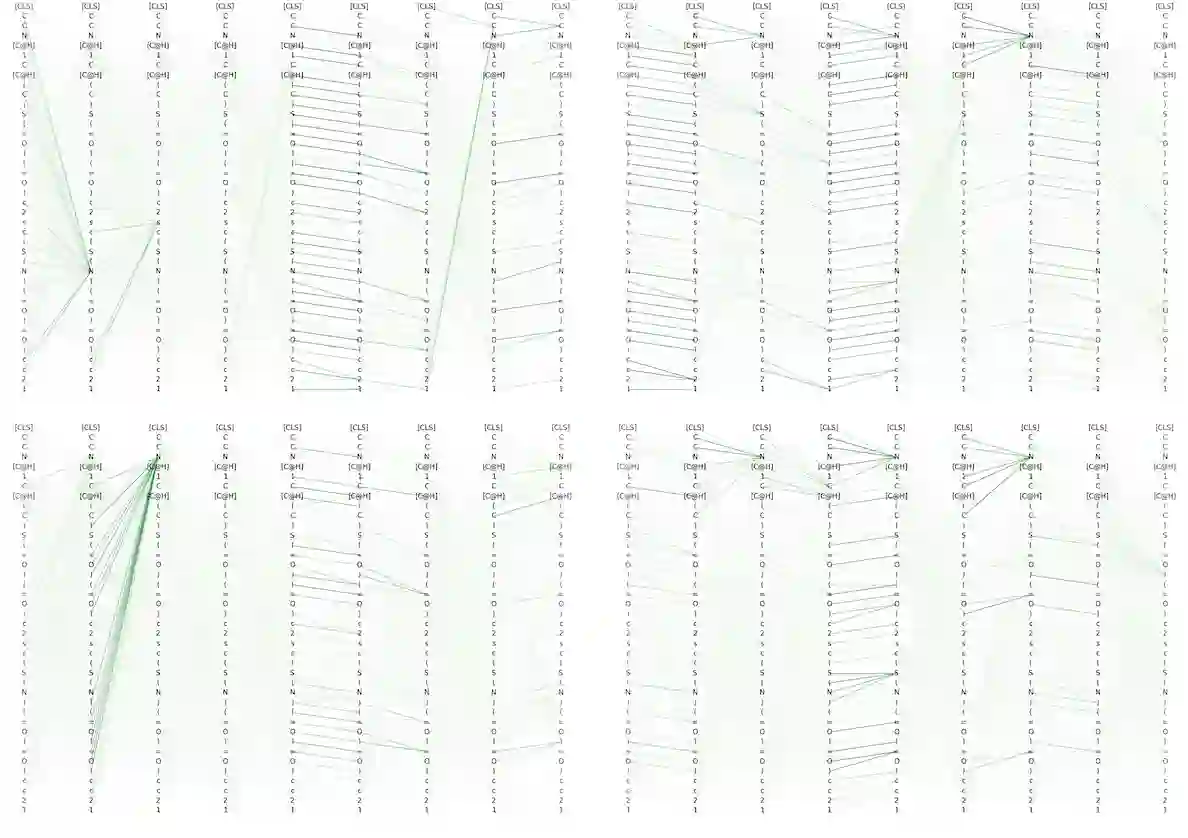

Integrating human knowledge into neural networks has the potential to improve their robustness and interpretability. We have developed a novel approach, which we call ontology pre-training, for integrating knowledge from ontologies into the structure of a Transformer network: we first train the network to predict membership in ontology classes, which embeds the structure of the ontology into the network, and then fine-tune it for the particular prediction task. We apply this approach to a case study in predicting the potential toxicity of a small molecule from its molecular structure, a challenging task for machine learning in life sciences chemistry. Our approach improves on the state of the art and has two additional benefits. First, we show that the model learns to focus its attention on more meaningful chemical groups when making predictions with ontology pre-training than without, paving a path towards greater robustness and interpretability. Second, training time is reduced after ontology pre-training, indicating that the pre-trained model is better placed to learn what matters for toxicity prediction. This strategy is generally applicable as a neuro-symbolic approach to embedding meaningful semantics into neural networks.
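The abstract does not say how the class-membership targets for ontology pre-training are constructed. As a minimal sketch of one plausible construction, the snippet below expands a molecule's directly asserted classes to all of their ancestors in a subsumption hierarchy and encodes the result as a multi-hot vector, which could serve as a multi-label pre-training target. The toy hierarchy `IS_A`, the class names, and the helper functions `ancestors_inclusive` and `membership_vector` are all hypothetical and chosen only for illustration; they are not taken from the paper.

```python
from typing import Dict, List, Set

# Hypothetical toy hierarchy (assumption, not from the paper):
# each class maps to its direct parent classes (is_a relations).
IS_A: Dict[str, List[str]] = {
    "phenol": ["aromatic compound", "hydroxy compound"],
    "aromatic compound": ["organic compound"],
    "hydroxy compound": ["organic compound"],
    "organic compound": [],
}


def ancestors_inclusive(cls: str, is_a: Dict[str, List[str]]) -> Set[str]:
    """Return cls together with every class reachable via is_a links."""
    seen: Set[str] = set()
    stack = [cls]
    while stack:
        current = stack.pop()
        if current in seen:
            continue
        seen.add(current)
        stack.extend(is_a.get(current, []))
    return seen


def membership_vector(
    direct_classes: List[str],
    is_a: Dict[str, List[str]],
    label_order: List[str],
) -> List[int]:
    """Multi-hot target: 1 for every class the molecule belongs to,
    including classes implied by the hierarchy, 0 otherwise."""
    members: Set[str] = set()
    for cls in direct_classes:
        members |= ancestors_inclusive(cls, is_a)
    return [1 if label in members else 0 for label in label_order]


# Fix the label order once so every molecule gets a comparable vector.
LABELS = sorted(IS_A)

phenol_target = membership_vector(["phenol"], IS_A, LABELS)
hydroxy_target = membership_vector(["hydroxy compound"], IS_A, LABELS)
```

Under this sketch, the pre-training stage would fit the network against such vectors with a multi-label loss, and the fine-tuning stage would keep the learned encoder while replacing the output layer with one sized for the toxicity labels. Both steps are assumptions about a typical setup rather than a description of the authors' implementation.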
