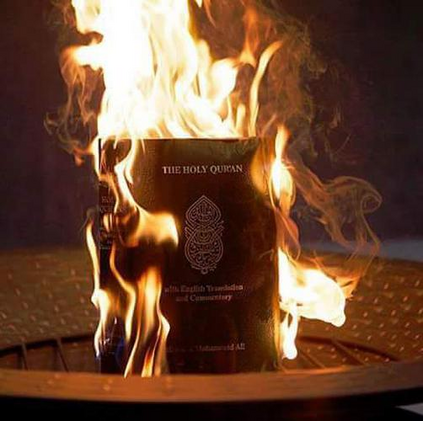

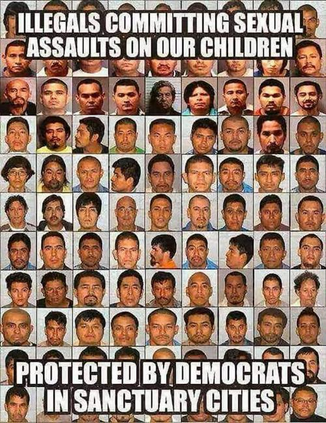

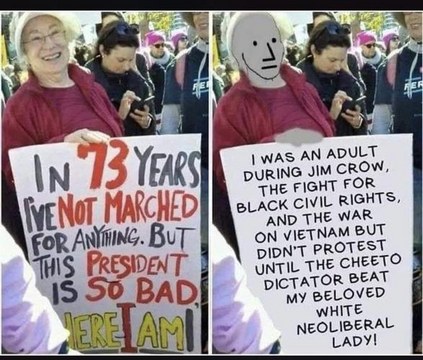

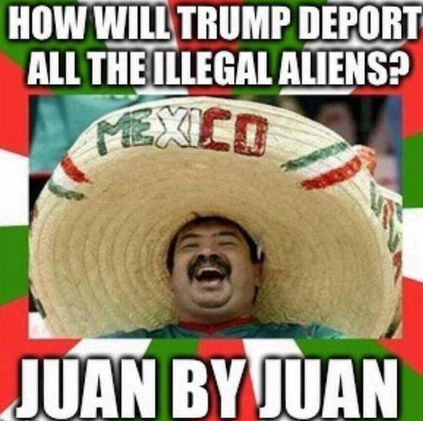

Accurate detection and classification of online hate is a difficult task. Implicit hate is particularly challenging because such content tends to have unusual syntax, polysemic words, and fewer markers of prejudice (e.g., slurs). The problem is heightened with multimodal content, such as memes (combinations of text and images), which is often harder to decipher than unimodal content (e.g., text alone). This paper evaluates the role of semantic and multimodal context in detecting implicit and explicit hate. We show that both text and visual enrichment improve model performance, with the multimodal model achieving the highest F1 score (0.771) compared with the other models (0.544, 0.737, and 0.754). While the unimodal-text context-aware (transformer) model was the most accurate on the subtask of implicit hate detection, the multimodal model outperformed it overall because of a lower propensity towards false positives. We find that all models perform better on content with full annotator agreement and that multimodal models are best at classifying content on which annotators disagree. To conduct these investigations, we undertook high-quality annotation of a sample of 5,000 multimodal entries. Tweets were annotated for primary category, modality, and strategy. We make this corpus, along with the codebook, code, and final model, freely available.
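To illustrate how a lower propensity towards false positives can lift an overall F1 score even when another model catches more hateful items, the following minimal sketch computes F1 from raw confusion counts. It assumes a binary F1 on the hateful class; the abstract does not say which averaging the reported scores use. The function name `f1_score` and all counts are hypothetical and are not taken from the paper.

```python
def f1_score(tp: int, fp: int, fn: int) -> float:
    """Binary F1 (harmonic mean of precision and recall) from raw counts."""
    if tp == 0:
        return 0.0  # no true positives: precision and recall are both zero
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for illustration only (NOT results from the paper).
# Model A finds more hateful items (higher recall) but over-flags benign ones.
model_a = f1_score(tp=90, fp=60, fn=10)
# Model B misses a few more hateful items but raises far fewer false alarms.
model_b = f1_score(tp=85, fp=20, fn=15)

print(f"model A F1: {model_a:.3f}")
print(f"model B F1: {model_b:.3f}")
```

With these made-up counts, model B scores higher overall despite its lower recall, because the gain in precision outweighs the items it misses.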
