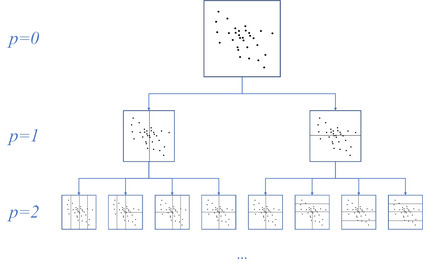

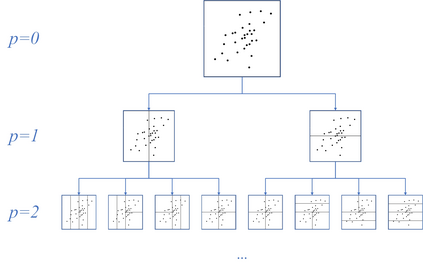

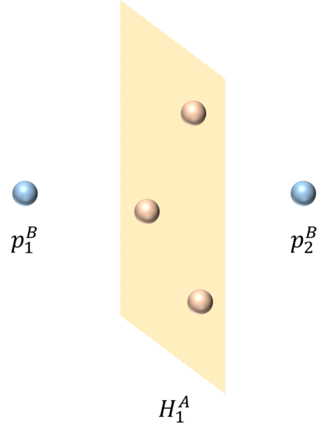

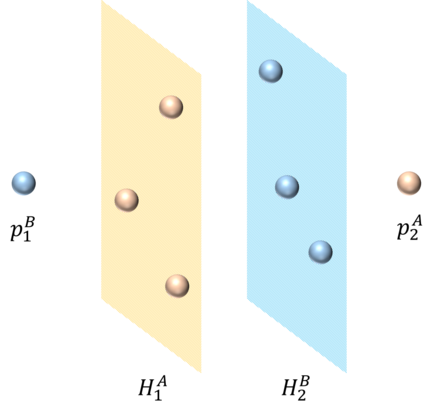

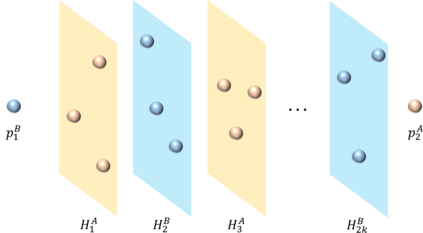

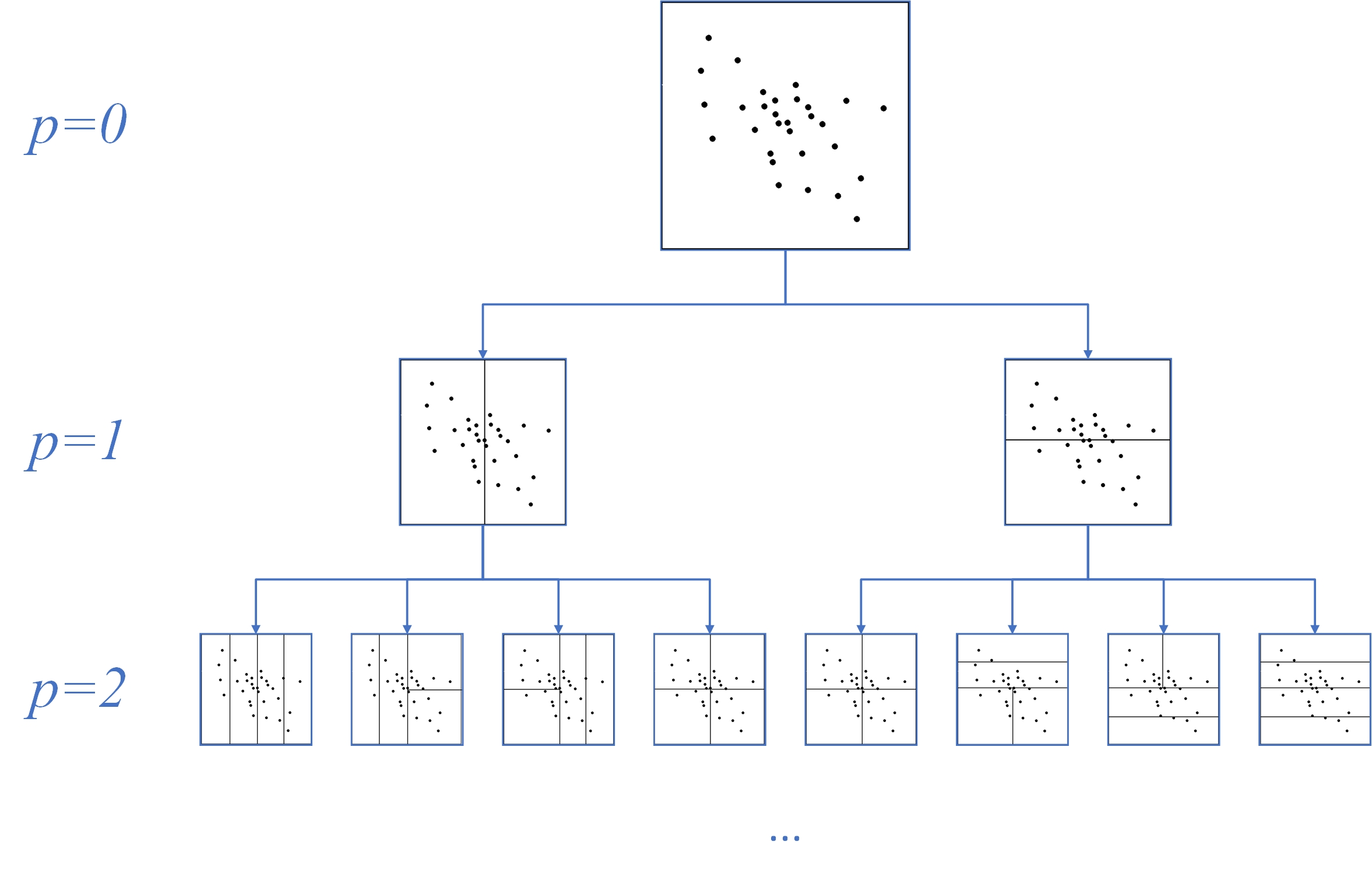

In this paper, we propose a gradient boosting algorithm for large-scale regression problems, called *Gradient Boosted Binary Histogram Ensemble* (GBBHE), which is based on binary histogram partition and ensemble learning. From the theoretical perspective, by assuming Hölder continuity of the target function, we establish the statistical convergence rates of GBBHE in the spaces $C^{0,\alpha}$ and $C^{1,0}$. A lower bound on the convergence rate of the base learner demonstrates the advantage of boosting. Moreover, in the space $C^{1,0}$, we prove that the number of iterations needed to achieve the fast convergence rate can be reduced by using an ensemble regressor as the base learner, which improves computational efficiency. In the experiments, compared with other state-of-the-art algorithms such as gradient boosted regression trees (GBRT), Breiman's forest, and kernel-based methods, our GBBHE algorithm shows promising performance with less running time on large-scale datasets.
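To make the general idea concrete, the following is a minimal Python sketch of gradient boosting with an ensemble of histogram regressors as the base learner. It is not the authors' implementation. The abstract does not specify the partition rule, the loss, or any hyperparameters, so everything below is an assumption made for illustration: the partition halves every cell along one randomly chosen axis per round, the loss is squared error (so the negative gradient is the residual), and the names `RandomMidpointHistogram`, `fit_gbbhe_sketch`, `predict_gbbhe_sketch`, `n_ensemble`, `depth`, and `lr` are hypothetical.

```python
import numpy as np


class RandomMidpointHistogram:
    """Piecewise-constant regressor on a random dyadic partition (assumed construction)."""

    def __init__(self, depth, rng):
        self.depth = depth
        self.rng = rng

    def _cells(self, X):
        # Scale to the unit box, then bin coordinate j into 2**splits[j] equal bins.
        Z = (X - self.lo) / self.width
        bins = 2 ** self.splits
        idx = np.clip(np.floor(Z * bins).astype(int), 0, bins - 1)
        return np.ravel_multi_index(tuple(idx.T), tuple(bins))

    def fit(self, X, r):
        d = X.shape[1]
        self.lo = X.min(axis=0)
        self.width = np.maximum(X.max(axis=0) - self.lo, 1e-12)
        # Each of the `depth` rounds halves every cell along one random axis.
        self.splits = np.bincount(self.rng.integers(0, d, self.depth), minlength=d)
        cells = self._cells(X)
        n_cells = 2 ** self.depth
        sums = np.bincount(cells, weights=r, minlength=n_cells)
        counts = np.bincount(cells, minlength=n_cells)
        # Cell value is the mean residual; empty cells predict 0 (no correction).
        self.values = np.divide(sums, counts, out=np.zeros(n_cells), where=counts > 0)
        return self

    def predict(self, X):
        return self.values[self._cells(X)]


def fit_gbbhe_sketch(X, y, n_rounds=50, n_ensemble=5, depth=6, lr=0.2, seed=0):
    """Boost on squared-loss residuals; each stage averages several random histograms."""
    rng = np.random.default_rng(seed)
    init = float(np.mean(y))
    pred = np.full(len(y), init)
    stages = []
    for _ in range(n_rounds):
        residual = y - pred  # negative gradient of the squared loss (assumed loss)
        ensemble = [RandomMidpointHistogram(depth, rng).fit(X, residual)
                    for _ in range(n_ensemble)]
        pred += lr * np.mean([h.predict(X) for h in ensemble], axis=0)
        stages.append(ensemble)
    return init, lr, stages


def predict_gbbhe_sketch(model, X):
    init, lr, stages = model
    pred = np.full(len(X), init)
    for ensemble in stages:
        pred += lr * np.mean([h.predict(X) for h in ensemble], axis=0)
    return pred
```

In this sketch, averaging `n_ensemble` independently randomized histograms at each stage smooths the piecewise-constant base learner. This mirrors, only loosely, the abstract's point that an ensemble base learner can reduce the number of boosting iterations needed.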
