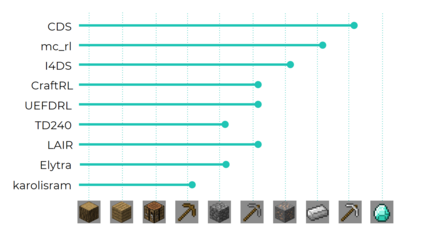

To facilitate research on sample-efficient reinforcement learning, we held the MineRL Competition on Sample-Efficient Reinforcement Learning Using Human Priors at the Thirty-third Conference on Neural Information Processing Systems (NeurIPS 2019). The primary goal of this competition was to promote the development of algorithms that combine human demonstrations with reinforcement learning to reduce the number of samples needed to solve complex, hierarchical, sparse-reward environments. We describe the competition and provide an overview of the top solutions, each of which uses deep reinforcement learning, imitation learning, or both. We also discuss how our organizational decisions affected the competition and outline future directions for improvement.
