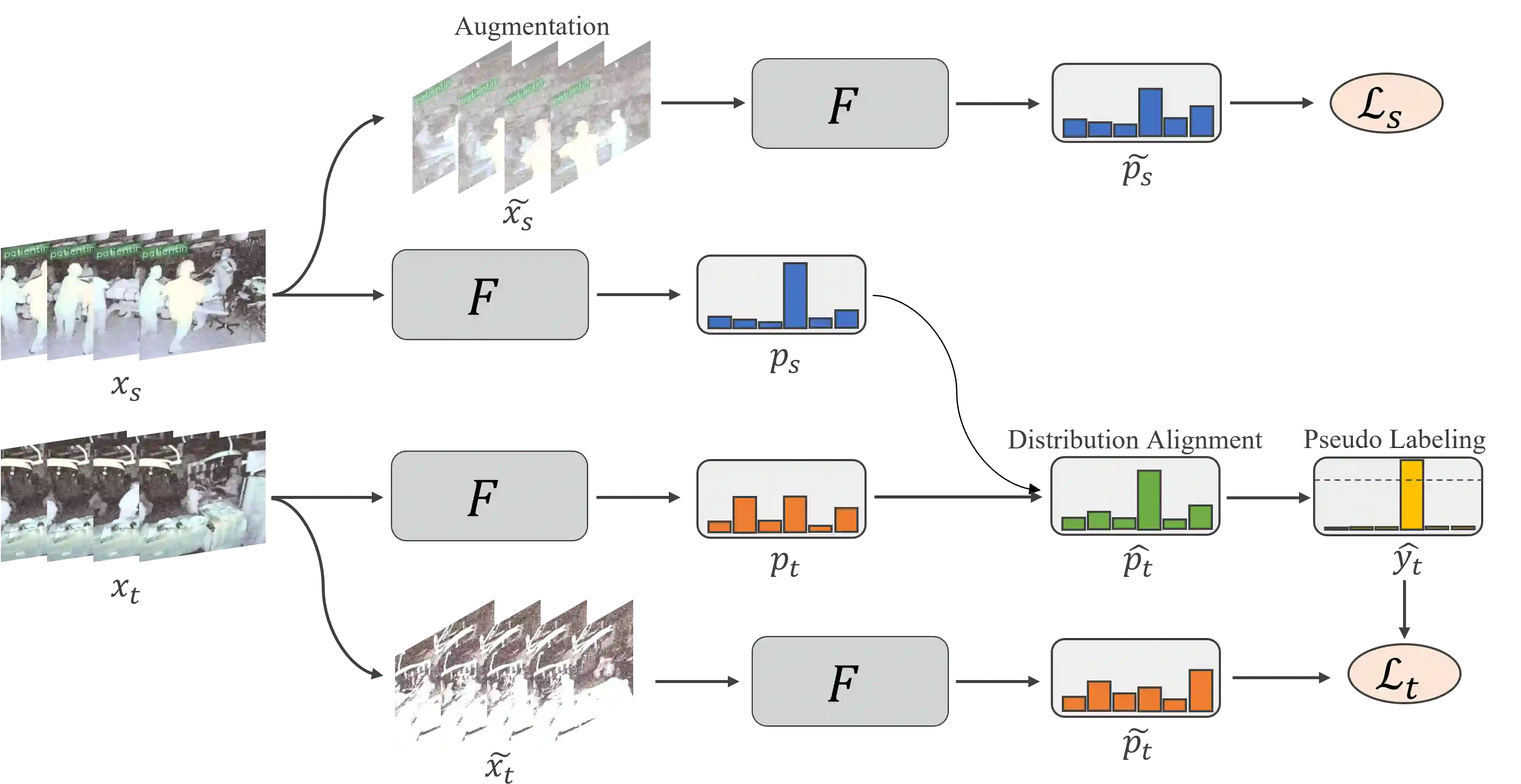

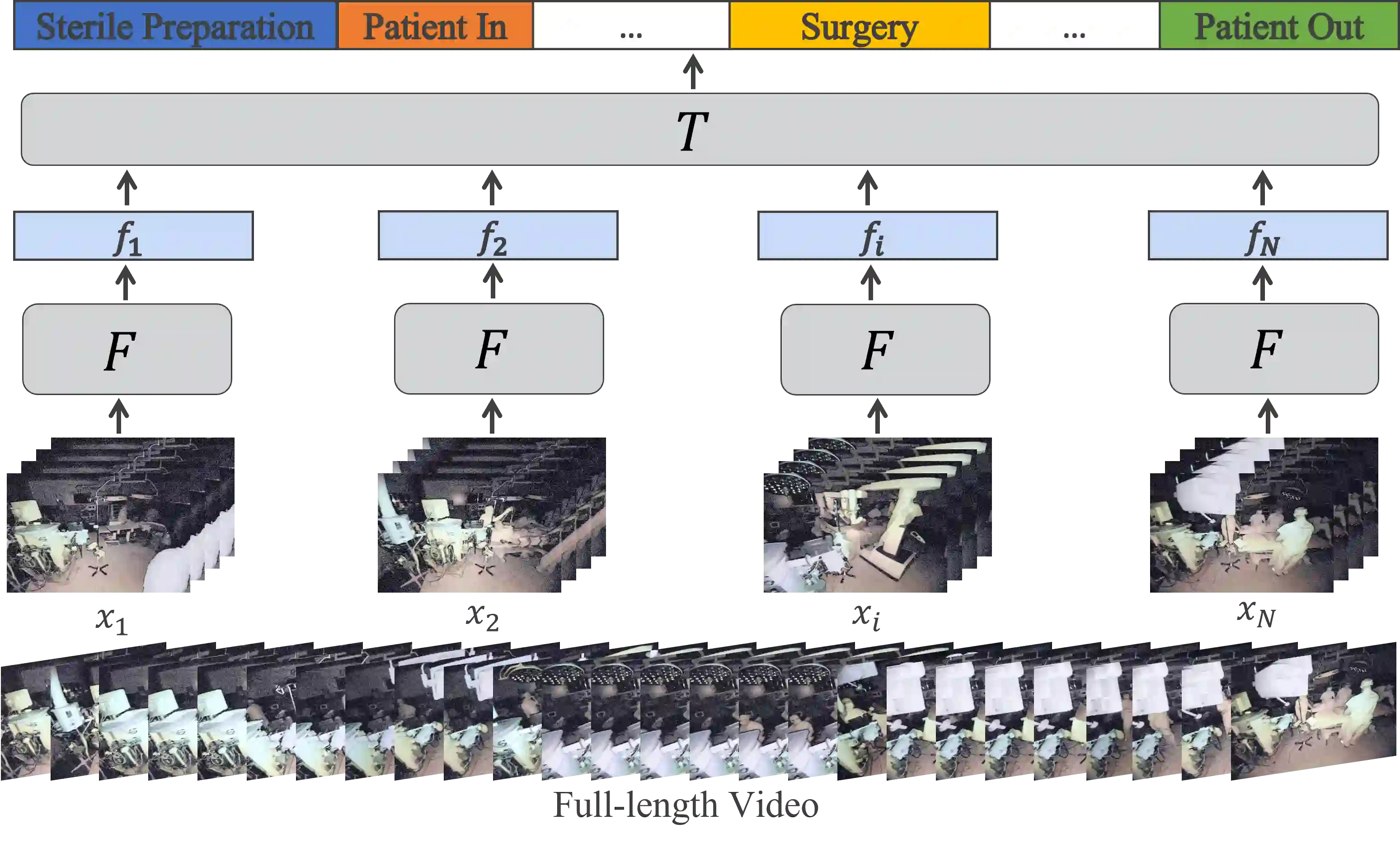

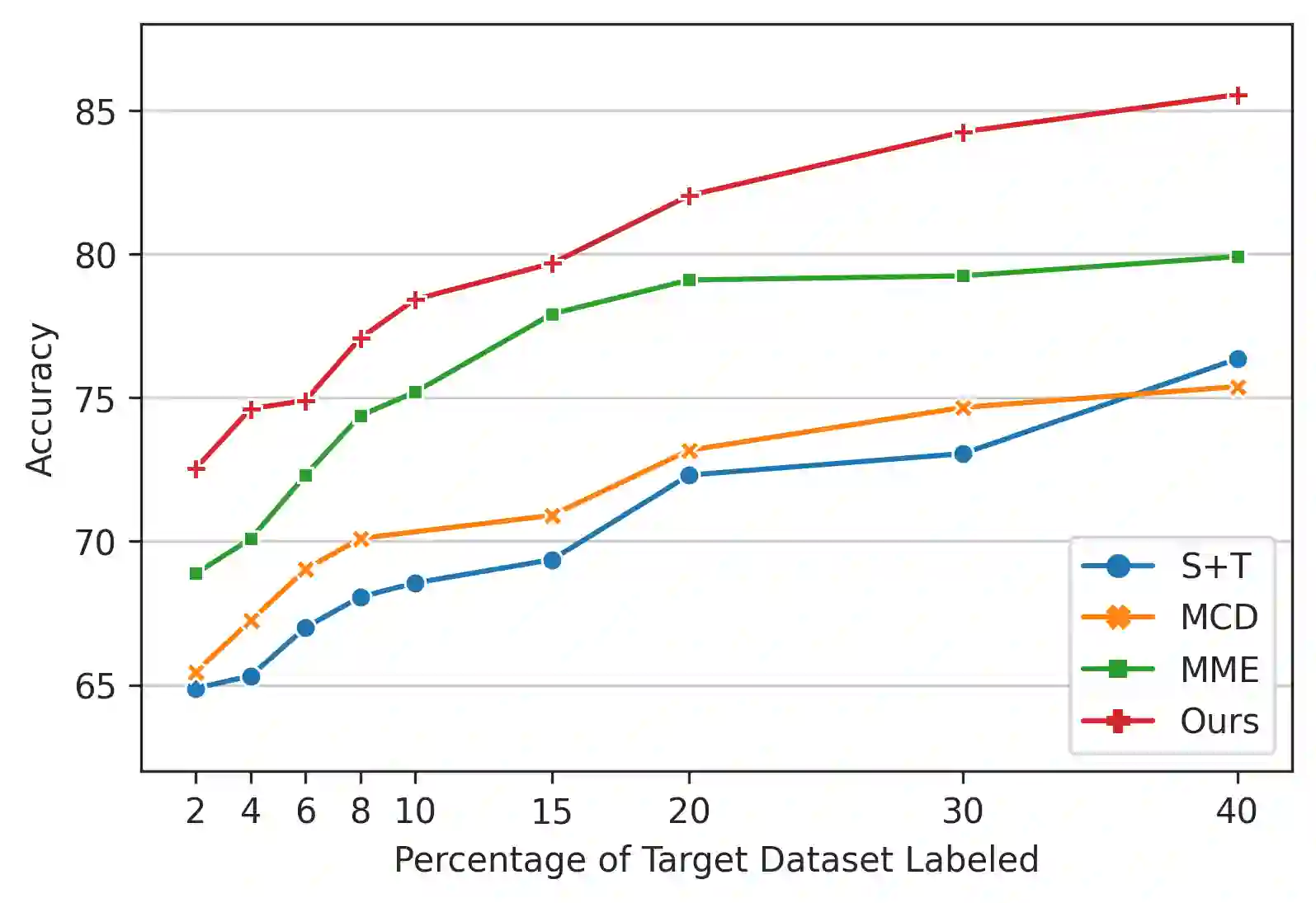

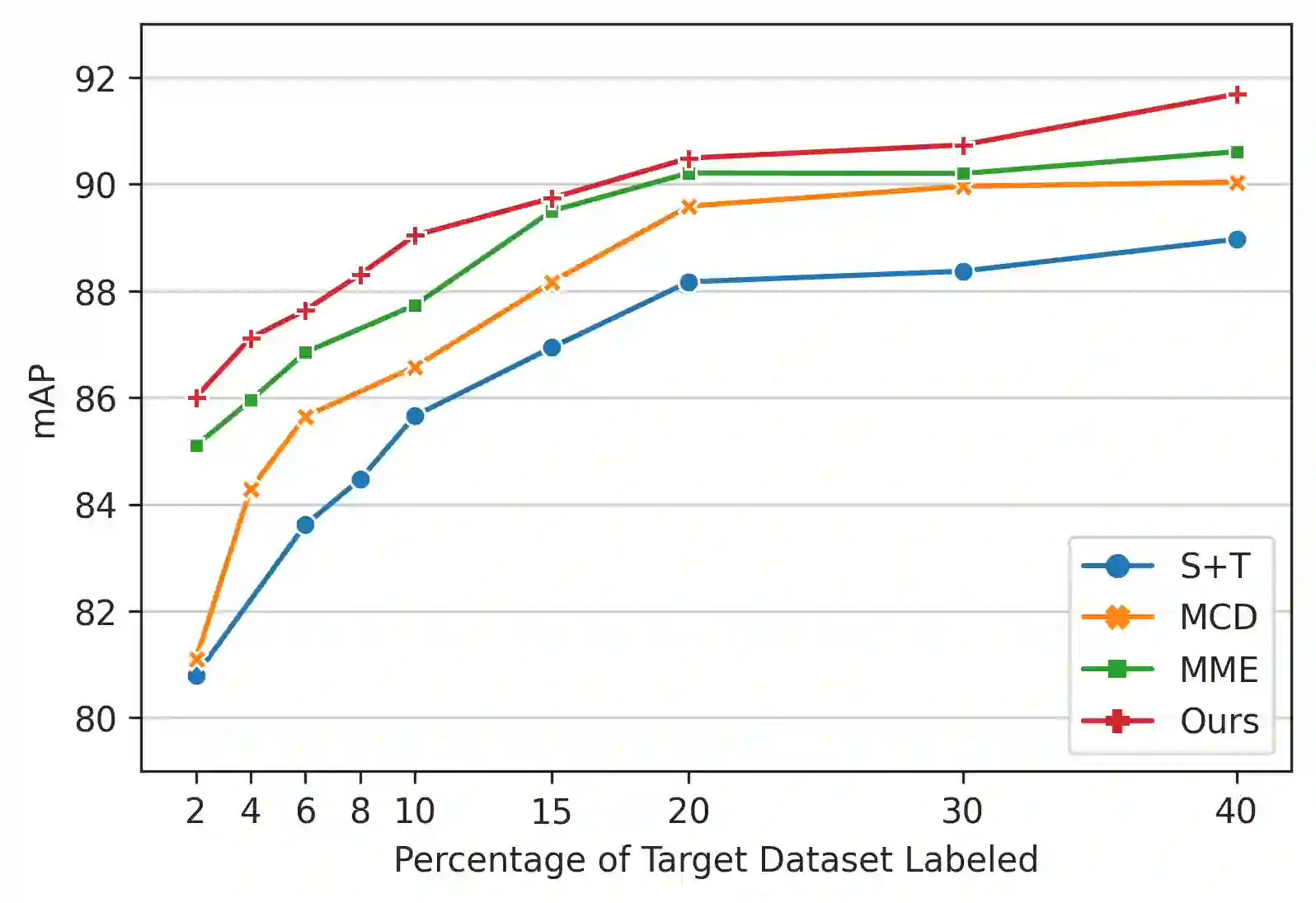

Automatic surgical activity recognition enables more intelligent surgical devices and a more efficient workflow. Integrating this technology into new operating rooms has the potential to improve care delivery to patients and decrease costs. Recent works have achieved promising performance on surgical activity recognition; however, the limited generalizability of these models is one of the critical barriers to wide-scale adoption of the technology. In this work, we study the generalizability of surgical activity recognition models across operating rooms. We propose a new domain adaptation method to improve the performance of a surgical activity recognition model in a new operating room for which only unlabeled videos are available. Our approach generates pseudo labels for the unlabeled video clips on which the model is confident and trains the model on augmented versions of those clips. We extend our method to a semi-supervised domain adaptation setting in which a small portion of the target-domain data is also labeled. In our experiments, the proposed method consistently outperforms the baselines on a dataset of more than 480 long surgical videos collected from two operating rooms.
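The pseudo-labeling step described above can be illustrated with a minimal sketch. It assumes the model outputs per-clip class probabilities and that "confident" means the top probability exceeds a fixed threshold. The function name `select_pseudo_labels`, the threshold of 0.9, and the example probabilities are all hypothetical; the abstract does not state the actual selection rule or the augmentations used.

```python
import numpy as np

def select_pseudo_labels(probs, threshold=0.9):
    """Pick confidently predicted clips and their pseudo labels.

    probs: array of shape (num_clips, num_classes) holding class
           probabilities for unlabeled target-domain clips.
    threshold: assumed confidence cutoff (illustrative value only).
    Returns (indices of kept clips, pseudo labels for those clips).
    """
    probs = np.asarray(probs, dtype=float)
    confidence = probs.max(axis=1)   # top class probability per clip
    labels = probs.argmax(axis=1)    # predicted activity class per clip
    keep = np.flatnonzero(confidence >= threshold)
    return keep, labels[keep]

# Hypothetical model outputs for 4 unlabeled clips over 3 activity classes.
probs = np.array([
    [0.95, 0.03, 0.02],
    [0.40, 0.35, 0.25],
    [0.05, 0.92, 0.03],
    [0.10, 0.10, 0.80],
])
keep, pseudo_labels = select_pseudo_labels(probs, threshold=0.9)
```

In a full training loop, the kept clips would then be augmented and fed back to the model with `pseudo_labels` as targets, while the clips below the threshold would be left out of that update.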
