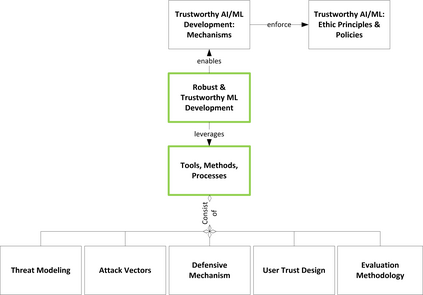

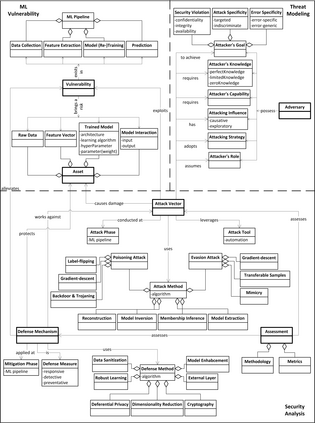

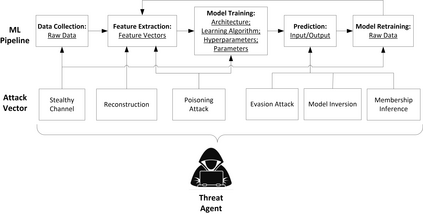

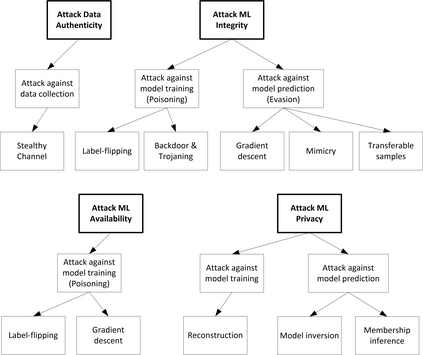

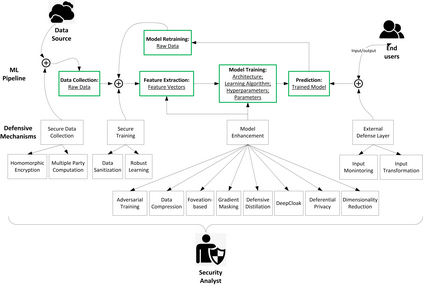

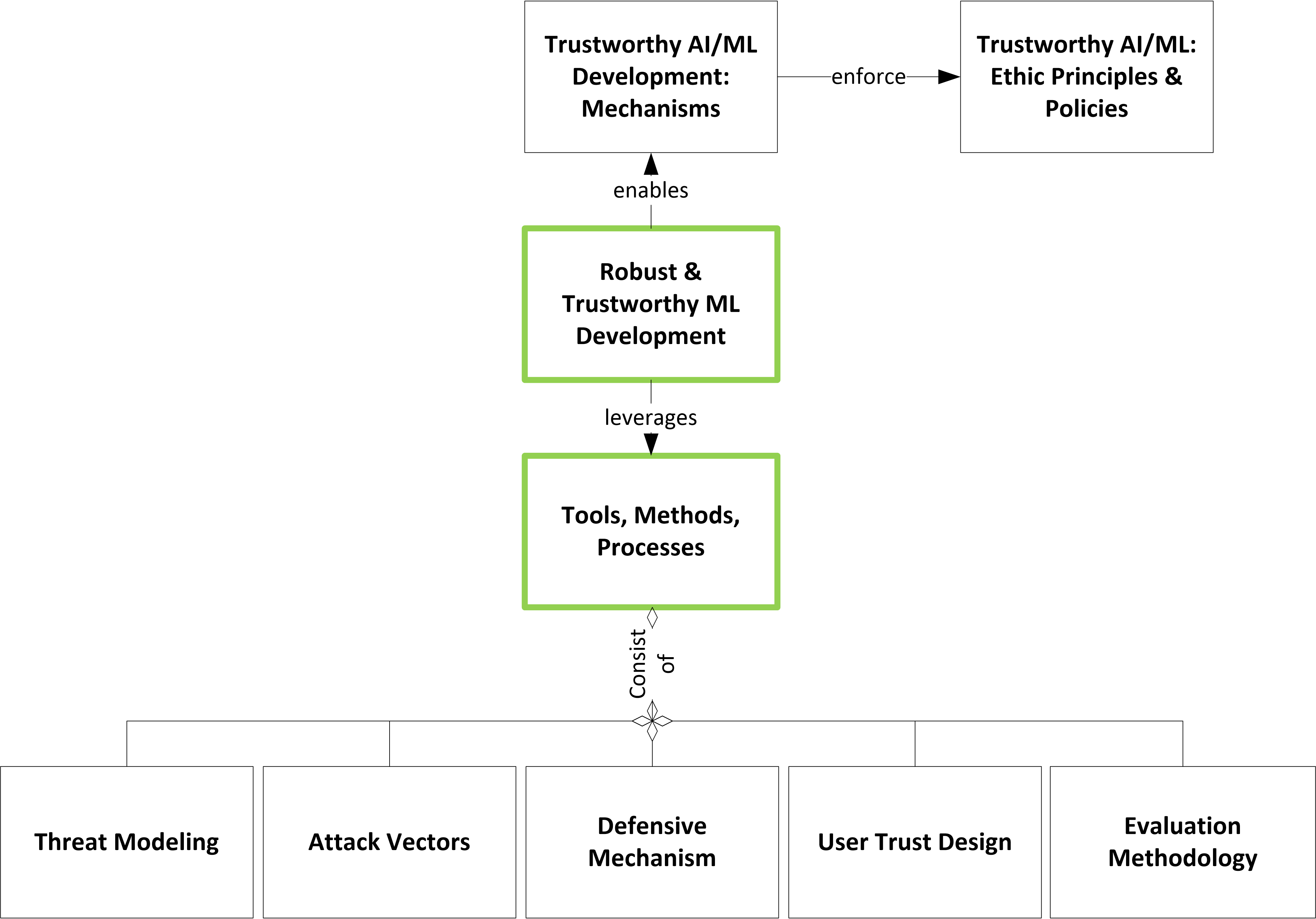

Machine Learning (ML) technologies have been widely adopted in many mission-critical fields, such as cyber security, autonomous vehicle control, and healthcare, to support intelligent decision-making. While ML has demonstrated impressive performance over conventional methods in these applications, concerns have arisen about system resilience against ML-specific security attacks and privacy breaches, as well as about the trust that users place in these systems. In this article, first, we present our recent systematic and comprehensive survey of state-of-the-art ML robustness and trustworthiness technologies from a security engineering perspective. It covers all aspects of secure ML system development, including threat modeling, common offensive and defensive technologies, privacy-preserving machine learning, user trust in the context of machine learning, and empirical evaluation of ML model robustness. Second, we go beyond a survey by describing a metamodel we created that represents this body of knowledge in a standard, visual form for ML practitioners. We further illustrate how to leverage the metamodel to guide a systematic threat analysis and security design process in the context of generic ML system development, which extends and scales up the classic process. Third, we propose future research directions, motivated by our findings, to advance the development of robust and trustworthy ML systems. Our work differs from existing surveys in this area in that, to the best of our knowledge, it is the first engineering effort of its kind to (i) explore the fundamental principles and best practices that support robust and trustworthy ML system development, and (ii) study the interplay between robustness and user trust in the context of ML systems.

翻译:在许多关键任务领域,例如网络安全、自主车辆控制、医疗保健等,广泛采用机器学习技术,这些应用中常规方法的绩效给人留下深刻印象,但人们对系统抵御ML特定安全攻击和隐私侵犯的复原力以及用户对这些系统的信任表示关切。在本篇文章中,首先,我们从安全工程角度介绍我们最近对最先进的ML稳健性和可信赖性技术的系统全面调查,从安全工程角度涵盖安全 ML系统开发的所有方面,包括威胁建模、共同进攻性和防御性技术、隐私保存用户学习、机器学习方面的用户信任以及ML模型稳健性的经验评估。第二,我们随后推动我们的研究,超越调查范围,说明我们创建的模型,以标准化和直观的方式代表ML从业人员的知识库。我们进一步说明如何利用模型,在通用ML系统开发过程中指导系统的系统性威胁分析和安全设计过程,该模型扩展和扩展了典型的流程。 第三,我们建议今后在机器学习过程中,以最可靠的研究方向为基础,从我们的现有研究方向,从ML领域,从我们的现有研究方向,从我们最可靠的研究领域,到最可靠的研究领域,从我们最可靠的M系统,到最可靠的研究领域,从我们的现有研究方向,到最可靠的研究领域,从我们最可靠的研究领域,到最可靠的研究领域,从M系统。