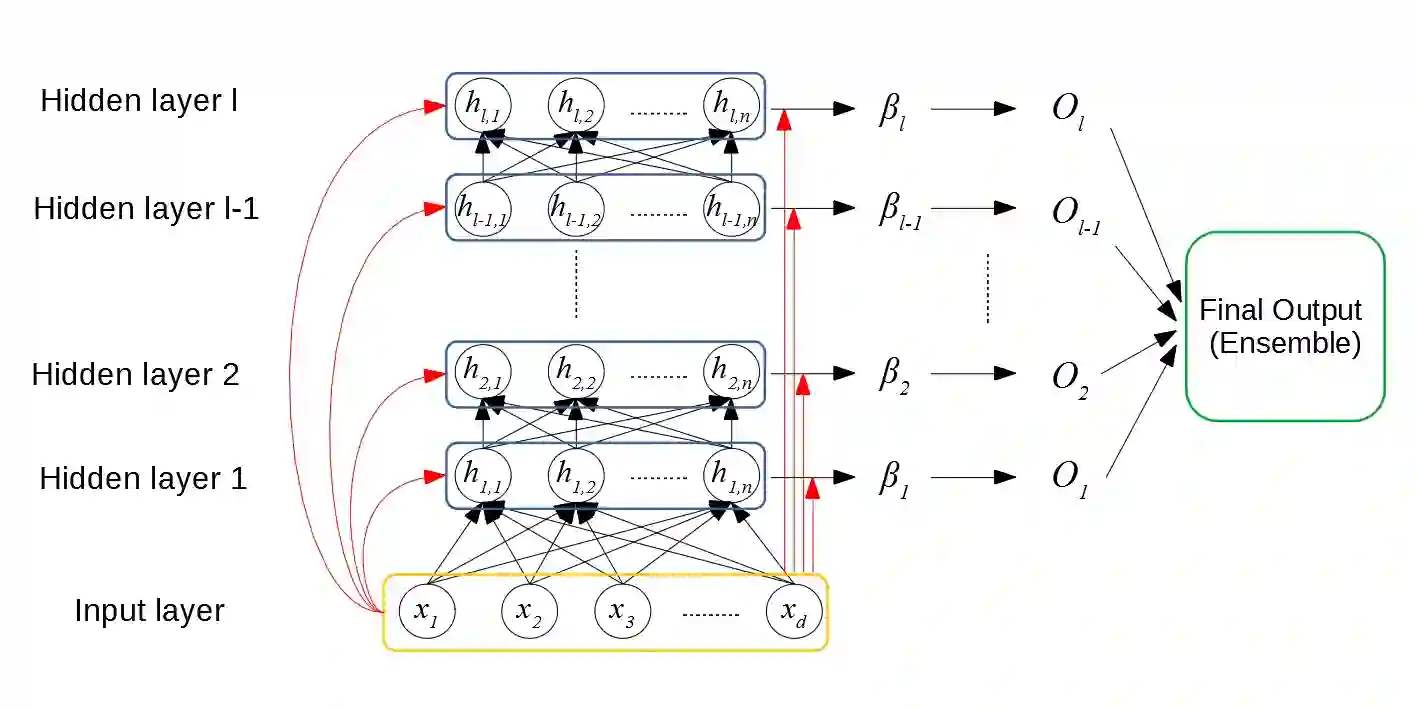

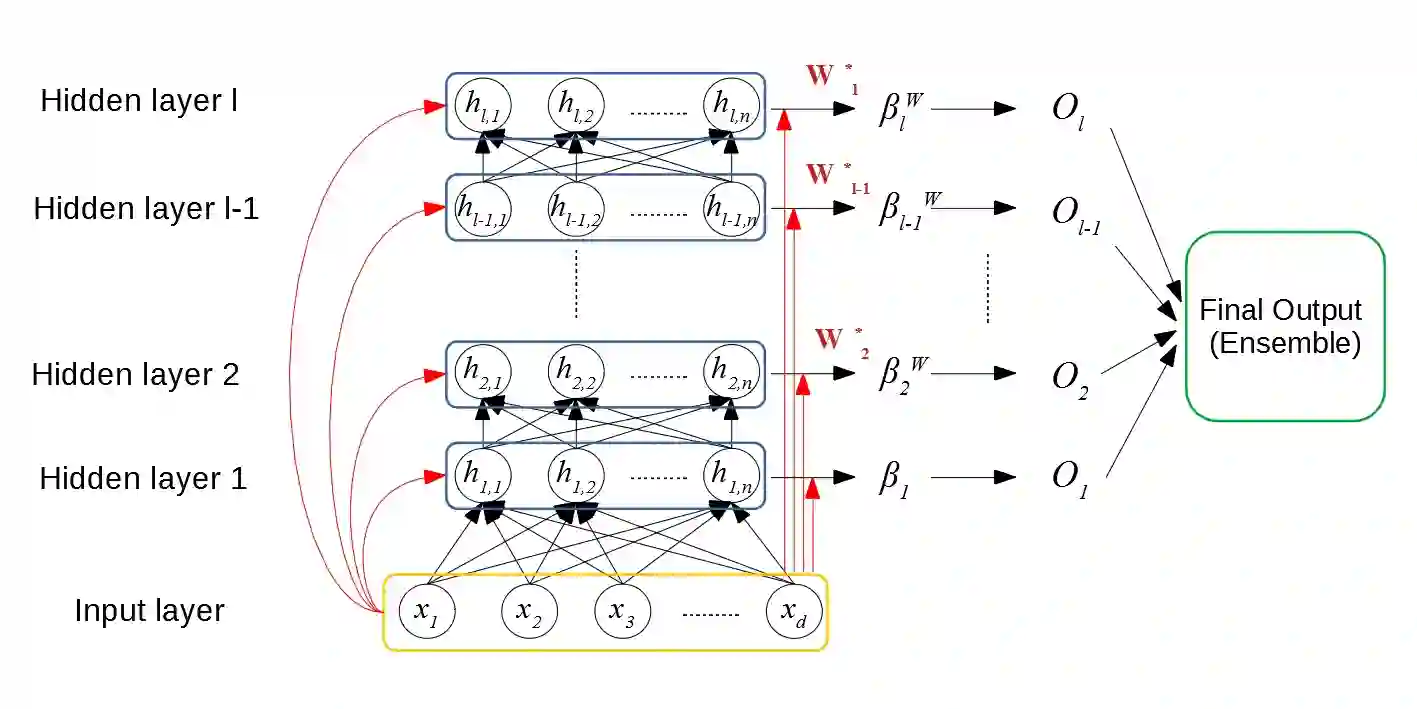

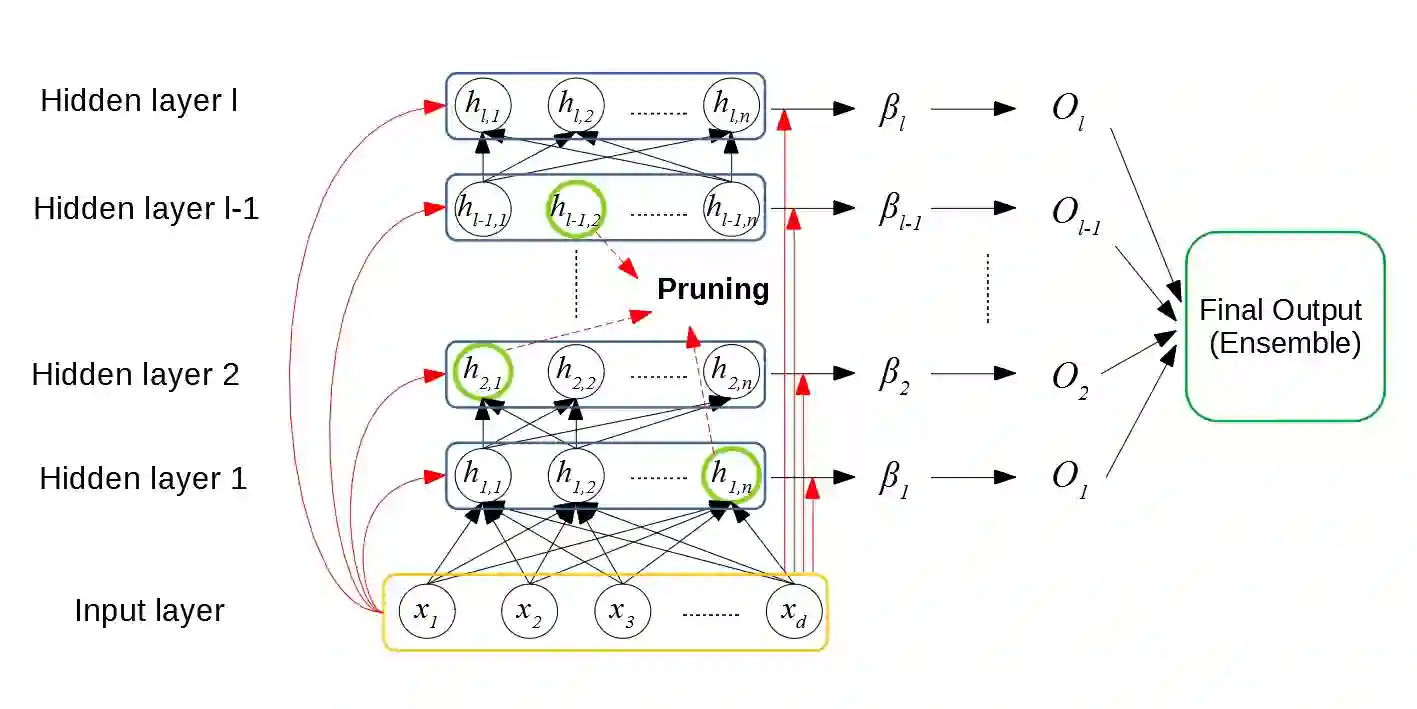

In this paper, we first introduce batch normalization to the Ensemble Deep Random Vector Functional Link (edRVFL) network. This re-normalization method helps the network avoid divergence of the hidden features. We then propose novel variants of edRVFL. Weighted edRVFL (WedRVFL) uses weighting methods to assign training samples different weights in different layers according to how confidently they were classified in the previous layer, thereby increasing the ensemble's diversity and accuracy. Furthermore, we propose a pruning-based edRVFL (PedRVFL), which prunes inferior neurons according to their importance for classification before generating the next hidden layer. This ensures that randomly generated inferior features do not propagate to deeper layers. Finally, we combine weighting and pruning in the Weighting and Pruning based Ensemble Deep Random Vector Functional Link Network (WPedRVFL). We compare the performance of these methods with other state-of-the-art deep feedforward neural networks (FNNs) on 24 tabular UCI classification datasets. The experimental results illustrate the superior performance of our proposed methods.
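The abstract names the ingredients (randomly generated hidden layers, batch normalization, per-layer sample weighting, neuron pruning, and an ensemble over layers) but gives no formulas. The following is a minimal NumPy sketch of how they could fit together, written under our own assumptions rather than as the paper's method. Assumed details: batch normalization is a plain column-wise standardization of pre-activations using training statistics (no learnable scale or shift); output weights come from closed-form weighted ridge regression on the direct link plus hidden features; neuron importance is the norm of a neuron's output weights; and the next layer's sample weights follow the placeholder rule `w = 2 - p_true`, where `p_true` is the softmax score of the true class. The names `WPedRVFLSketch`, `standardize`, `weighted_ridge`, and `keep_ratio` are hypothetical.

```python
import numpy as np


def standardize(Z, stats=None, eps=1e-5):
    # Assumed batch-norm variant: column-wise standardization, no learnable scale/shift.
    # Training statistics are returned so prediction can reuse them.
    if stats is None:
        stats = (Z.mean(axis=0), Z.std(axis=0) + eps)
    mu, sigma = stats
    return (Z - mu) / sigma, stats


def weighted_ridge(D, Y, w, lam):
    # Closed form of min_B sum_i w_i ||D_i B - Y_i||^2 + lam ||B||^2.
    Dw = D * w[:, None]  # diag(w) @ D
    A = D.T @ Dw + lam * np.eye(D.shape[1])
    return np.linalg.solve(A, Dw.T @ Y)


def softmax(S):
    S = S - S.max(axis=1, keepdims=True)
    E = np.exp(S)
    return E / E.sum(axis=1, keepdims=True)


class WPedRVFLSketch:
    """Hypothetical weighting + pruning edRVFL sketch (not the paper's code)."""

    def __init__(self, n_layers=3, n_hidden=64, lam=1e-2, keep_ratio=0.7, seed=0):
        self.n_layers, self.n_hidden = n_layers, n_hidden
        self.lam, self.keep_ratio = lam, keep_ratio
        self.rng = np.random.default_rng(seed)

    def _hidden(self, X, H_prev, layer, stats=None):
        # Layer 1 sees X; deeper layers see [X, pruned features of the previous layer].
        inp = X if H_prev is None else np.hstack([X, H_prev])
        Z, stats = standardize(inp @ layer["W"] + layer["b"], stats)
        return np.maximum(Z, 0.0), stats  # ReLU activation (assumed)

    def fit(self, X, y):
        Y = np.eye(int(y.max()) + 1)[y]  # one-hot targets
        w = np.ones(len(X))              # uniform sample weights for layer 1
        self.layers, H_prev = [], None
        for _ in range(self.n_layers):
            d_in = X.shape[1] + (0 if H_prev is None else H_prev.shape[1])
            # Random, untrained input weights and biases, as in RVFL.
            layer = {"W": self.rng.uniform(-1, 1, (d_in, self.n_hidden)),
                     "b": self.rng.uniform(-1, 1, self.n_hidden)}
            H, layer["stats"] = self._hidden(X, H_prev, layer)
            beta = weighted_ridge(np.hstack([X, H]), Y, w, self.lam)
            # Pruning: keep the hidden neurons whose output weights have the largest norm.
            importance = np.linalg.norm(beta[X.shape[1]:], axis=1)
            k = max(1, int(self.keep_ratio * self.n_hidden))
            layer["keep"] = np.sort(np.argsort(importance)[-k:])
            H = H[:, layer["keep"]]
            D = np.hstack([X, H])
            layer["beta"] = weighted_ridge(D, Y, w, self.lam)  # refit on kept neurons
            self.layers.append(layer)
            # Weighting: low-confidence samples get larger weights in the next layer.
            p_true = softmax(D @ layer["beta"])[np.arange(len(y)), y]
            w = 2.0 - p_true
            w /= w.mean()
            H_prev = H  # only the pruned features propagate deeper
        return self

    def predict(self, X):
        # Ensemble: average the per-layer softmax outputs, then take the argmax.
        H_prev, scores = None, 0.0
        for layer in self.layers:
            H, _ = self._hidden(X, H_prev, layer, layer["stats"])
            H = H[:, layer["keep"]]
            scores = scores + softmax(np.hstack([X, H]) @ layer["beta"])
            H_prev = H
        return scores.argmax(axis=1)
```

As a usage example, `WPedRVFLSketch(n_layers=3, n_hidden=32).fit(X, y).predict(X_test)` trains on integer labels `y` and returns class indices. Refitting the output weights after pruning keeps each layer's prediction consistent with the features it passes on; setting `keep_ratio=1.0` and fixing `w` at ones would reduce the sketch to a plain batch-normalized edRVFL.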

翻译:在本文中, 我们首先将批量正常化引入 edRVFL 网络 。 这种重新归正方法可以帮助网络避免隐藏特性的差异 。 然后, 我们提出新变体“ 集合深随机矢量功能链接( edRVFL) ” 。 经过加权的 edRVFL (WedRVFL) 使用加权方法, 使不同层次的样本培训不同重量, 根据样品在上层的可靠分类方式, 从而增加共性的多样性和准确性 。 此外, 还提议了一个基于运行的 edRVFL( PedRVFLL) ( PedRVFL) (PedRVFL) (PedRVFL) (PedRVFL) 。 我们根据它们对于分类的重要性, 在生成下一个隐藏层之前, 我们根据它们对于分类的重要性, 开发了一些低级神经元的精度神经元。 我们通过这个方法确保随机生成的低级特征不会扩散到更深层 。 随后, 提议了基于 深层矢测的 深层矢测线网络( FNNS ) 测试结果 。