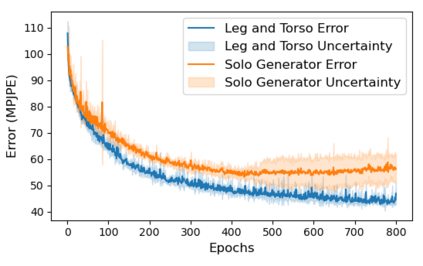

This paper addresses the problem of 2D pose representation during unsupervised 2D-to-3D pose lifting, with the aim of improving the accuracy, stability and generalisability of 3D human pose estimation (HPE) models. All unsupervised 2D-3D HPE approaches provide the entire 2D kinematic skeleton to a model during training. We argue that this is sub-optimal and disruptive, as long-range correlations are induced between independent 2D key points and predicted 3D ordinates during training. To this end, we conduct the following study: with a maximum architecture capacity of 6 residual blocks, we evaluate the performance of 5 models, each of which represents a 2D pose differently during the adversarial unsupervised 2D-3D HPE process. Additionally, we show the correlations between 2D key points that are learned during training, highlighting the unintuitive correlations induced when an entire 2D pose is provided to a lifting model. Our results show that the best representation of a 2D pose is two independent segments, the torso and legs, with no features shared between the lifting networks. This approach decreased the average error by 20% on the Human3.6M dataset compared with a model of near-identical parameter count trained on the entire 2D kinematic skeleton. Furthermore, given the complex nature of adversarial learning, we show that this representation can also improve convergence during training, allowing an optimum result to be obtained more often.
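To make the two-segment representation concrete, the following is a minimal sketch and not the authors' implementation. It assumes a 16-joint skeleton, an illustrative joint ordering, arms grouped with the torso, and a lifter that predicts one depth value per key point; none of these details are given in the abstract. The names `TORSO_JOINTS`, `LEG_JOINTS`, `LinearLifter` and `lift_pose` are hypothetical, and a single linear layer stands in for the residual blocks.

```python
import random

# ASSUMPTION: joint indices are illustrative only; the abstract does not
# specify the skeleton layout or which joints belong to each segment.
LEG_JOINTS = [0, 1, 2, 3, 4, 5, 6]                 # pelvis, hips, knees, ankles (assumed)
TORSO_JOINTS = [7, 8, 9, 10, 11, 12, 13, 14, 15]   # spine, head, arms (assumed)


def split_pose(pose_2d):
    """Split a list of (x, y) key points into torso and leg segments."""
    torso = [pose_2d[j] for j in TORSO_JOINTS]
    legs = [pose_2d[j] for j in LEG_JOINTS]
    return torso, legs


class LinearLifter:
    """Hypothetical stand-in for a lifting network.

    Maps a flattened 2D segment to one depth value per key point. A real
    lifter would be a stack of residual blocks trained adversarially; a
    fixed random linear layer keeps this sketch short.
    """

    def __init__(self, n_joints, seed):
        rng = random.Random(seed)
        self.weights = [
            [rng.uniform(-0.1, 0.1) for _ in range(2 * n_joints)]
            for _ in range(n_joints)
        ]

    def __call__(self, segment):
        flat = [coord for point in segment for coord in point]
        return [sum(w * v for w, v in zip(row, flat)) for row in self.weights]


def lift_pose(pose_2d, torso_lifter, leg_lifter):
    """Lift each segment with its own network; no features are shared."""
    torso, legs = split_pose(pose_2d)
    depth = [0.0] * len(pose_2d)
    for j, z in zip(TORSO_JOINTS, torso_lifter(torso)):
        depth[j] = z
    for j, z in zip(LEG_JOINTS, leg_lifter(legs)):
        depth[j] = z
    return [(x, y, z) for (x, y), z in zip(pose_2d, depth)]
```

Because each lifter only ever sees its own segment, moving a leg key point cannot change a predicted torso depth in this sketch. This is the structural property the abstract contrasts with full-skeleton models, where such long-range correlations can be learned.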
