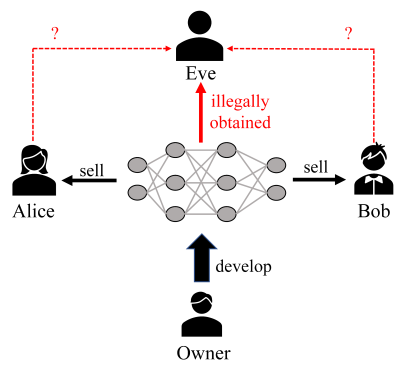

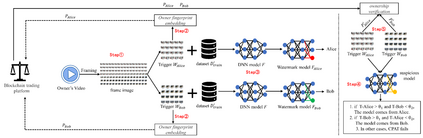

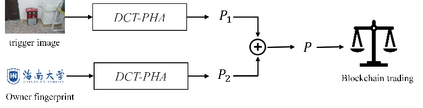

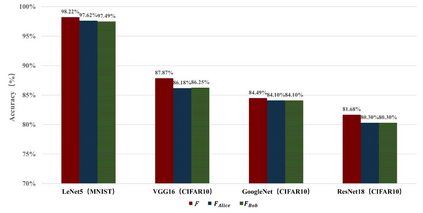

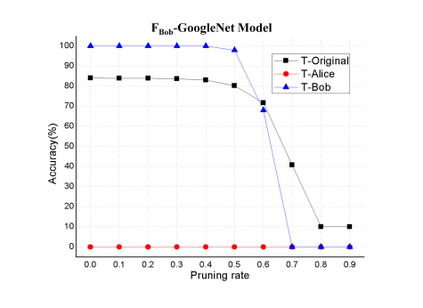

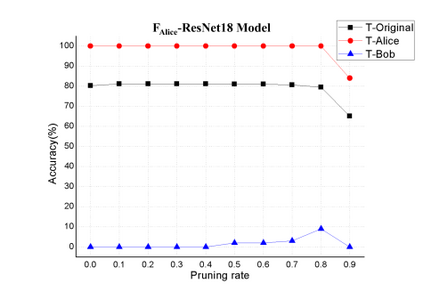

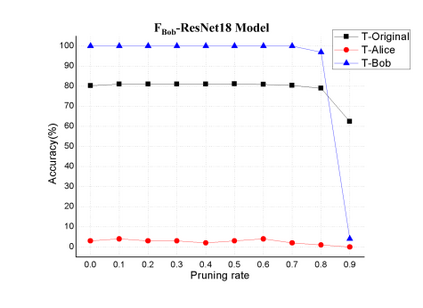

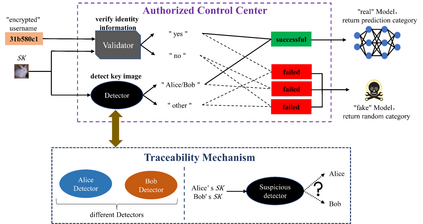

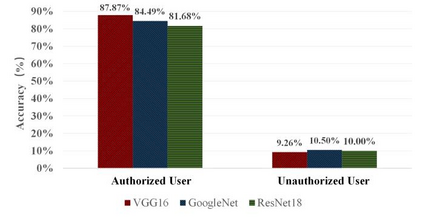

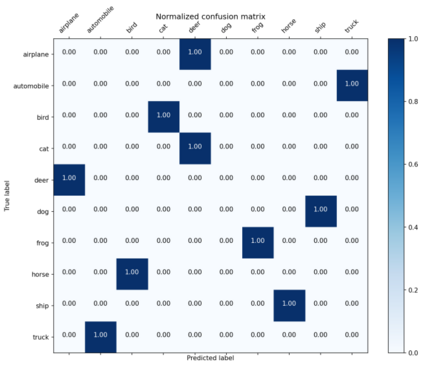

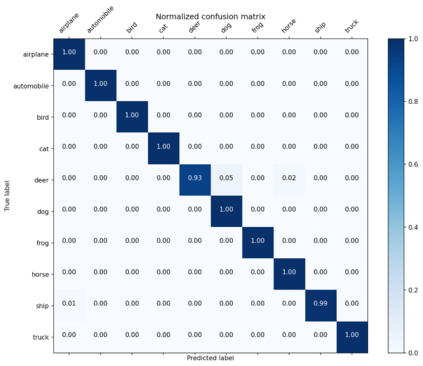

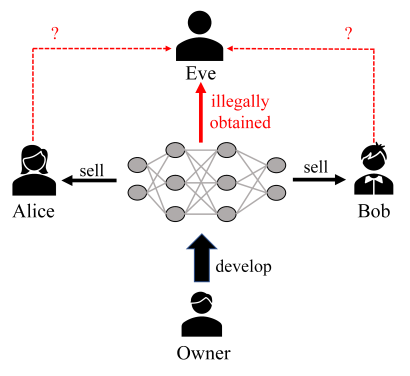

Deep neural networks (DNNs) have achieved tremendous success across artificial intelligence (AI). However, DNN models are easily copied, redistributed, or abused illegally, which seriously damages the interests of model owners. Copyright protection of DNN models through neural network watermarking has been studied, but establishing a traceability mechanism that identifies the authorized users of a leaked model is a new problem driven by the demand for AI services. Existing traceability mechanisms are designed for models without watermarks and generate a small number of false positives, while existing black-box active protection schemes have loose authorization control and are vulnerable to forgery attacks. Building on black-box neural network watermarking combined with video framing and an image perceptual hash algorithm, this study proposes PCPT, a passive copyright protection and traceability framework that uses an additional class of DNN models and improves on the existing traceability mechanisms that yield false positives. The study also proposes ACPT, an active copyright protection and traceability framework for DNN models, which combines an authorization control strategy and the image perceptual hash algorithm with an authorization control center built from a detector and a verifier. ACPT enforces stricter authorization control, establishes a strong connection between users and model owners, and improves the security of the framework. The key samples generated at the same time do not degrade the quality of the original image and support traceability verification.
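Both frameworks rely on an image perceptual hash, which maps visually similar images to nearby bit strings. The abstract does not say which perceptual hash is used, so the sketch below assumes the simplest common variant, the average hash. The function names `average_hash` and `hamming_distance` are illustrative and do not come from the paper.

```python
from typing import List

Gray = List[List[float]]  # grayscale image as rows of pixel intensities


def average_hash(image: Gray, hash_size: int = 8) -> int:
    """Return a (hash_size * hash_size)-bit average hash of a grayscale image.

    Assumption: average hash stands in for the unspecified perceptual hash.
    """
    height, width = len(image), len(image[0])
    if height < hash_size or width < hash_size:
        raise ValueError("image must be at least hash_size x hash_size")

    # Downsample by averaging the pixels that fall into each grid cell.
    cells = []
    for r in range(hash_size):
        r0, r1 = r * height // hash_size, (r + 1) * height // hash_size
        for c in range(hash_size):
            c0, c1 = c * width // hash_size, (c + 1) * width // hash_size
            block = [image[y][x] for y in range(r0, r1) for x in range(c0, c1)]
            cells.append(sum(block) / len(block))

    # Each bit records whether a cell is brighter than the mean of all cells.
    mean = sum(cells) / len(cells)
    bits = 0
    for value in cells:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two hashes."""
    return bin(a ^ b).count("1")


if __name__ == "__main__":
    gradient = [[x + y for x in range(16)] for y in range(16)]
    brighter = [[v + 3 for v in row] for row in gradient]
    inverted = [[30 - v for v in row] for row in gradient]
    print(hamming_distance(average_hash(gradient), average_hash(brighter)))
    print(hamming_distance(average_hash(gradient), average_hash(inverted)))
```

A small Hamming distance between two hashes suggests the images are perceptually close. Under this assumption, a verifier could accept a key sample when the distance falls below a chosen threshold. The threshold value and how it enters the authorization check are design choices that the abstract does not specify.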
