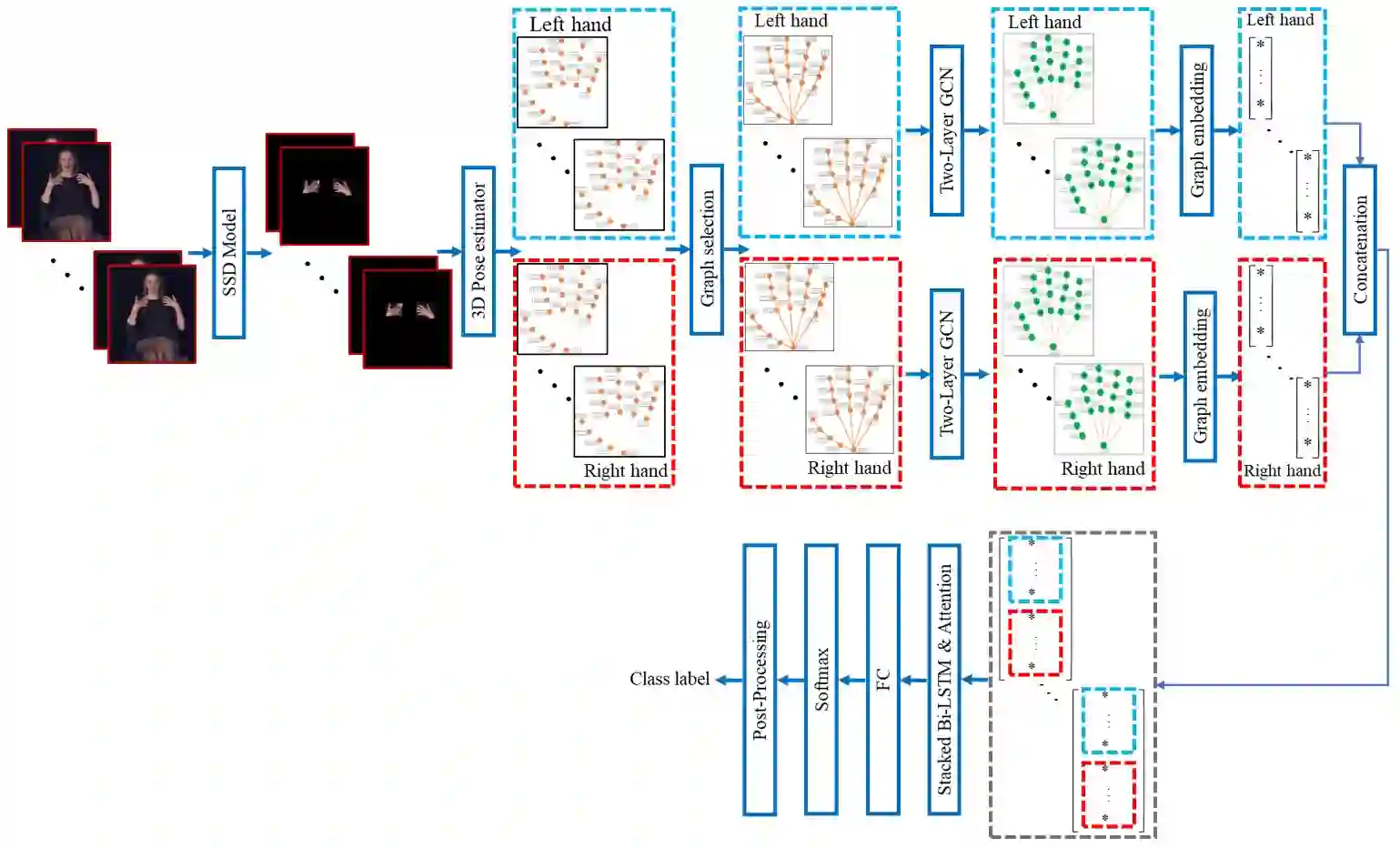

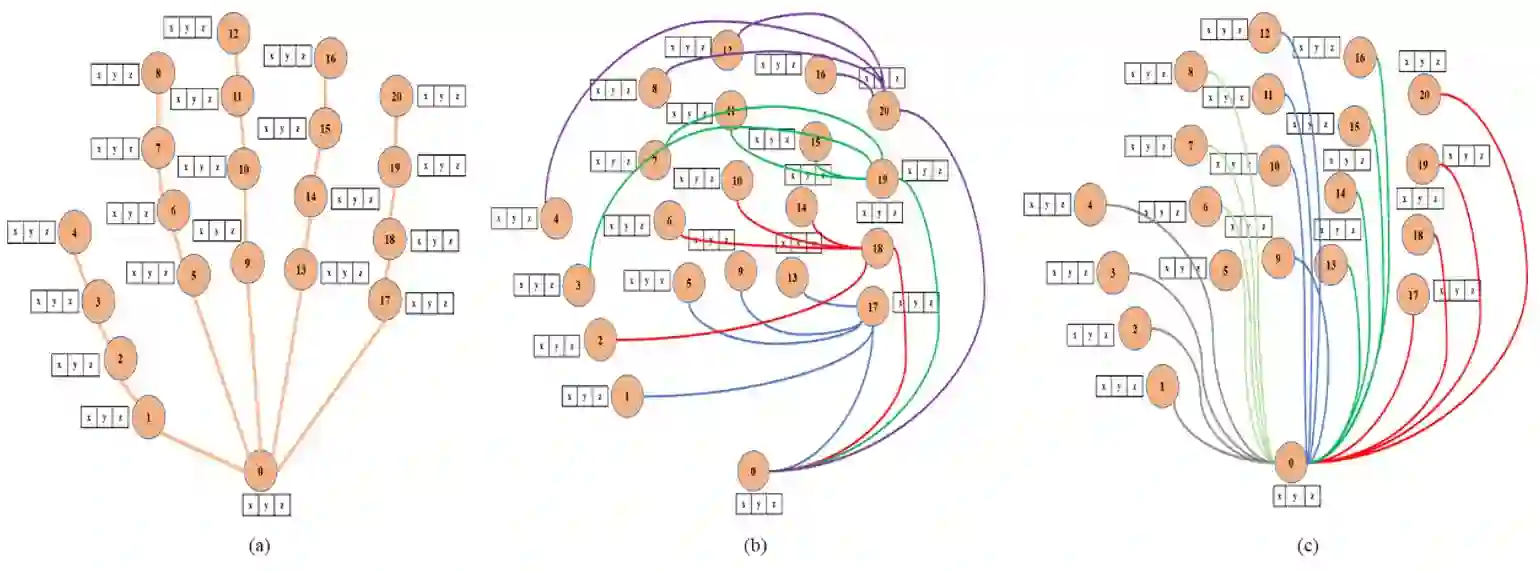

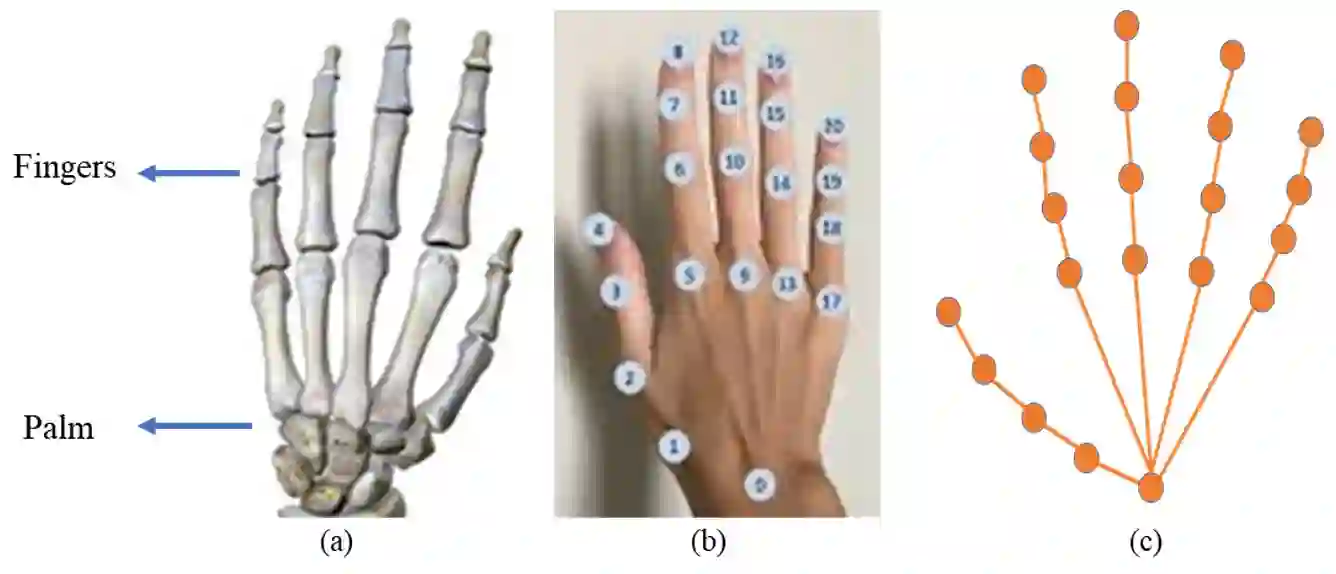

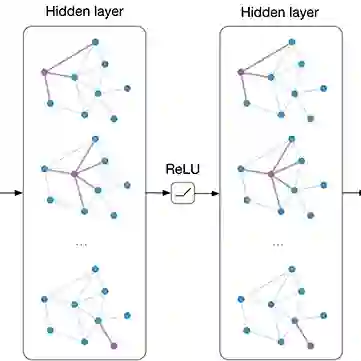

Continuous Hand Gesture Recognition (CHGR) has been studied extensively over the last few decades. Recently, a model was presented to address the challenge of detecting the boundaries of isolated gestures in a continuous gesture video [17]. To improve that model's performance and to replace its handcrafted feature extractor, we propose a GCN model and combine it with stacked Bi-LSTM and Attention modules to capture the temporal information in the video stream. Considering the breakthroughs of GCN models on the skeleton modality, we propose a two-layer GCN model to enrich the 3D hand skeleton features. Finally, the class probabilities of each isolated gesture are fed to the post-processing module, borrowed from [17]. Furthermore, we replace the anatomical graph structure with some non-anatomical graph structures. Because no large dataset includes both continuous gesture sequences and the corresponding isolated gestures, three public datasets in Dynamic Hand Gesture Recognition (DHGR), RKS-PERSIANSIGN, and ASLVID, are used for evaluation. Experimental results show the superiority of the proposed model in detecting isolated gesture boundaries in continuous gesture sequences.
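To make the two-layer GCN idea concrete, the following is a minimal sketch of one forward pass over a hand-skeleton graph. It is not the authors' implementation: the abstract does not state which GCN variant is used, so the symmetric-normalized propagation rule with self-loops is an assumption, as are the toy 5-joint star graph, the layer widths, the random weights, and all function names. The Bi-LSTM, Attention, and post-processing stages are not shown.

```python
import math
import random

def normalized_adjacency(num_nodes, edges):
    """Return D^{-1/2} (A + I) D^{-1/2} as a nested list (assumed GCN variant)."""
    # Start from the identity so every joint keeps a self-loop.
    a = [[1.0 if i == j else 0.0 for j in range(num_nodes)] for i in range(num_nodes)]
    for i, j in edges:
        a[i][j] = a[j][i] = 1.0  # undirected skeleton edge
    deg = [sum(row) for row in a]
    return [[a[i][j] / math.sqrt(deg[i] * deg[j]) for j in range(num_nodes)]
            for i in range(num_nodes)]

def matmul(x, y):
    """Plain-Python matrix product of two nested lists."""
    return [[sum(p * q for p, q in zip(row, col)) for col in zip(*y)] for row in x]

def relu(x):
    return [[max(0.0, v) for v in row] for row in x]

def gcn_two_layer(a_hat, x, w1, w2):
    """Two graph-convolution layers: A_hat @ relu(A_hat @ X @ W1) @ W2."""
    hidden = relu(matmul(matmul(a_hat, x), w1))
    return matmul(matmul(a_hat, hidden), w2)

def random_matrix(rows, cols, rng):
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]

# Hypothetical toy graph: joint 0 (wrist) linked to four fingertip joints.
# A real hand skeleton has more joints; only the edge list would change.
edges = [(0, 1), (0, 2), (0, 3), (0, 4)]
rng = random.Random(0)

a_hat = normalized_adjacency(5, edges)
x = random_matrix(5, 3, rng)    # one frame: 5 joints with (x, y, z) coordinates
w1 = random_matrix(3, 8, rng)   # untrained weights, illustrative sizes
w2 = random_matrix(8, 4, rng)

out = gcn_two_layer(a_hat, x, w1, w2)  # 5 joints, 4 features each
```

In this sketch, replacing the anatomical graph with a non-anatomical one amounts to passing a different `edges` list to `normalized_adjacency`; the rest of the computation is unchanged. In a full pipeline, the per-frame outputs would then be flattened and passed on to the temporal modules.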

