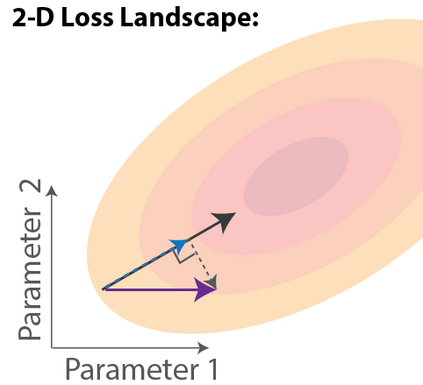

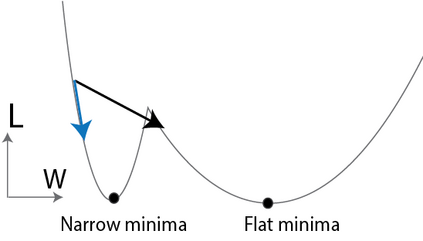

To understand how the brain learns, ongoing work seeks biologically-plausible approximations of gradient descent algorithms for training recurrent neural networks (RNNs). Yet, beyond task accuracy, it is unclear whether such learning rules converge to solutions that generalize differently from those found by their non-biologically-plausible counterparts. Leveraging results from deep learning theory based on loss landscape curvature, we ask: how do biologically-plausible gradient approximations affect generalization? We first demonstrate that state-of-the-art biologically-plausible learning rules for training RNNs exhibit worse and more variable generalization performance than their machine learning counterparts, which follow the true gradient more closely. Next, we verify that this generalization performance is significantly correlated with loss landscape curvature, and we show that biologically-plausible learning rules tend to approach high-curvature regions in synaptic weight space. Using tools from dynamical systems, we derive theoretical arguments and present a theorem explaining this phenomenon. The theorem predicts our numerical results and explains why biologically-plausible rules lead to worse and more variable generalization properties. Finally, we suggest potential remedies that the brain could use to mitigate this effect. To our knowledge, our analysis is the first to identify the reason for this generalization gap between artificial and biologically-plausible learning rules, which can help guide future investigations into how the brain learns solutions that generalize.
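The abstract ties generalization to loss landscape curvature without saying how curvature is measured. One widely used proxy in the deep learning literature is the leading eigenvalue of the loss Hessian. The sketch below is an illustration under that assumption and is not taken from the paper: the toy quadratic loss, the function names (`grad`, `hvp`, `top_curvature`), and the finite-difference step `eps` are all hypothetical choices made here for clarity.

```python
import math
import random


def grad(w, diag):
    # Gradient of a hypothetical toy loss 0.5 * sum(d_i * w_i**2).
    # Its Hessian is exactly the diagonal matrix diag(d).
    return [d * wi for d, wi in zip(diag, w)]


def hvp(grad_fn, w, v, eps=1e-4):
    # Central finite-difference Hessian-vector product:
    # H v ~ (g(w + eps*v) - g(w - eps*v)) / (2*eps).
    plus = grad_fn([wi + eps * vi for wi, vi in zip(w, v)])
    minus = grad_fn([wi - eps * vi for wi, vi in zip(w, v)])
    return [(p - m) / (2.0 * eps) for p, m in zip(plus, minus)]


def norm(x):
    # Euclidean norm of a list of floats.
    return math.sqrt(sum(xi * xi for xi in x))


def top_curvature(grad_fn, w, n_iters=200, seed=0):
    # Power iteration on the Hessian at w. Returns the Rayleigh quotient,
    # which estimates the eigenvalue of largest magnitude.
    rng = random.Random(seed)
    v = [rng.gauss(0.0, 1.0) for _ in w]
    n = norm(v)
    v = [vi / n for vi in v]
    eig = 0.0
    for _ in range(n_iters):
        hv = hvp(grad_fn, w, v)
        eig = sum(vi * hi for vi, hi in zip(v, hv))
        n = norm(hv)
        if n == 0.0:
            break
        v = [hi / n for hi in hv]
    return eig


# Toy example: curvatures 5.0, 1.0 and 0.1 along the three weight axes.
curvatures = [5.0, 1.0, 0.1]
w0 = [1.0, -2.0, 0.5]
lam = top_curvature(lambda w: grad(w, curvatures), w0)
```

On this toy loss the estimate `lam` should approach 5.0, the largest entry of `curvatures`. For an actual RNN, the gradient function would come from the training loss, and an automatic-differentiation Hessian-vector product would normally replace the finite-difference one.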
